Agile development has become much more than just another buzzword. It’s how enterprises are building new services to compete with disruptors that deliver great user experiences through modern applications in finance, media delivery, retailing – basically, every industry.

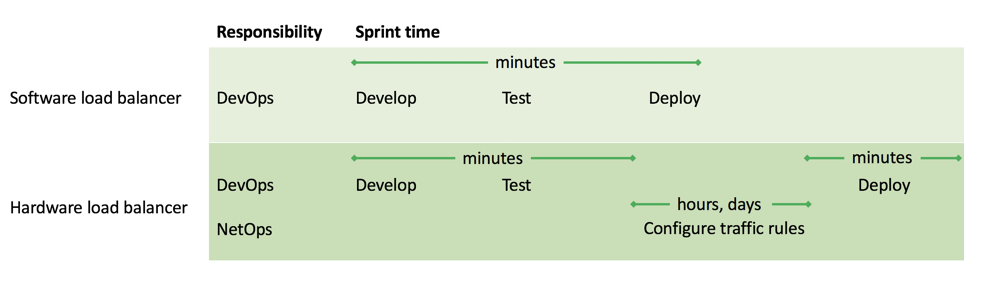

As a result, businesses are putting enormous pressure on developers and operations teams to stay ahead of the competition by delivering new features weekly, daily, or even hourly. But when they deploy those features, developers can’t get new traffic‑routing rules applied in real time; they have to wait for networking teams to reconfigure hardware load balancers, which can take hours, days, or even weeks. This causes real friction in enterprises: traditional networking teams hold on to their function and relevance, even though the problem is obvious to them too.

It’s Not Your Network Team’s Fault – It’s Your Load Balancer’s

It’s not the networking teams’ fault that they have become the single point of failure for all traffic flowing into and out of the data center. The problem dates back at least ten years, to a time when existing architectures prevented applications from scaling fast enough to meet growing consumer demand. Most enterprises responded by adopting hardware load balancers such as F5 BIG-IP, which were powerful enough to handle large volumes of traffic and distribute it across all the applications hosted in the data center.

As a result, the routing rules for many different applications were all deployed on the same device, and networking teams had to make sure the rules didn’t conflict with each other. That meant careful testing and QA, which took time, before any new or modified application could be deployed into production.

But times have changed. Apps can now be architected for scale, and traffic‑routing rules can be deployed in software solutions like NGINX Plus as part of the application lifecycle. As updates are deployed through a continuous integration/continuous delivery (CI/CD) pipeline, so are new rules. The responsibility for traffic‑routing rules has shifted to DevOps teams, and this change is enabling truly agile development and deployment. This software‑only development and delivery chain is a perfect example of infrastructure as code.
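To make "routing rules deployed as part of the application lifecycle" more concrete, here is a minimal sketch, assuming the team keeps its routing rules as data in the application repository and a pipeline step renders them into NGINX configuration. The `Route` type, the `render_nginx_config` function, the route names, and the addresses are hypothetical illustrations; only the emitted directives (`upstream`, `server`, `location`, `proxy_pass`) are standard NGINX.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Route:
    """A hypothetical Layer 7 routing rule: requests whose URI starts
    with `prefix` are proxied to the servers listed in `backends`."""
    name: str                   # used as the NGINX upstream group name
    prefix: str                 # URI prefix matched by a `location` block
    backends: Tuple[str, ...]   # host:port addresses of app instances


def render_nginx_config(routes: List[Route], listen_port: int = 80) -> str:
    """Render routing rules as NGINX `upstream` and `server` blocks,
    intended to be included inside an `http` context."""
    lines: List[str] = []
    # One upstream group per route; NGINX load balances across the
    # servers in a group (round robin by default).
    for route in routes:
        lines.append(f"upstream {route.name} {{")
        for backend in route.backends:
            lines.append(f"    server {backend};")
        lines.append("}")
        lines.append("")
    # A single virtual server that maps URI prefixes to upstream groups.
    lines.append("server {")
    lines.append(f"    listen {listen_port};")
    # Longest prefix first, purely for readability: NGINX itself picks
    # the longest matching prefix location regardless of order.
    for route in sorted(routes, key=lambda r: len(r.prefix), reverse=True):
        lines.append(f"    location {route.prefix} {{")
        lines.append(f"        proxy_pass http://{route.name};")
        lines.append("    }")
    lines.append("}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical route table, versioned alongside the application code.
    routes = [
        Route("catalog", "/catalog/", ("10.0.0.11:8080", "10.0.0.12:8080")),
        Route("checkout", "/checkout/", ("10.0.0.21:8080",)),
        Route("web", "/", ("10.0.0.31:8080",)),
    ]
    print(render_nginx_config(routes), end="")
```

In such a setup, a pipeline step might write the output to an included config file, validate it with `nginx -t`, and apply it with `nginx -s reload`, so a routing change ships in the same commit as the code that needs it. This is one illustrative workflow, not a prescribed NGINX Plus mechanism.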

Operate at Agile Speeds with Software Load Balancers

With software load balancers, teams can work in a fully agile world. DevOps teams – people who make ops changes at dev speeds – can change a configuration in seconds. This makes truly agile development and deployment possible from end to end. Most importantly, it shortens the entire organization’s time to market, which CIOs and CEOs care about deeply.

Switching to Software Doesn’t Mean Scrapping Your Hardware Investments

If you’ve invested in hardware load balancers, you don’t need to throw them out. Many NGINX customers continue to use them at the edge for basic packet handling or even SSL termination. There is no need, however, to make your development team put intelligent Layer 7 traffic routing into hardware boxes – or cloud versions of hardware boxes – that take days or weeks to update.

NGINX Helps Our Customers Deliver on the Promise of Agile

A few weeks ago, a large NGINX customer – whose organization embraced agile development principles years ago – told me that their entire DevOps team would quit en masse if they suddenly had to return to logging tickets just to implement new routing rules.

Another customer told me he was bragging internally that his team could deploy new routing rules in a matter of seconds with NGINX, while his colleagues in a different business unit were waiting weeks because they still depended on hardware load balancers. In today’s competitive world, why slow your development teams down?

The move to agile isn’t just a developer thing, or even just a developer and operations thing. It needs to become organization‑wide. CIOs need to acknowledge that their development teams are experiencing bottlenecks that are easily removed. To keep up with the ever‑faster cycle times in business and in consumer services today, you have to move to software load balancers, at least for application‑level changes.

Are you struggling with bottlenecks in your agile processes? Or have you already invested in a flexible infrastructure to support your agile development? If so, we’d love to hear from you. Leave a comment below.