0:00 Introduction

My name is Seungyeob Choi. I’m the lead engineer and manager of the streaming team at Verizon Digital Media Services (VDMS), where I’m in charge of developing the streaming platform. In this presentation I’m going to discuss streaming with NGINX. Everybody knows that NGINX is a very good web server, but not many people know that NGINX is also a very good streaming server.

0:29 Agenda

Let’s look at how we are going to use NGINX as a streaming server. First, I’m going to review different methods of offering video from your website. Then I’m going to present the NGINX RTMP module and review NGINX Plus’ streaming features. The most important part of this presentation is going to be how to do HTTP VOD streaming over HLS and DASH using NGINX.

1:03 Overview of Streaming

Let’s review different methods of offering video from your website.

1:11 Progressive Download and HTML5

The oldest and most primitive way of offering video is progressive download. The client has a media player plugin in the browser; the plugin requests the media file and plays the video while it is still being downloaded. There are two requirements for the video to play smoothly: the download speed has to be higher than the playback speed, and the metadata of the video has to be located at the beginning of the file. If the metadata is located at the end of the file, then the player cannot start the video until it has received that part.

That is a very old way of offering video. Most browsers today support HTML5, which allows videos to be played natively by the browser. HTML5 adds the video tag, but that is only part of the story. The most important part is that the browser can now natively play video files, mostly MP4. Unlike the old progressive download method, today’s browsers are smart enough to make multiple HTTP byte-range requests for the video file. So even if the metadata is located at the end of the file, browsers can start the video quickly.

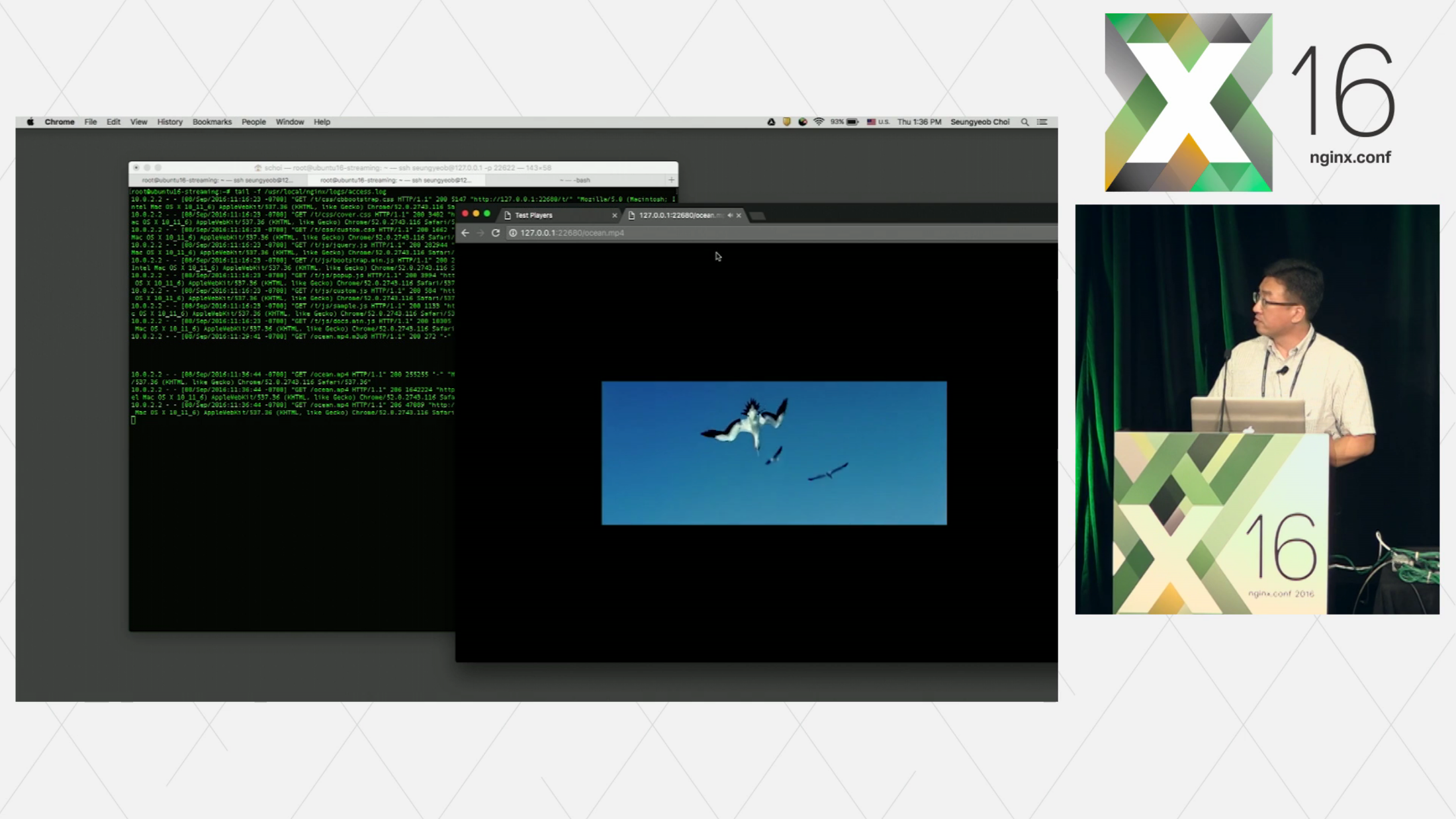

3:03 Demo Video Streaming

Let me show you an example of native playback by a browser. I just played an MP4 file in a browser, and you can see that it is not just a single request. We get multiple byte-range requests, and the browser requests the metadata part of the video immediately so that it can play the video without any delay.
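To make the byte-range behaviour a bit more concrete, here is a minimal Python sketch of how a server could interpret a single `Range` header. The function name `parse_byte_range` is hypothetical, and the sketch only handles the single-range forms (`bytes=a-b`, `bytes=a-`, and `bytes=-n`), not multi-range requests.

```python
def parse_byte_range(header, file_size):
    """Return the inclusive (start, end) byte offsets for a single-range header."""
    unit, _, spec = header.partition("=")
    if unit.strip() != "bytes" or "," in spec:
        raise ValueError("only single 'bytes' ranges are handled in this sketch")

    first, _, last = spec.strip().partition("-")
    if first == "":
        # Suffix form "bytes=-N": the final N bytes of the file,
        # e.g. MP4 metadata that was written at the end.
        length = int(last)
        start = max(file_size - length, 0)
        end = file_size - 1
    else:
        start = int(first)
        # An open-ended range ("bytes=N-") runs to the last byte.
        end = min(int(last), file_size - 1) if last else file_size - 1

    if start > end:
        raise ValueError("range not satisfiable")
    return start, end
```

A browser that finds the metadata at the end of the file could, for example, send a suffix range such as `bytes=-500` first and then go back for the media data.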

4:08 RTMP

RTMP is a proprietary protocol by Adobe, and it became the most popular streaming protocol in the mid-2000s. The reason was that Adobe Flash Media Server was one of the best streaming solutions at the time. A good thing about RTMP back then was that Adobe Flash Player was installed almost everywhere, so RTMP could be played on almost any client. That is no longer the case. The advantage of RTMP that is still valued today is its very low latency. If you deliver an RTMP live stream, the end-to-end latency can be just 2 to 3 seconds. That is almost impossible with other streaming protocols.

One disadvantage of RTMP is that it requires the Adobe Flash Player plugin, which nobody wants to install these days. Also, because it is a proprietary protocol, RTMP cannot use the standard HTTP infrastructure for delivery, caching, and load balancing. The RTMP protocol was designed before the technology for dynamic bitrate switching had matured, so RTMP lacks bitrate switching altogether.

5:50 HTTP Based Streaming Protocols

More and more streaming traffic is moving from proprietary protocols like RTMP to HTTP-based protocols. The basic idea of HTTP-based protocols is that the original video file, such as an MP4, is divided into smaller chunks. Each chunk represents only a few seconds of video, and the server provides a manifest file, which is like a playlist that lists all the segment files. The player requests the manifest and, once it receives it, makes a sequence of requests for the individual segment files. It plays the first segment while downloading the second; when the first segment is done, it starts playing the second segment while requesting the third. This technology was first introduced in 2007 by Move Networks. After that, Microsoft developed Smooth Streaming, Adobe developed HDS, and Apple developed HLS. So it seems like everybody made a different implementation of the same idea.
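As a rough illustration of the manifest-and-segments idea, the following Python sketch parses a small HLS-style media playlist and pairs each segment being played with the one a player would download in the meantime. The playlist content, the file names, and both function names are made up for this example.

```python
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:10
#EXTINF:10.0,
segment0.ts
#EXTINF:10.0,
segment1.ts
#EXTINF:4.5,
segment2.ts
#EXT-X-ENDLIST
"""


def parse_media_playlist(text):
    """Return a list of (duration_seconds, uri) in playback order."""
    segments = []
    pending_duration = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("#EXTINF:"):
            # "#EXTINF:<duration>,<optional title>"
            pending_duration = float(line[len("#EXTINF:"):].split(",")[0])
        elif line and not line.startswith("#") and pending_duration is not None:
            segments.append((pending_duration, line))
            pending_duration = None
    return segments


def fetch_plan(segments):
    """Pair each segment being played with the segment downloaded meanwhile."""
    uris = [uri for _, uri in segments]
    return list(zip(uris, uris[1:] + [None]))
```

The last pair has `None` as its second element because there is nothing left to download while the final segment plays.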

People didn’t like that situation, so they came up with a standardized HTTP-based protocol called MPEG-DASH. Since then, Microsoft Smooth Streaming and Adobe HDS have been losing their foothold in the streaming industry, but Apple keeps pushing its HLS protocol and MPEG-DASH is becoming more and more widely accepted. So HLS and MPEG-DASH are the two thriving HTTP-based protocols today.

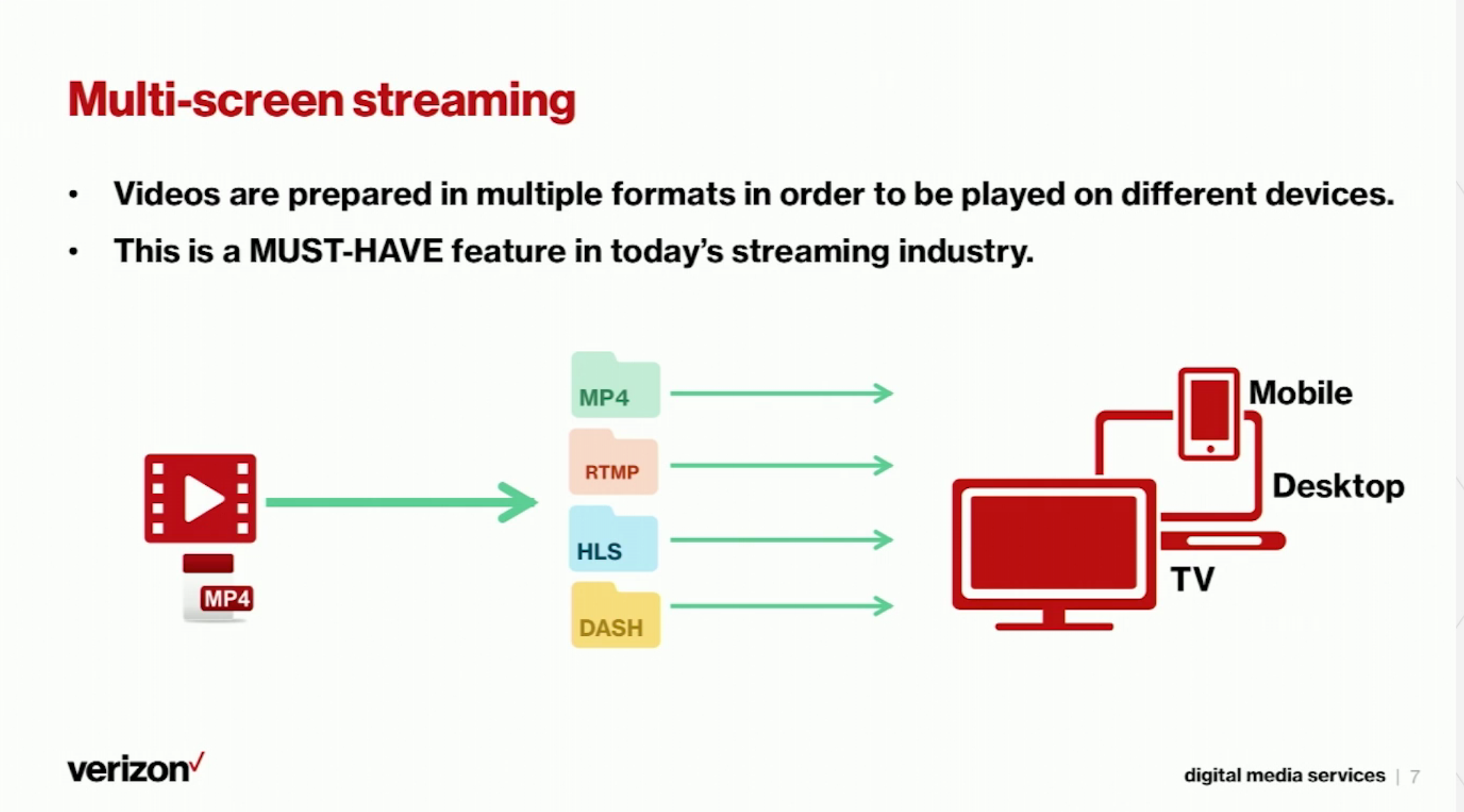

7:43 Multi-Screen Streaming

Multi-screen delivery is something content providers are struggling with. The goal is to support as many clients as possible, but each client can only play certain types of video. Browsers that support Media Source Extensions can play MPEG-DASH. Apple devices like the iPhone and iPad can play HLS. Old browsers that have the Adobe Flash plugin can play RTMP. So in order to cover all the different types of clients, we want to provide all these different formats from the server. MP4 is simple because it is a file that can be delivered over HTTP. In order to offer RTMP, we need an RTMP server like Adobe Media Server or Wowza. In order to offer HLS and DASH, the file has to be processed before it is delivered over HTTP. So we can consider HLS and DASH to be in the same category: they require an additional repackaging step, and then the files are delivered over HTTP.

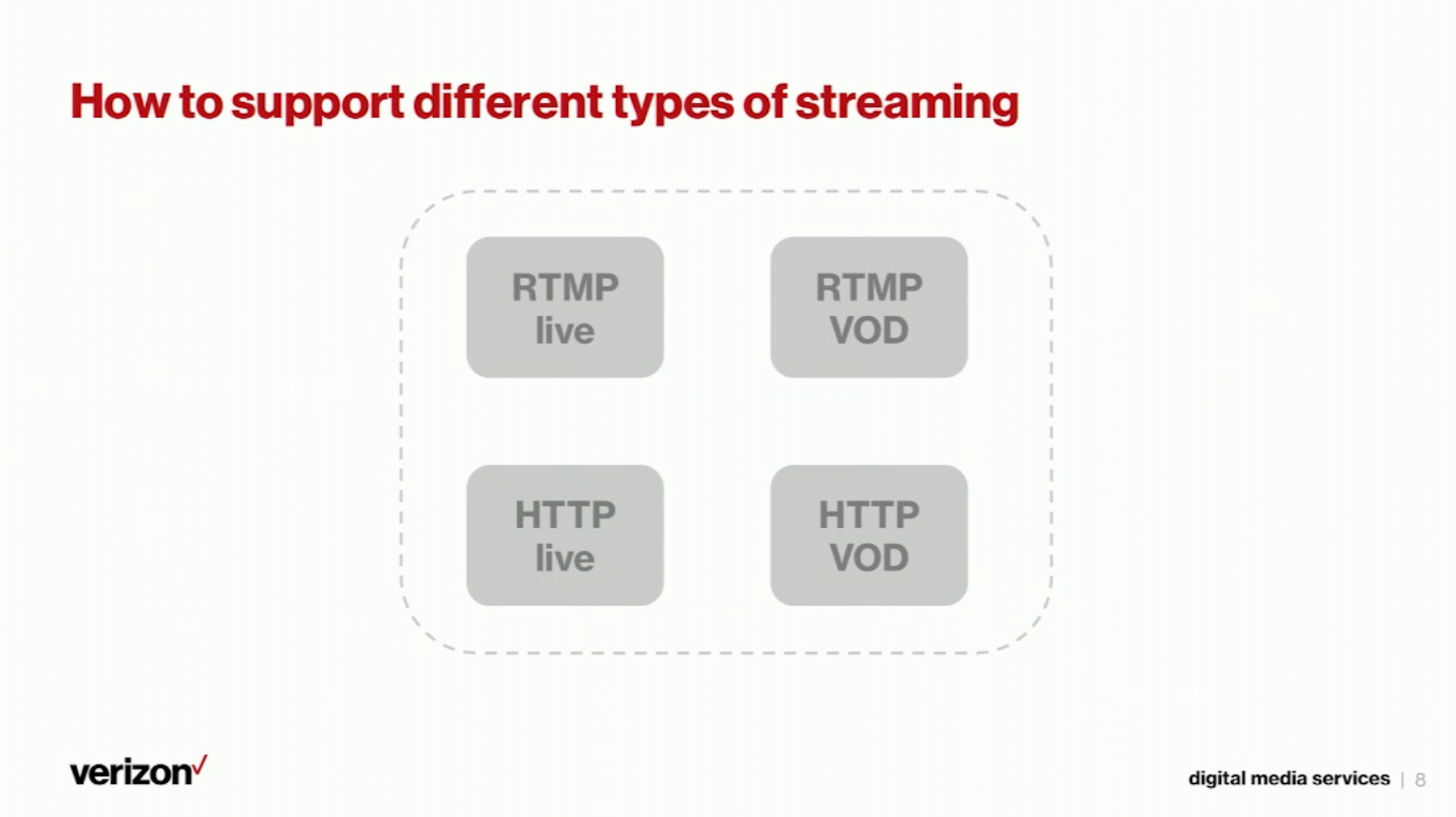

9:22 How To Support Different Types of Streaming

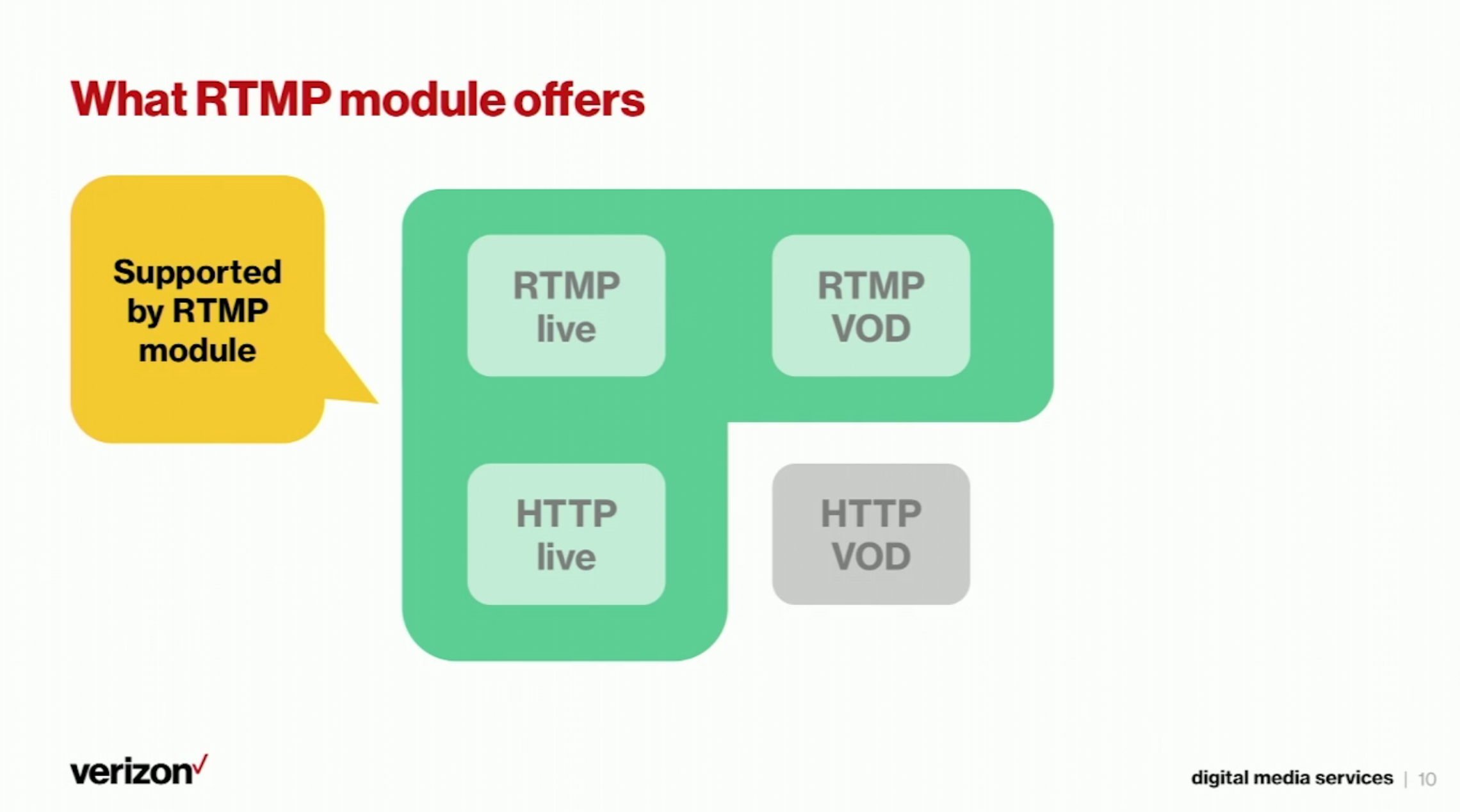

Let’s look at the types of streaming that we want to support: RTMP live, RTMP VOD, HTTP live (including HLS live and DASH live), and HTTP VOD (including HLS VOD and DASH VOD).

9:46 Streaming Using RTMP Module

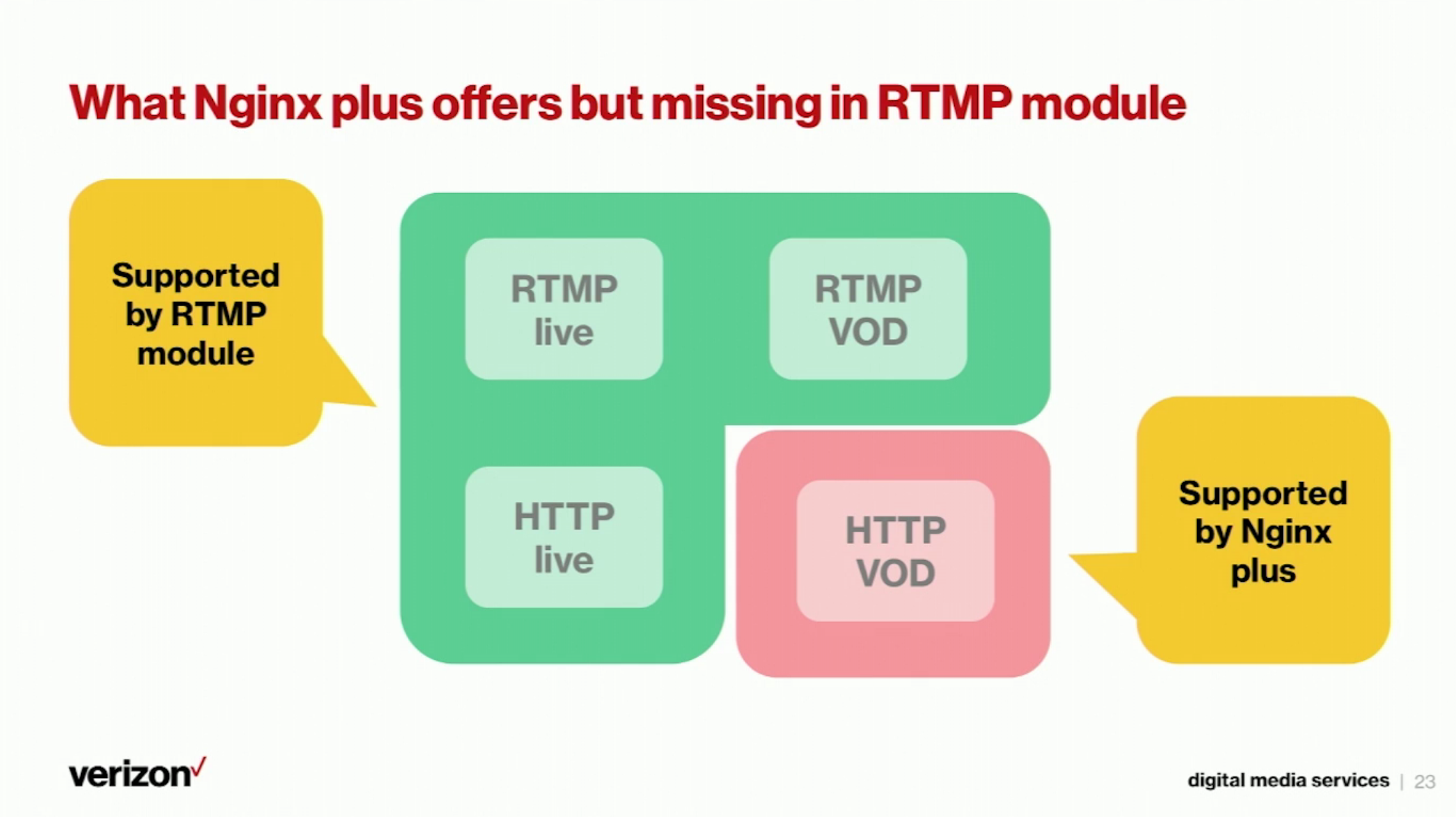

9:48 What RTMP Module Offers

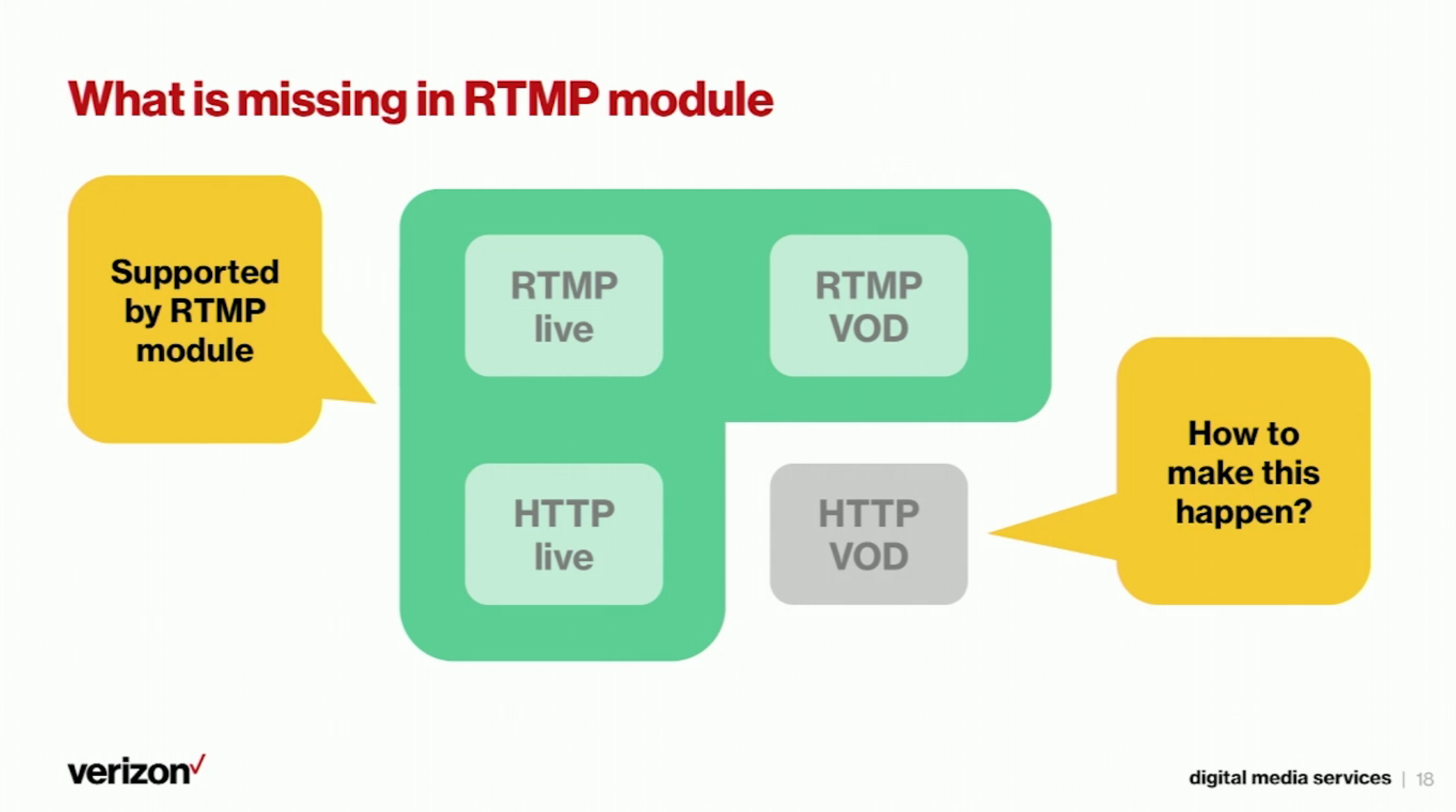

There is an open source module called the NGINX RTMP module that covers RTMP live, RTMP VOD, and HTTP live (including HLS live and DASH live), but not HTTP VOD.

9:58 Download and Install NGINX RTMP Module

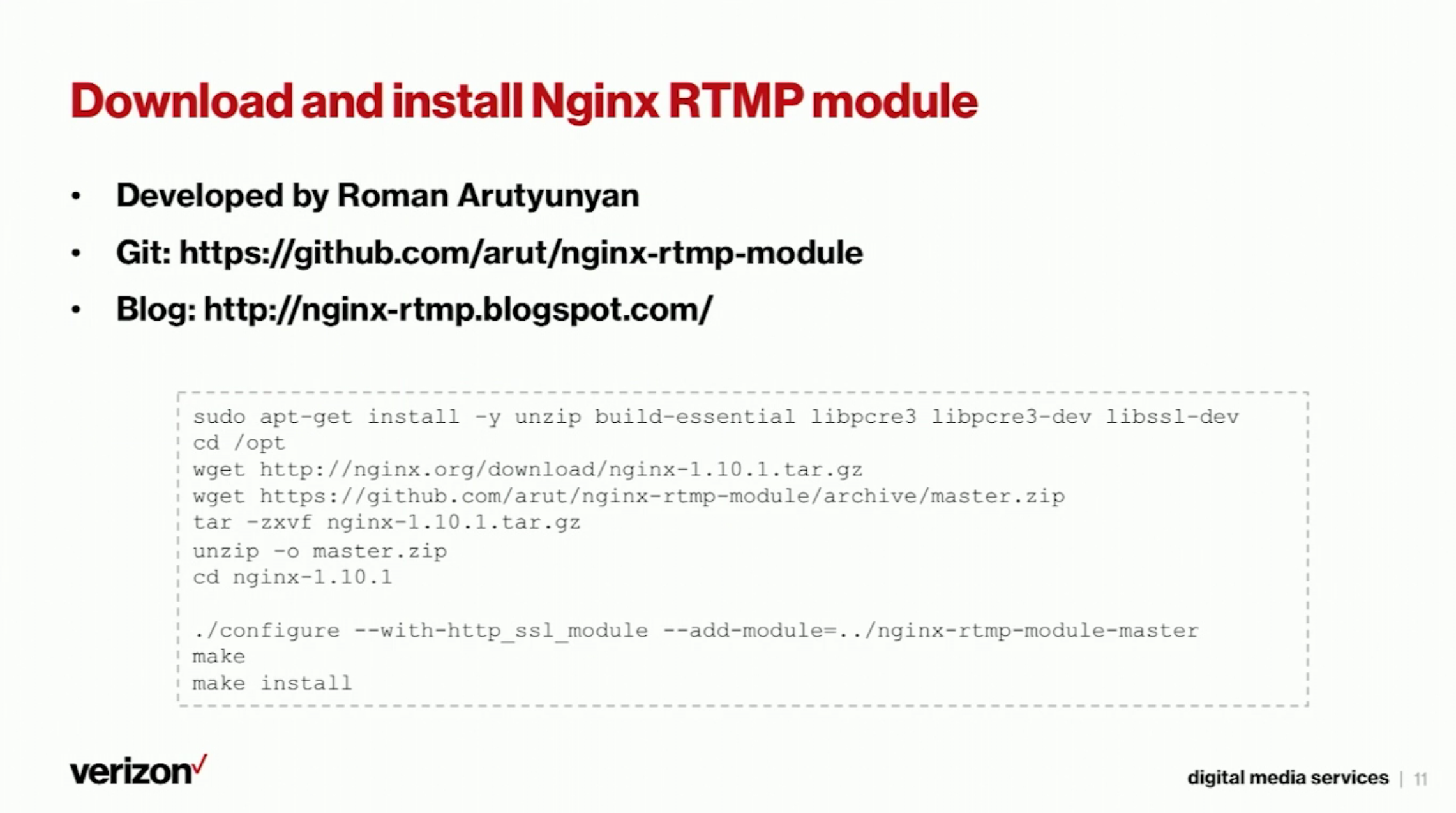

The NGINX RTMP module can be downloaded from https://github.com/arut/nginx-rtmp-module, and all the information about the module can be found on the developer’s blog. You download the NGINX source, download the RTMP module source, and then compile NGINX with the RTMP module added. Once you install it, NGINX works as a streaming server.

10:28 RTMP VOD

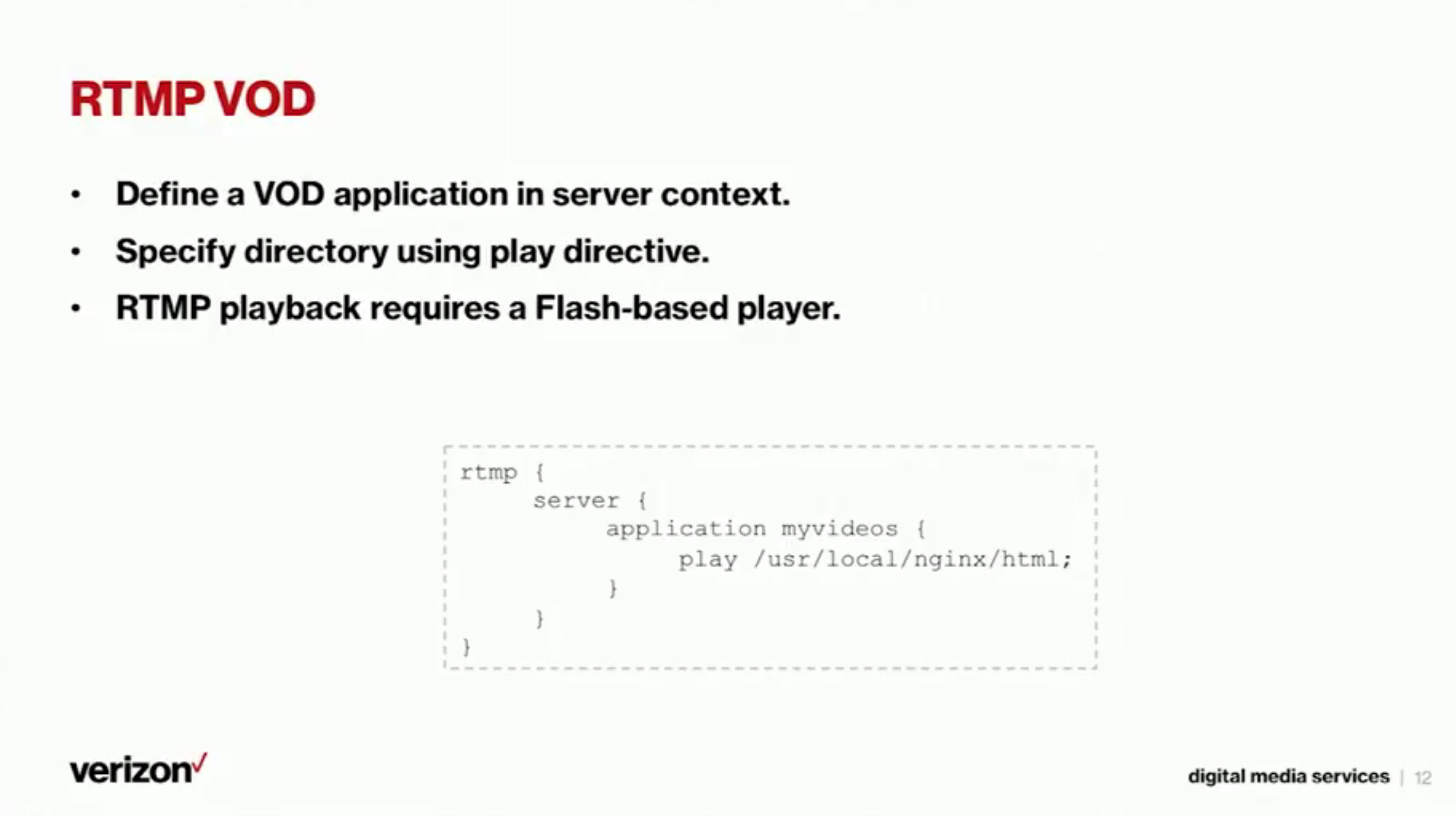

If you install the NGINX RTMP module, then you can offer RTMP streaming. If you want to offer RTMP VOD, you define an “rtmp” block in the NGINX config file. It is a separate block outside the “http” block. Inside the “rtmp” block you add a server, define an application, and specify the location of the media files using the “play” directive.

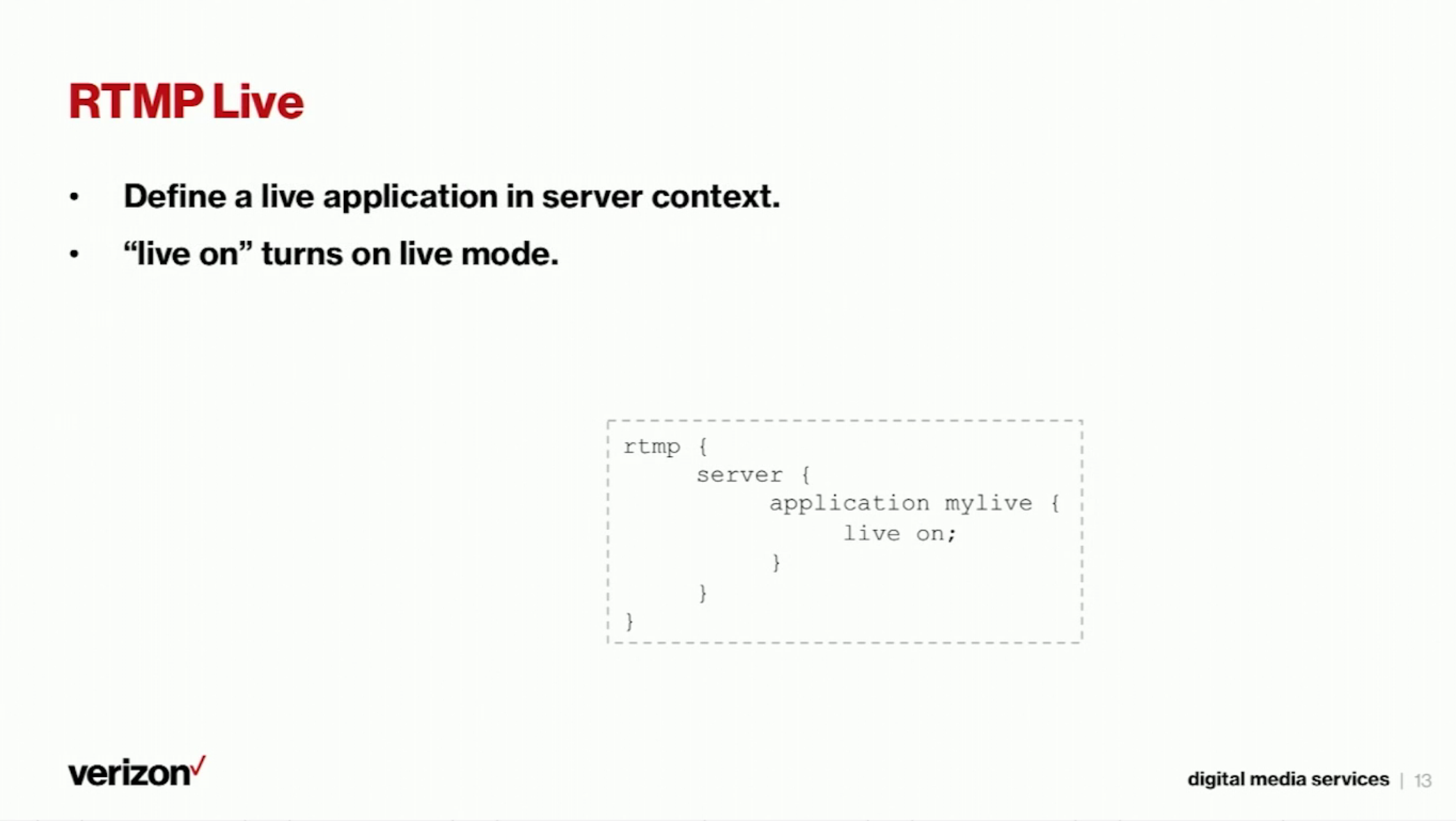

11:03 RTMP Live

You can also define a live application. The difference between VOD and Live is: VOD delivers from existing files and Live delivers a live stream that is being ingested. If you put “live on”, then it enables live mode for that application.

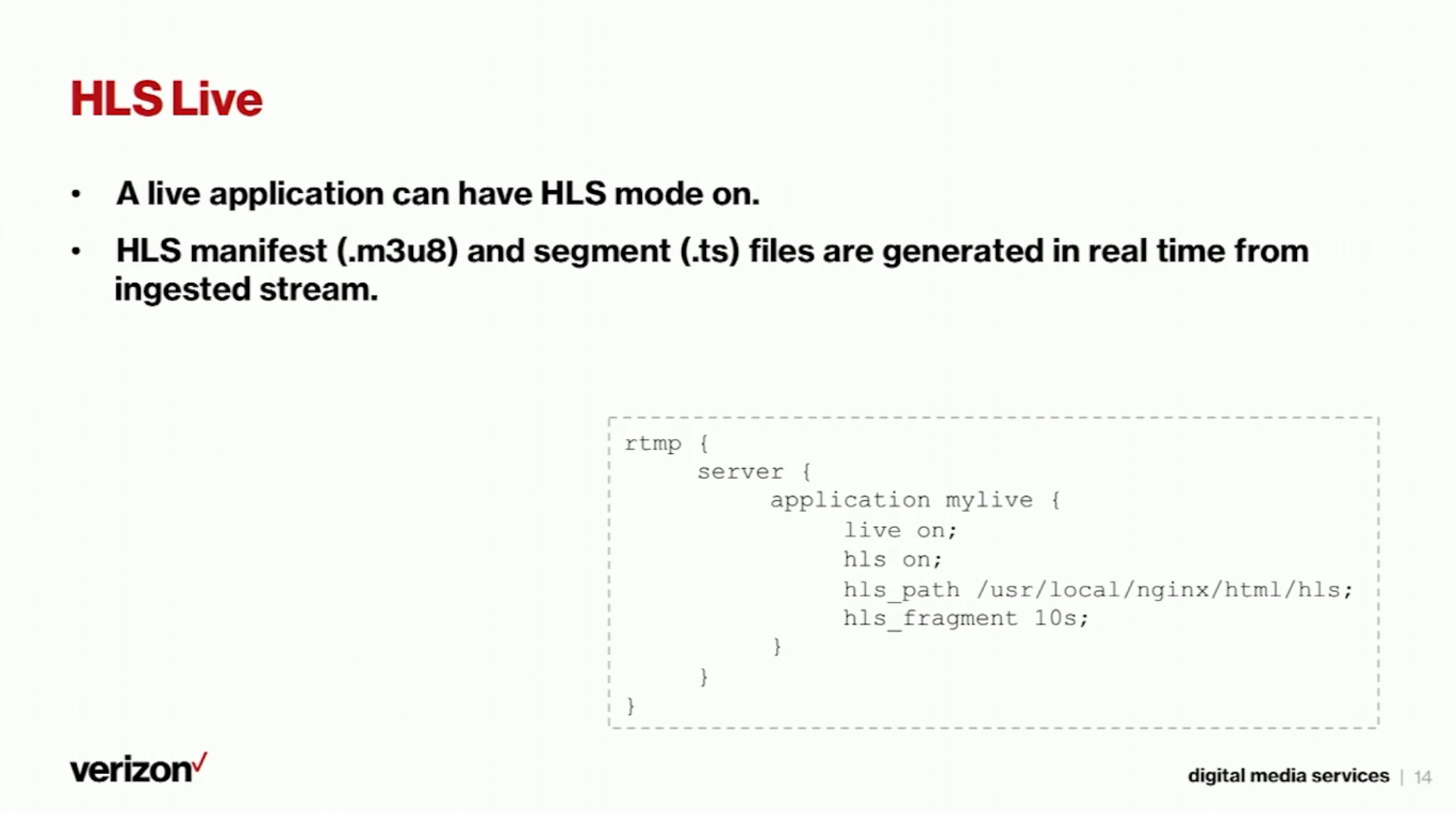

11:34 HLS Live

The NGINX RTMP module supports HLS live. You can enable HLS on a live application by using “hls on” and then specifying an “hls_path” where NGINX writes the generated files. You also need to specify “hls_fragment”, which is the target duration of each HLS segment.

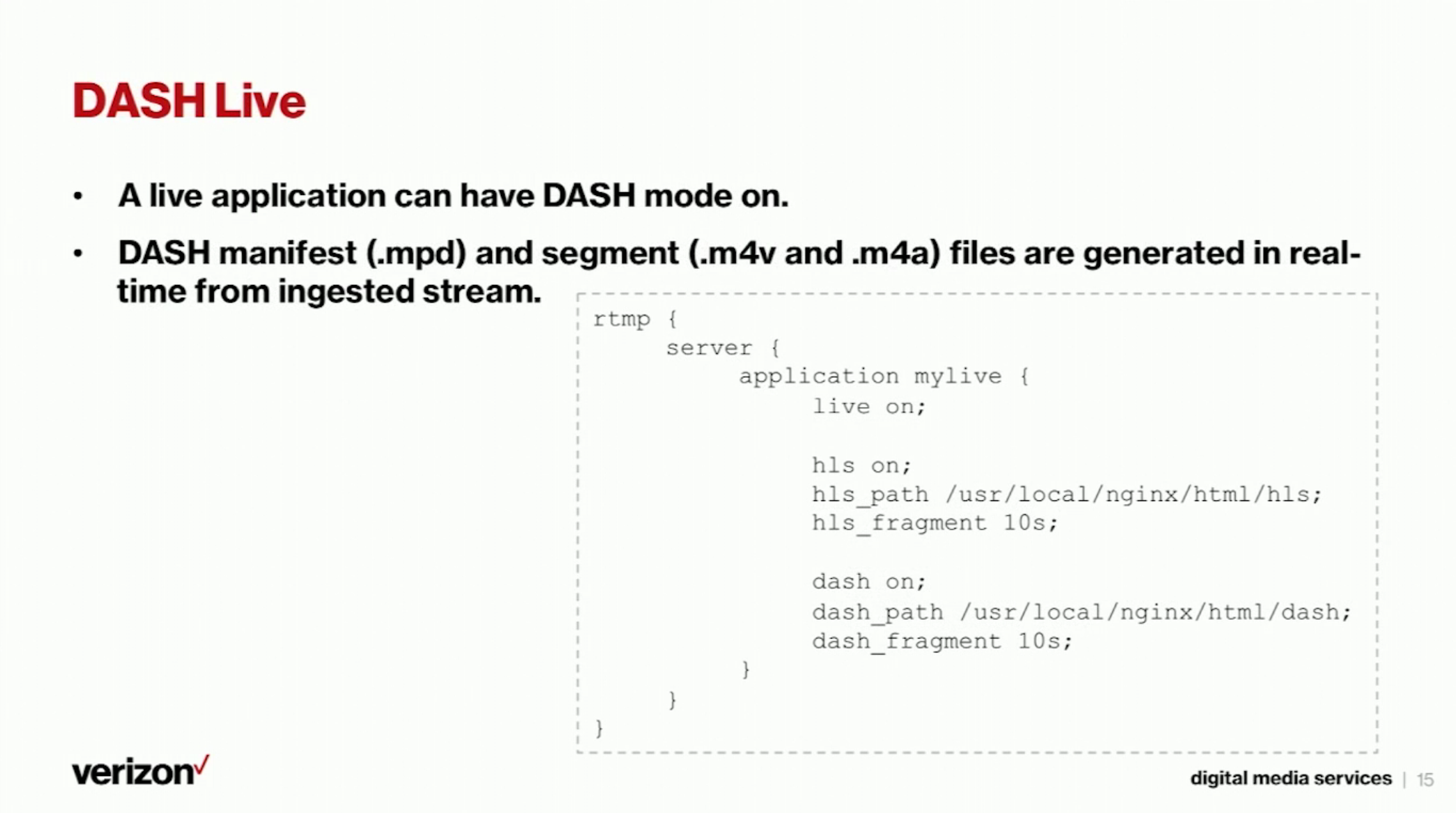

11:58 DASH Live

We can also enable DASH on a live application by adding “dash on” and “dash_path” that is where the DASH files are generated as well as the segment duration for DASH.
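The directives mentioned in the RTMP VOD, RTMP live, HLS live, and DASH live sections can be collected in one `rtmp` block. The slide contents are not reproduced in this transcript, so the Python sketch below only renders one possible configuration as text. The application names `vod` and `live`, the directory paths, port 1935, and the 5-second fragment length are all assumptions; `render_rtmp_config` is a hypothetical helper.

```python
def render_rtmp_config(media_dir, hls_dir, dash_dir, fragment="5s", port=1935):
    """Render an rtmp block for nginx.conf (placed outside the http block)."""
    return f"""rtmp {{
    server {{
        listen {port};

        # VOD: serve existing files from media_dir over RTMP
        application vod {{
            play {media_dir};
        }}

        # Live: accept a published stream and also write HLS and DASH files
        application live {{
            live on;

            hls on;
            hls_path {hls_dir};
            hls_fragment {fragment};

            dash on;
            dash_path {dash_dir};
            dash_fragment {fragment};
        }}
    }}
}}
"""
```

Calling `print(render_rtmp_config("/var/media", "/tmp/hls", "/tmp/dash"))` gives a block that could be pasted into a test configuration and adjusted from there.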

12:12 Demo Streaming With NGINX RTMP Module

Let me show you how it works. So I’m running NGINX with the RTMP module and I’m publishing a stream. Then you can see the files are being generated by the NGINX RTMP module. The first segment was complete, the second segment is being appended. Now it generated the third segment, the HLS files are being generated and DASH files are being generated in the DASH directory. Now we have seen that the NGINX RTMP module can do RTMP VOD, Live, and HTTP Live.

13:32 VOD By Pre-packaging

13:41 What is Missing in RTMP Module

What are we going to do about HTTP VOD?

13:46 Pre-Packaging to HLS Using ffmpeg

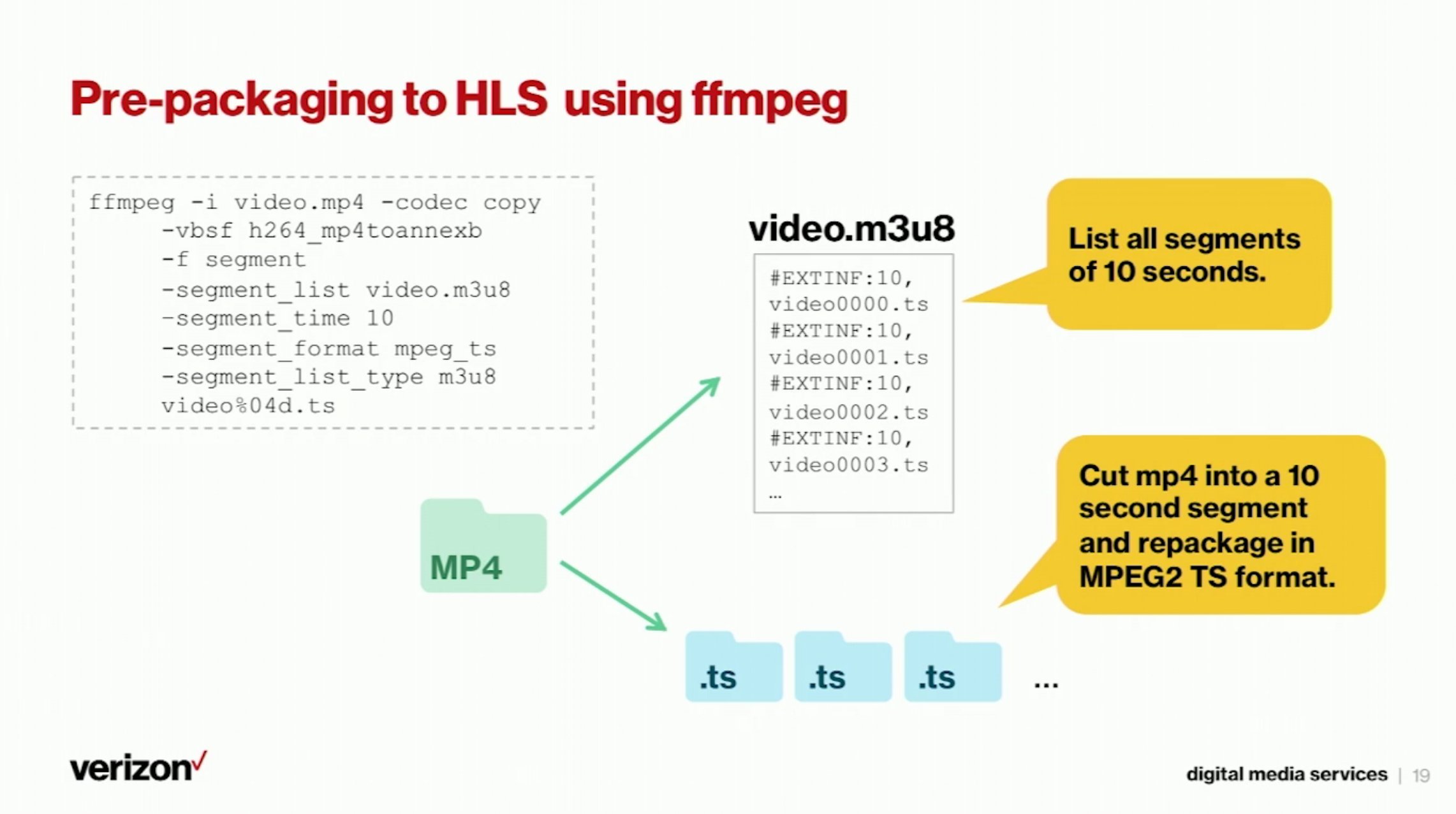

HTTP VOD can be prepared using tools like ffmpeg. If you run ffmpeg with these parameters, it generates an HLS manifest file and HLS segment files. You specify the segment duration with “-segment_time 10”, which asks for ten-second segments. You also need to specify the segment format “mpegts”, because each HLS segment has to be packaged in the MPEG-2 TS format. The generated m3u8 manifest file and ts segment files can then be delivered over HTTP.
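The exact command from the slide is not part of this transcript. As a sketch, the Python helper below assembles one plausible ffmpeg invocation using the segment muxer; the helper name `hls_prepackage_command` and the output naming scheme are assumptions, and the command is only built, not executed.

```python
import shlex


def hls_prepackage_command(source, out_prefix, segment_seconds=10):
    """Build an ffmpeg command that splits `source` into MPEG-TS segments."""
    return [
        "ffmpeg", "-i", source,
        "-codec", "copy",                        # repackage only, no re-encoding
        "-f", "segment",                         # use the segment muxer
        "-segment_time", str(segment_seconds),   # target segment duration
        "-segment_format", "mpegts",             # each segment is MPEG-2 TS
        "-segment_list", f"{out_prefix}.m3u8",   # manifest written alongside
        "-segment_list_type", "m3u8",
        f"{out_prefix}%03d.ts",                  # sample000.ts, sample001.ts, ...
    ]


if __name__ == "__main__":
    print(shlex.join(hls_prepackage_command("sample.mp4", "sample")))
```

Because the streams are copied rather than re-encoded, ffmpeg can only cut at keyframes, so the actual segment durations may differ from the requested value.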

14:35 Pre-Packaging To MPEG-DASH Using gpac (MP4Box)

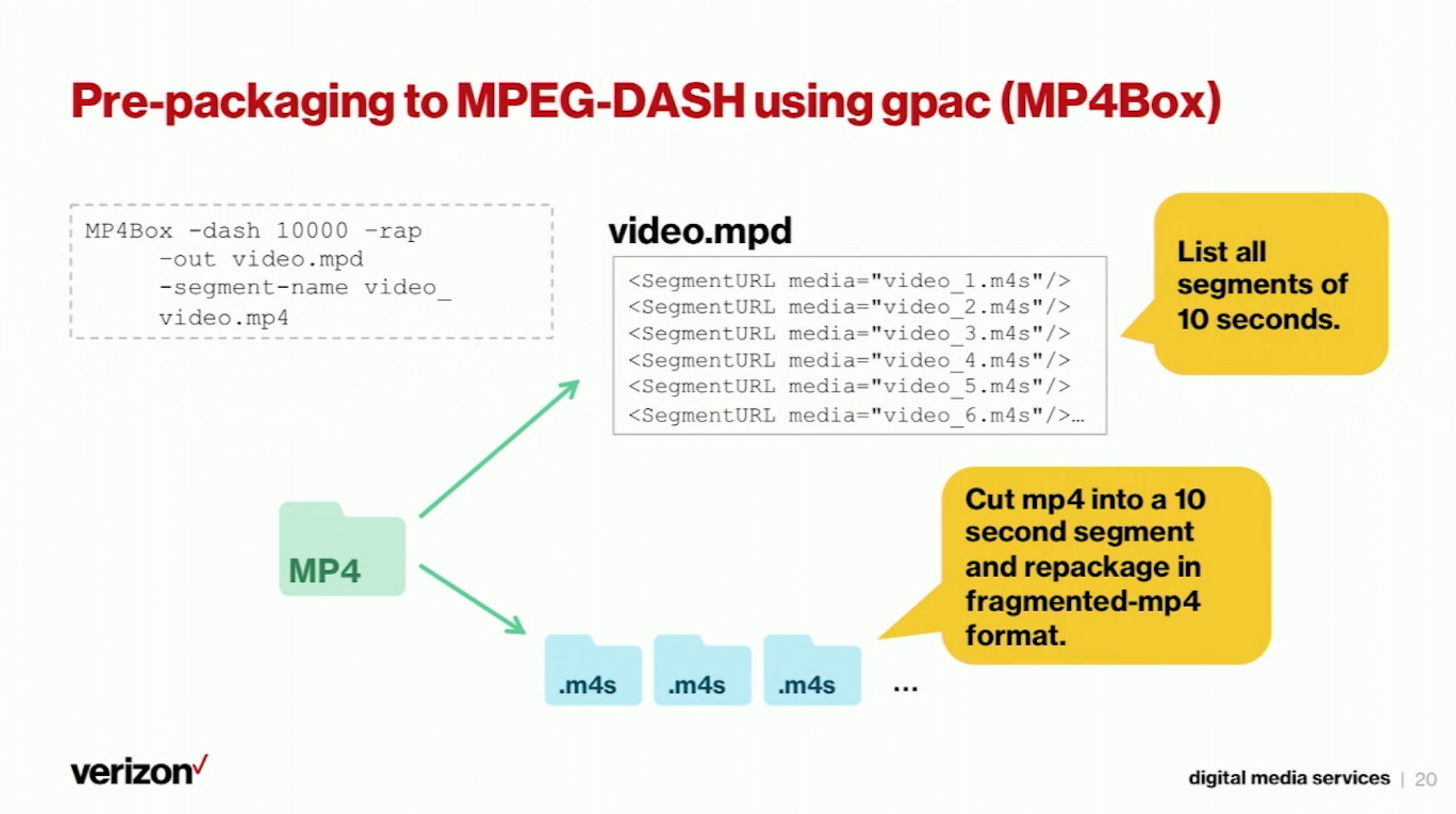

You can also generate DASH files using a different tool called “MP4Box”, which is part of the gpac package. If you run MP4Box with these parameters, you get the files for DASH streaming: a manifest file in the .mpd format and the segment files. Then you just put those files on the web server and deliver them over HTTP.
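The MP4Box parameters from the slide are likewise not in the transcript. A minimal sketch of a comparable invocation, built in Python, might look like this; `dash_prepackage_command` is a hypothetical helper, and the 10-second segment length is an assumption.

```python
def dash_prepackage_command(source, manifest, segment_ms=10000):
    """Build an MP4Box command that writes a DASH manifest and segments."""
    return [
        "MP4Box",
        "-dash", str(segment_ms),   # segment duration in milliseconds
        "-rap",                     # start segments at random access points
        "-out", manifest,           # the .mpd manifest to write
        source,
    ]
```

For example, `dash_prepackage_command("sample.mp4", "sample.mpd")` describes a run that writes `sample.mpd` plus its segment files next to it.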

15:09 We Don’t Like This Method

We don’t like this method. The biggest reason is that we don’t want an additional step before we provision a video file. Every time we make a new file available for viewing, we have to run ffmpeg and we have to run MP4Box. That is annoying, and it also consumes triple the storage because we want to keep MP4 plus HLS plus DASH. Also, if we want to change a parameter such as the segment duration from six seconds to four seconds, we have to reprocess the entire content library. If we only have a couple of files it is not a big deal, but if we have hundreds of thousands of files, a single parameter change means re-running ffmpeg and MP4Box over hundreds of thousands of files. That is something you want to avoid. So the question here is: is there any way to generate the HTTP streaming files, the manifest and segments for HLS and the manifest and segments for DASH, on the fly? On the fly means that files are generated only when they are requested, without having to pre-process the entire file.

16:42 Streaming By NGINX Plus

NGINX Plus offers some streaming features.

16:49 NGINX Plus RTMP Module

The streaming feature offered by NGINX Plus is exactly the HTTP VOD that is not covered by the NGINX RTMP module.

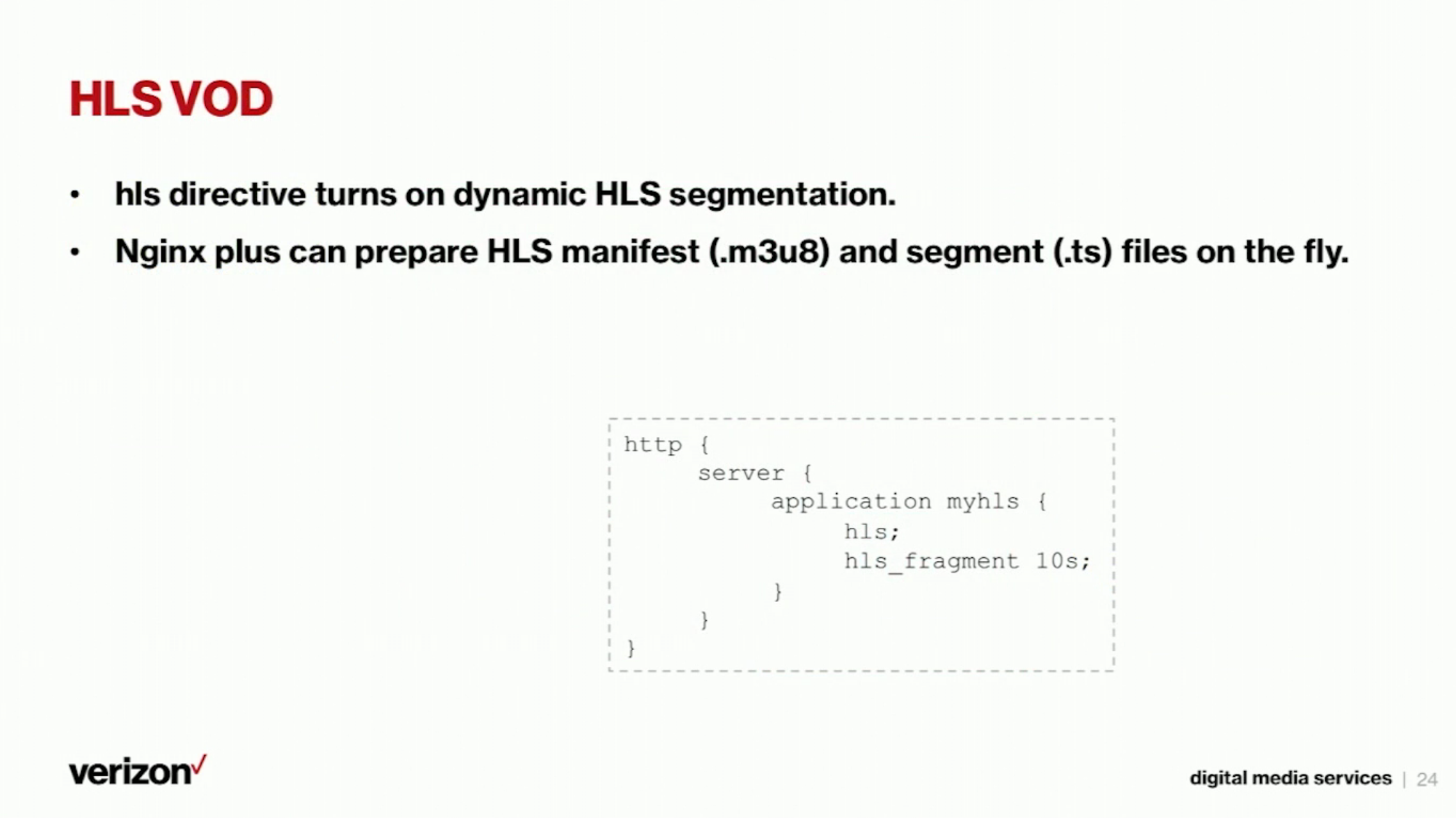

16:58 HLS VOD

If you install NGINX Plus, you can enable HLS by adding “hls” inside a location block, followed by “hls_fragment”. Once you do that, when a client requests the m3u8 manifest file, NGINX Plus generates that manifest dynamically. When the client requests a segment file, NGINX Plus generates that segment dynamically and returns it.

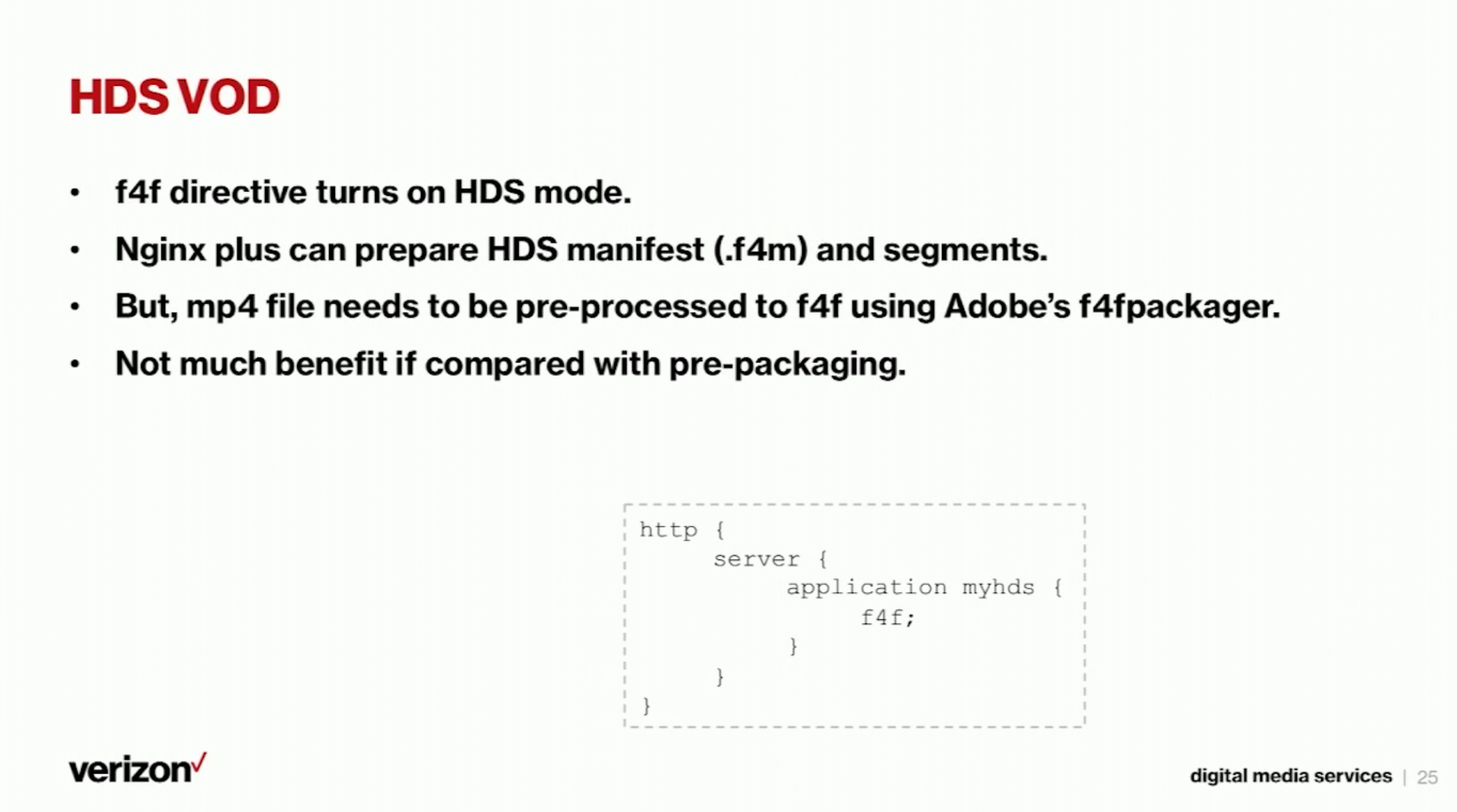

17:33 HDS VOD

NGINX Plus can also do HDS as well as HLS, but HDS support is not complete: you still need to run the f4f packager. The f4f packager is from Adobe, so there is no great benefit in using the HDS feature of NGINX Plus.

17:57 We Don’t Really Like It Either

We don’t like this either because NGINX Plus is a little expensive. If all you want is streaming, then maybe you don’t want to pay that much money. Some people also don’t like the HLS output from NGINX Plus. NGINX Plus doesn’t make a unique filename for each segment; it uses the query string to identify different segments. So every segment has the same filename.

The first segment has a query string like “?start=0&end=10”, which represents the first 10-second segment. The second segment has “?start=10&end=20”. In most cases that is OK, but in some situations having the same filename for very different files, with only the query string differing, can cause issues with certain caching infrastructure. Another thing is that HDS support in NGINX Plus is not complete: it still requires additional pre-processing. And MPEG-DASH is missing entirely.

19:17 Now We Have A Dilemma

So what we have so far is: the NGINX RTMP module can do RTMP live and VOD, and it can also do HLS and DASH live. NGINX Plus can do HLS VOD, and it can also do HDS VOD, but that is not complete. Is there any way to get HLS and DASH VOD on the fly using basic NGINX, without spending too much?

20:03 HLS VOD By NGINX and ffmpeg

I’m going to go through a method to achieve that.

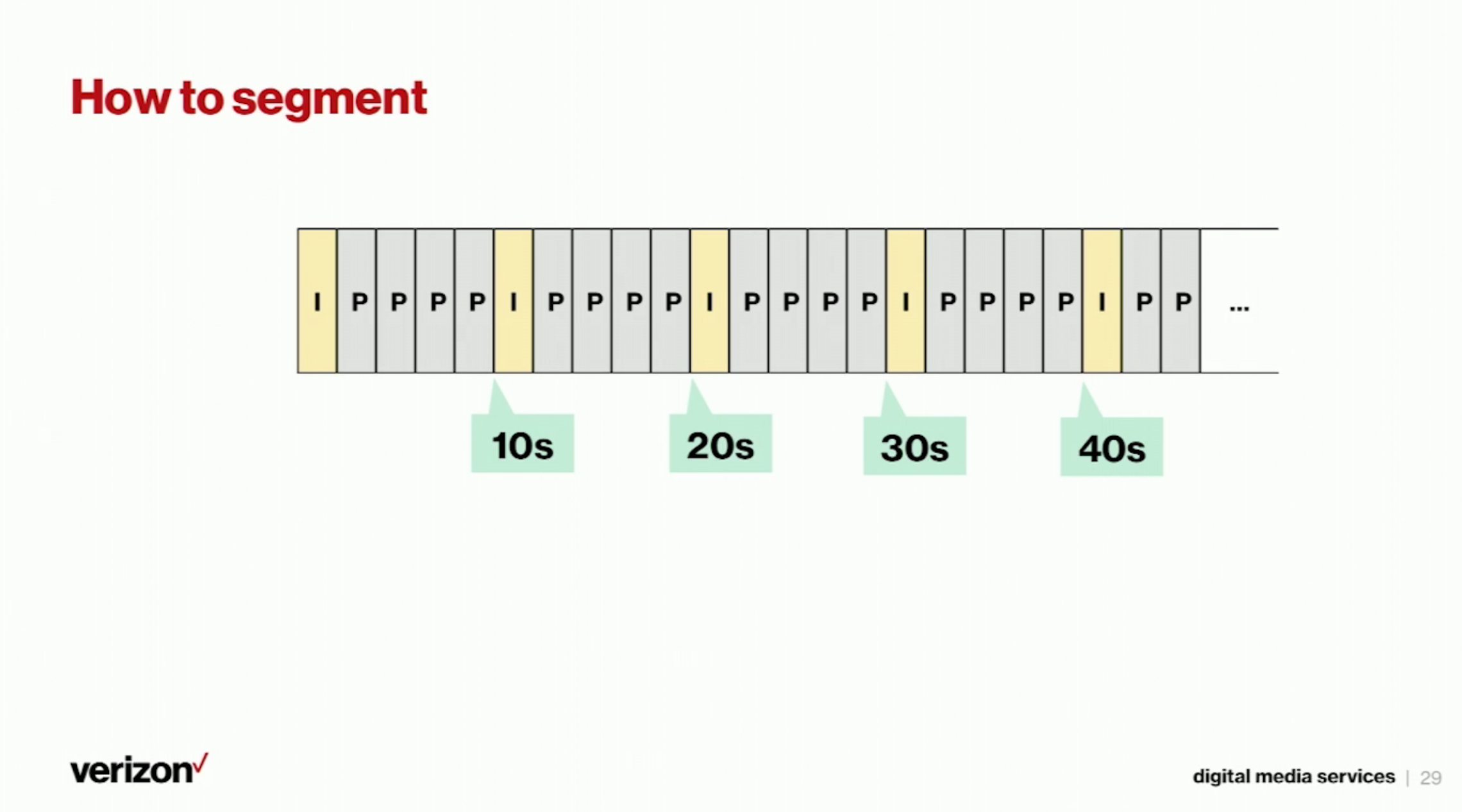

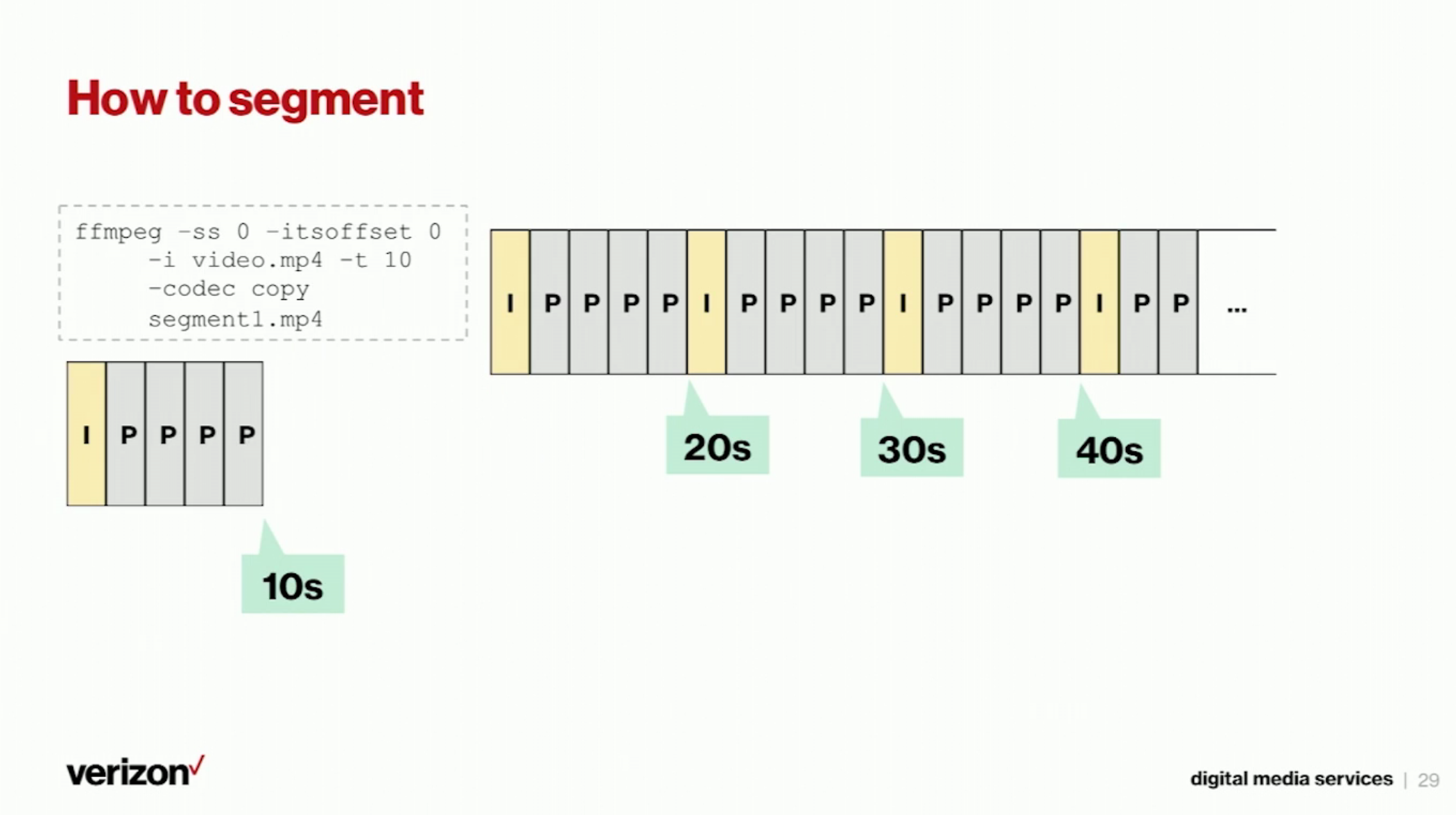

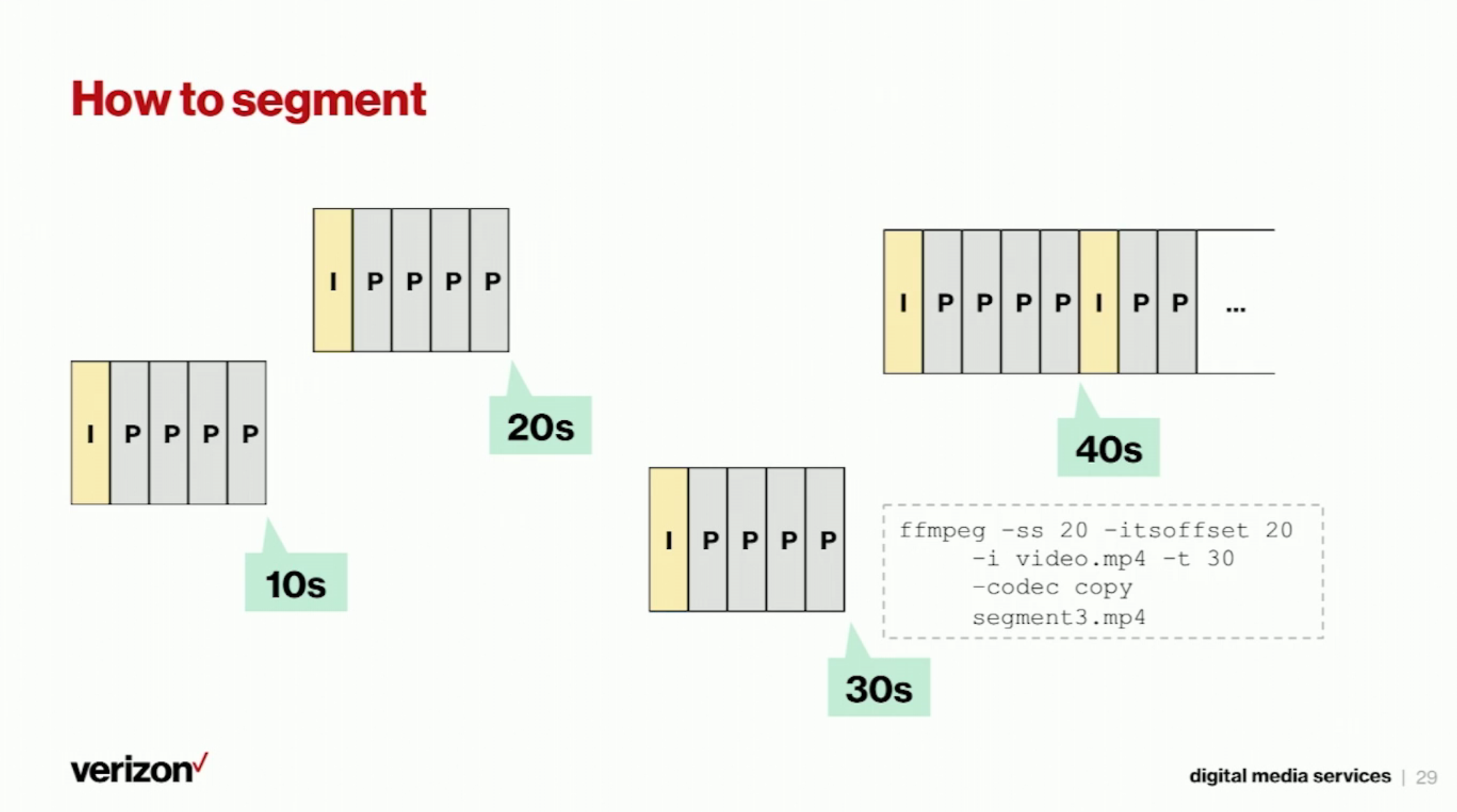

20:10 How To Segment

So the most important part of segmenting and packaging a file is how to cut the file into smaller segments. We can do that using ffmpeg.

20:25 How To Segment (cont)

ffmpeg can take “-ss”, the start time, and “-t”, the duration (or “-to”, the end time). If you run this, you get the first 10-second segment. If you put 10 as the start time and 20 as the end time, you get the second 10-second segment. In the same way you can get the third 10-second segment.
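Here is a small Python sketch of this naive, fixed-length cutting. It computes the time windows and builds the matching ffmpeg commands without running them. The function names are hypothetical, and `-c copy` with `-f mpegts` is an assumption about how the cut would be packaged.

```python
def fixed_windows(total_seconds, segment_seconds):
    """Return (start, duration) pairs that cover the file in equal steps."""
    windows = []
    start = 0.0
    while start < total_seconds:
        duration = min(segment_seconds, total_seconds - start)
        windows.append((start, duration))
        start += segment_seconds
    return windows


def cut_command(source, start, duration, output):
    """Build an ffmpeg command that copies one time window into a TS file."""
    return [
        "ffmpeg",
        "-ss", f"{start:g}",      # start time of the cut
        "-t", f"{duration:g}",    # length of the cut
        "-i", source,
        "-c", "copy",             # no re-encoding
        "-f", "mpegts",
        output,
    ]
```

For a 25-second file and 10-second segments, `fixed_windows(25, 10)` gives windows starting at 0, 10, and 20 seconds, with the last one only 5 seconds long.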

20:40 How to Segment (cont.)

What makes it a little tricky is that there are different types of frames. First there is the I-frame, which has all of the information required to decode itself. The other kinds of frames are P-frames and B-frames. For simplicity I didn’t include B-frames here; they are similar to P-frames in the sense that they don’t carry the full picture but only the difference from other frames. An I-frame can be located anywhere in the file, but a P-frame cannot be the first frame.

In this example, an I-frame has been inserted regularly every ten seconds, but that is extremely rare in the real world.

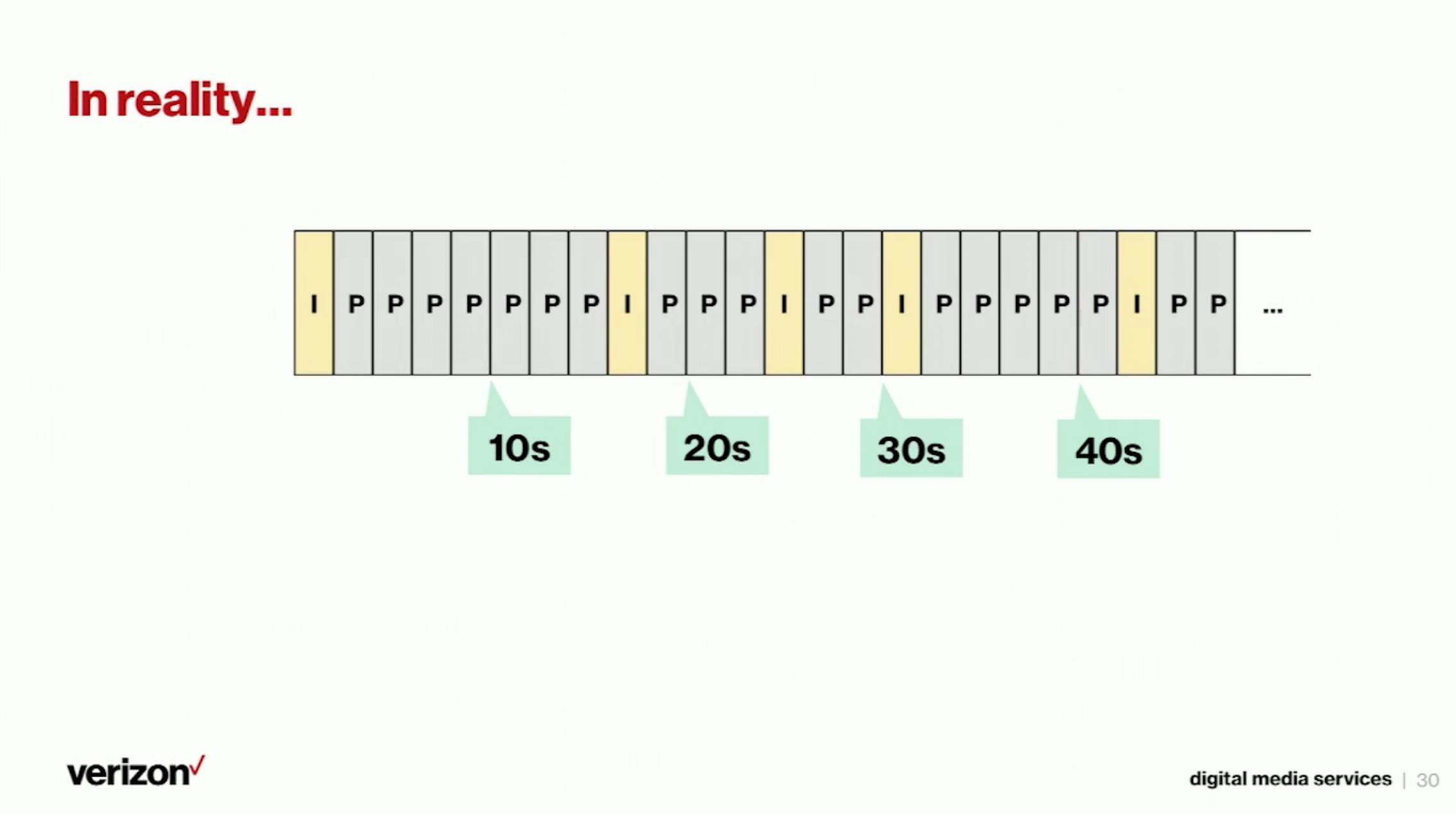

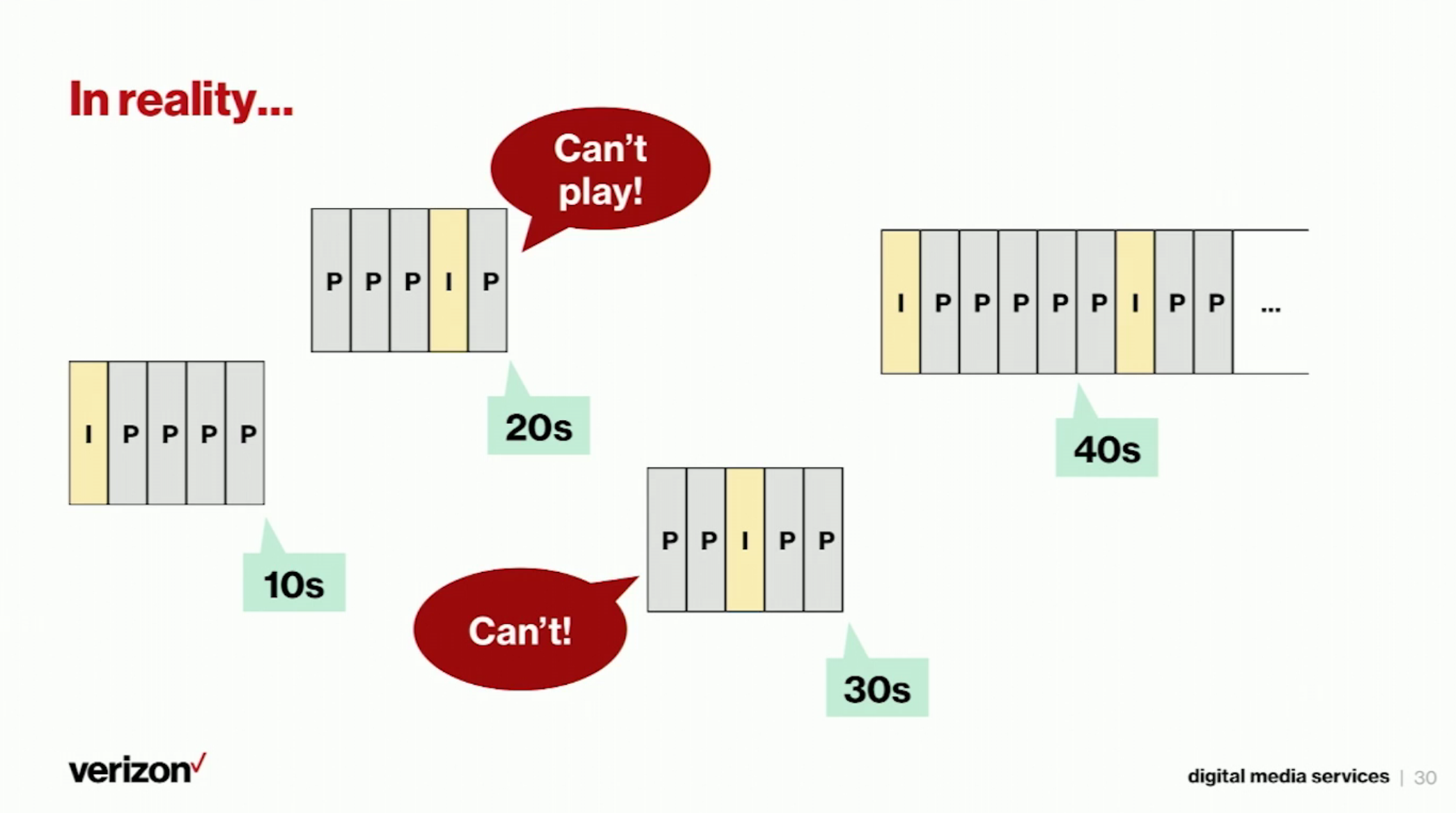

21:30 In Reality

In reality, most of the MP4 files that you are going to work with will look like this. If you segment them the same way, the first segment is okay. You get the second segment, but it doesn’t play because its first frame is not an I-frame. You get the third segment and it doesn’t play either; once again, the first frame is not an I-frame.

21:55 In Reality (cont.)

Then how can we make each segment playable? The segment size has to be adjusted by the location of the I-frame.

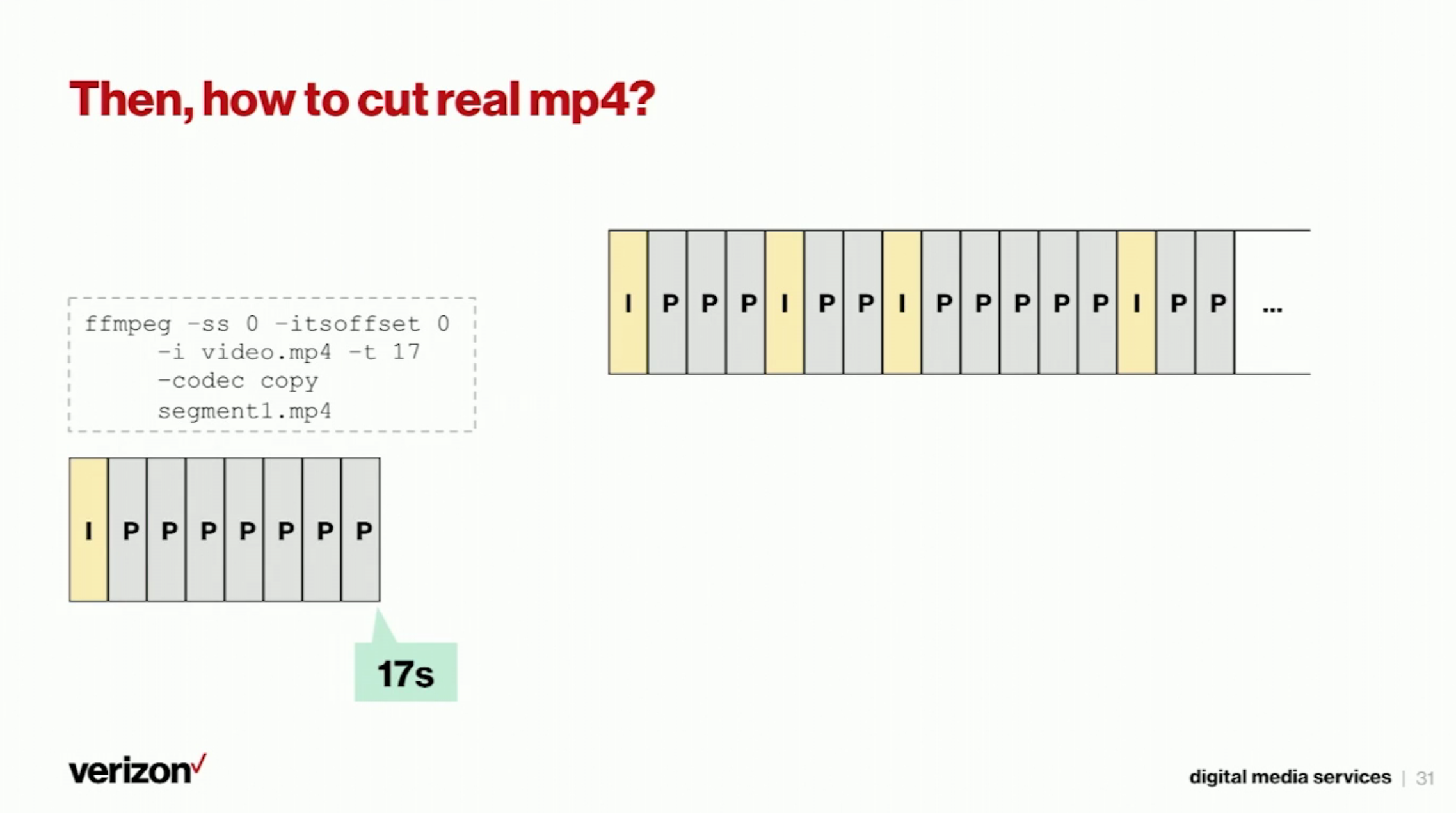

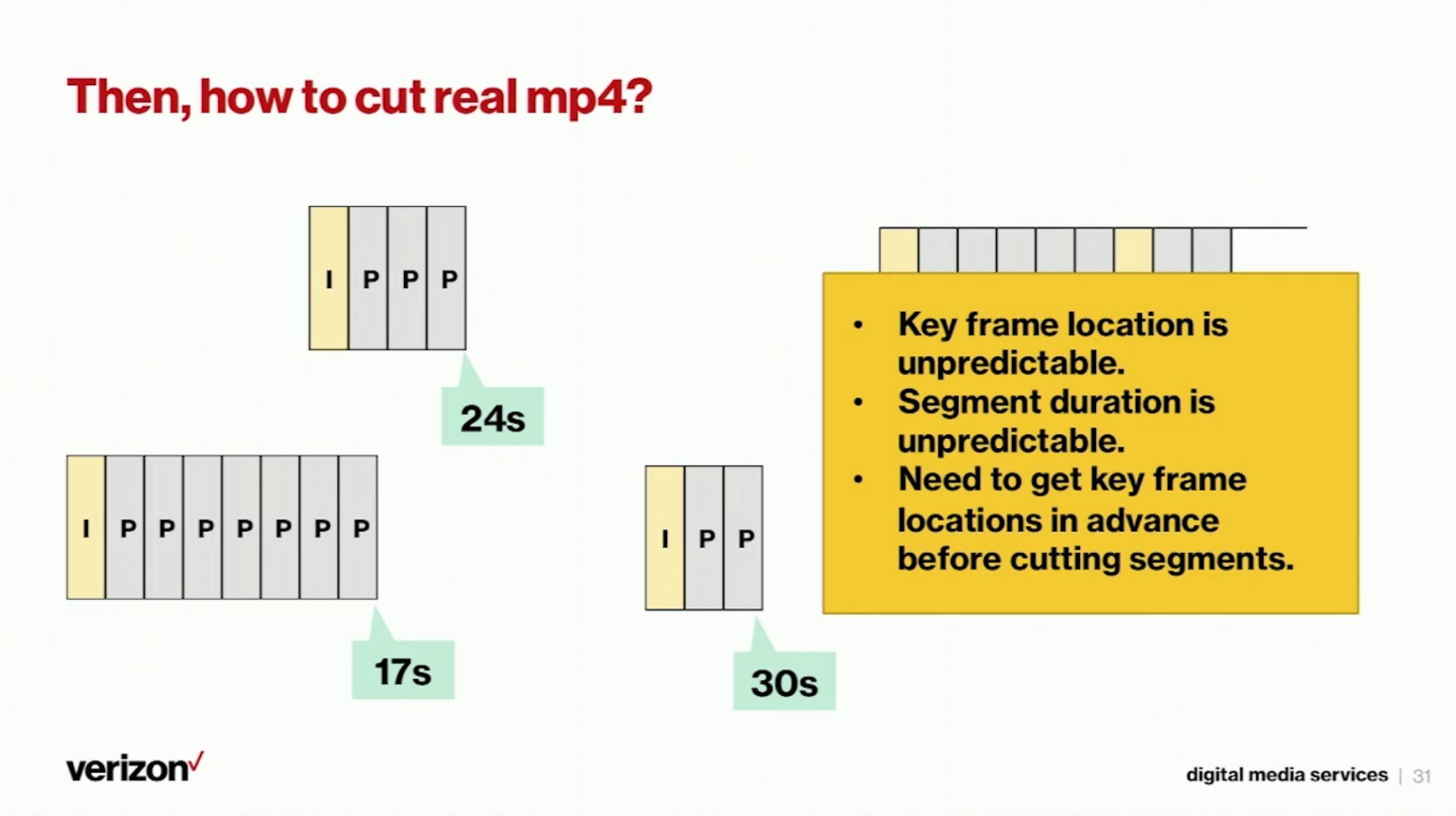

22:11 How To Cut Real MP4?

So the first segment has to start at the first I-frame and end at the next I-frame. Sometimes the next I-frame comes a little earlier than usual. The I-frame locations are unpredictable, and as a result, the segment durations are unpredictable.
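One way to picture this is a function that takes the I-frame times and picks segment boundaries from them. The Python sketch below uses a simple greedy rule that is an assumption of this example, not necessarily the rule used in the talk: a segment is closed at the first I-frame that lies at least `target` seconds after the segment start.

```python
def segment_boundaries(keyframes, total_duration, target=10.0):
    """Return (start, duration) pairs; every start is an I-frame time.

    `keyframes` is a sorted list of I-frame timestamps in seconds.
    """
    if not keyframes:
        return []
    segments = []
    start = keyframes[0]
    for t in keyframes[1:]:
        if t - start >= target:
            # Close the current segment at this I-frame and start a new one.
            segments.append((start, t - start))
            start = t
    # The last segment runs to the end of the file.
    segments.append((start, total_duration - start))
    return segments
```

With I-frames at 0, 4, 11, 13, 22.5, and 30 seconds in a 34-second file, this yields segments of 11, 11.5, and 11.5 seconds, which shows how the durations drift away from the 10-second target.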

22:35 How to Cut Real MP4 (cont.)

The main question here is: how are we going to get the keyframe locations in advance? Without that information, we are not able to generate even a single segment.

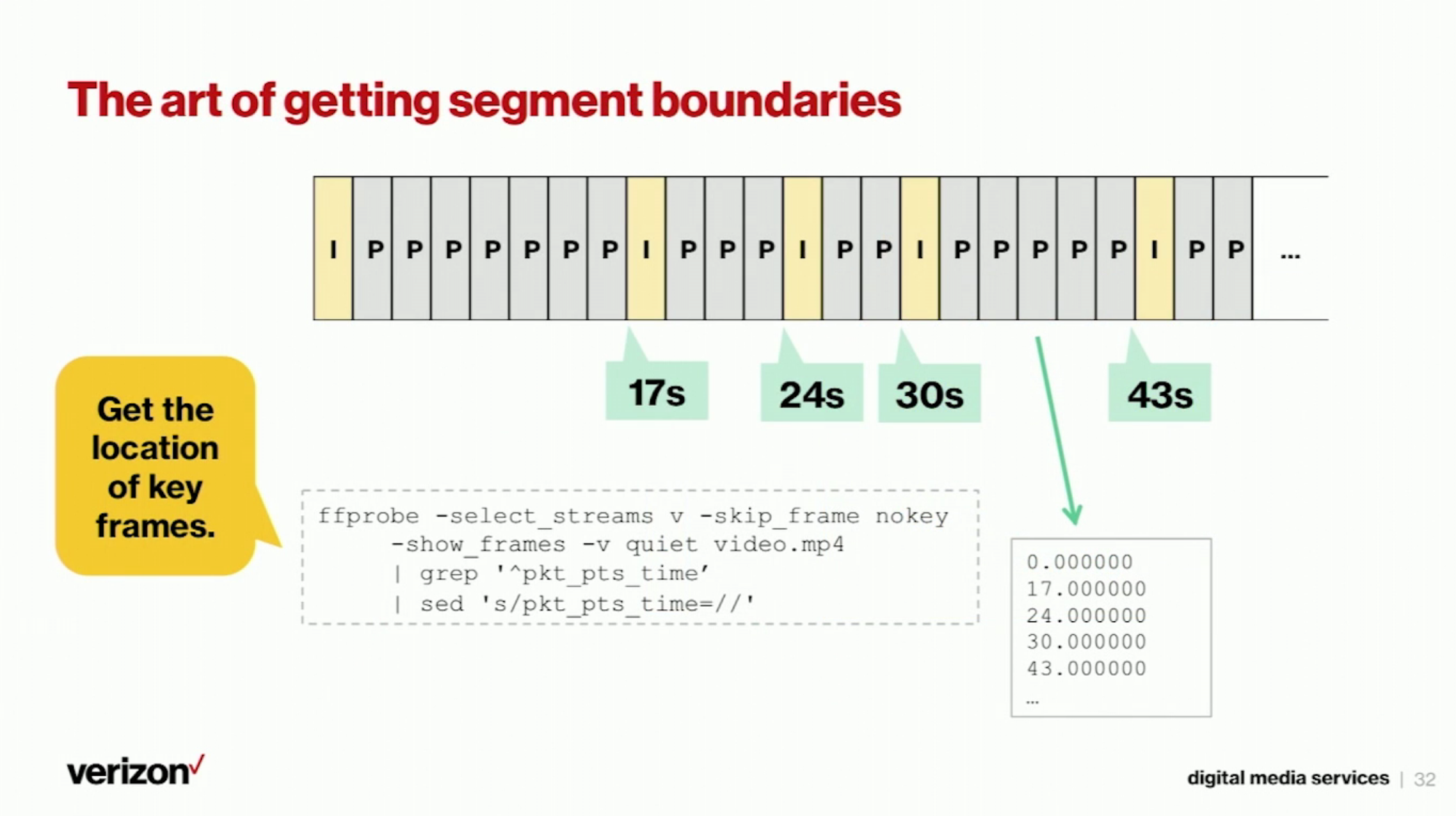

22:48 The Art of Getting Segment Boundaries

We can get that information by running ffprobe. If you run ffprobe with these parameters, it returns the location of each I-frame.
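The ffprobe parameters from the slide are not in the transcript. As one possible approach, the Python sketch below asks ffprobe for the timestamp and flags of every video packet and keeps the packets flagged as keyframes. Reading packets instead of decoded frames is a choice made for this sketch, and both function names are hypothetical.

```python
import subprocess

# List pts_time and flags for each packet of the first video stream, as CSV.
FFPROBE_ARGS = [
    "ffprobe", "-v", "error",
    "-select_streams", "v:0",
    "-show_entries", "packet=pts_time,flags",
    "-of", "csv=p=0",
]


def parse_keyframe_times(csv_text):
    """Extract sorted keyframe timestamps from 'pts_time,flags' lines."""
    times = []
    for line in csv_text.splitlines():
        fields = line.strip().split(",")
        # Keyframe packets carry a flags value that starts with "K".
        if len(fields) < 2 or not fields[1].startswith("K"):
            continue
        try:
            times.append(float(fields[0]))
        except ValueError:
            continue  # skip packets without a usable timestamp, e.g. "N/A"
    return sorted(times)


def probe_keyframes(path):
    """Run ffprobe on `path` and return its keyframe timestamps in seconds."""
    result = subprocess.run(
        FFPROBE_ARGS + [path], check=True, capture_output=True, text=True
    )
    return parse_keyframe_times(result.stdout)
```

Keeping the parsing separate from the subprocess call makes it easy to check the logic against captured ffprobe output without having a media file at hand.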

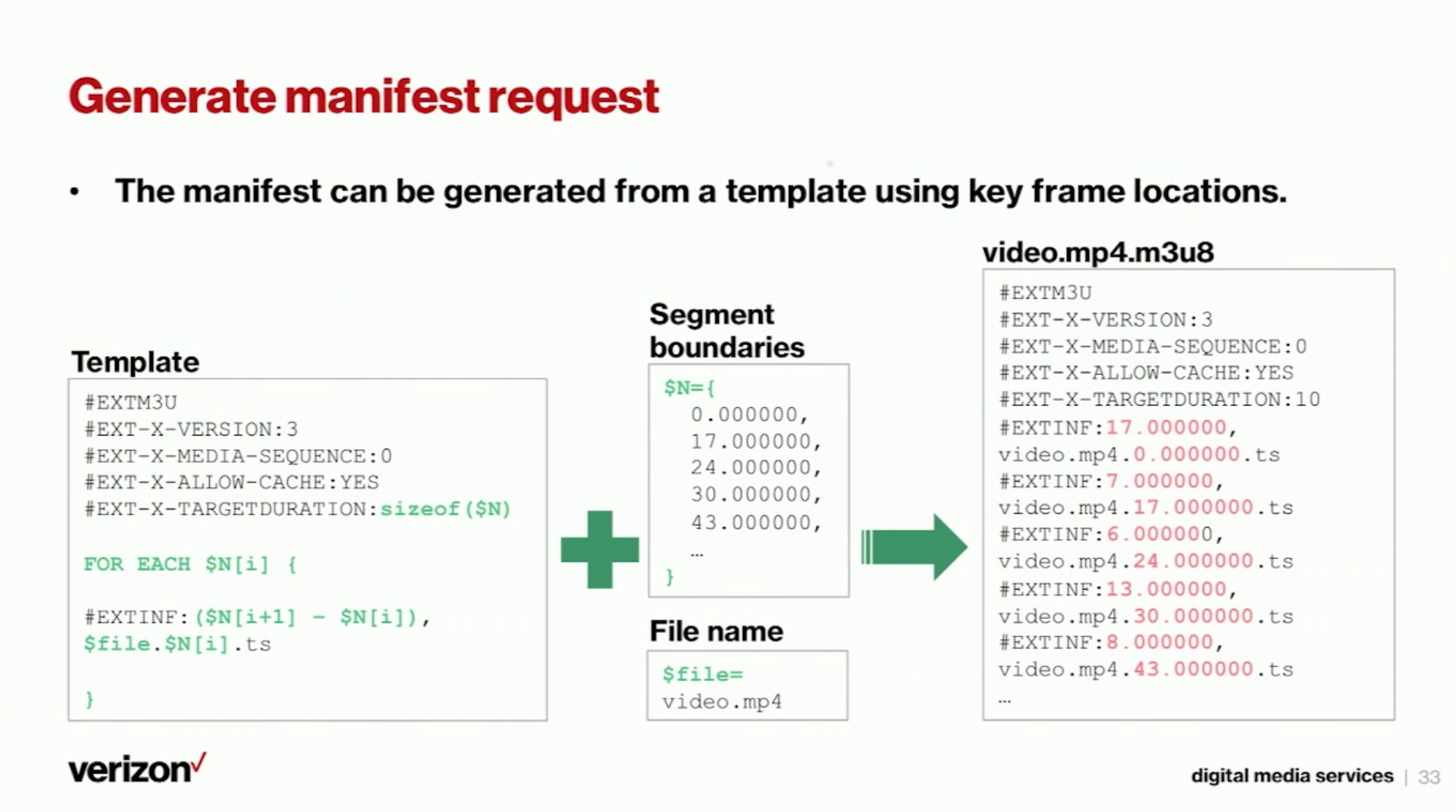

23:05 Generate Manifest Request

Once you have the list of I-frames and you know what the manifest has to look like, you can combine those two pieces of information to construct the manifest file for that particular MP4 file. You apply certain rules and you get an m3u8 file. The m3u8 file contains the list of segments, and each segment name has a suffix that represents the start time of that segment.
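A minimal Python sketch of this manifest step could look as follows. The naming rule `<name>-<start in milliseconds>.ts` is an assumption for illustration (the talk only says that the suffix represents the start time), and `build_media_playlist` is a hypothetical helper.

```python
import math


def build_media_playlist(name, segments):
    """Build a VOD m3u8 from a list of (start_seconds, duration_seconds)."""
    # The target duration must not be smaller than any segment duration.
    target = math.ceil(max(duration for _, duration in segments))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
    ]
    for start, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")
        # The suffix encodes the segment start time in milliseconds.
        lines.append(f"{name}-{int(round(start * 1000))}.ts")
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"
```

Because the start time is part of each segment name, the server can later work out which part of the MP4 a segment request refers to without keeping any state.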

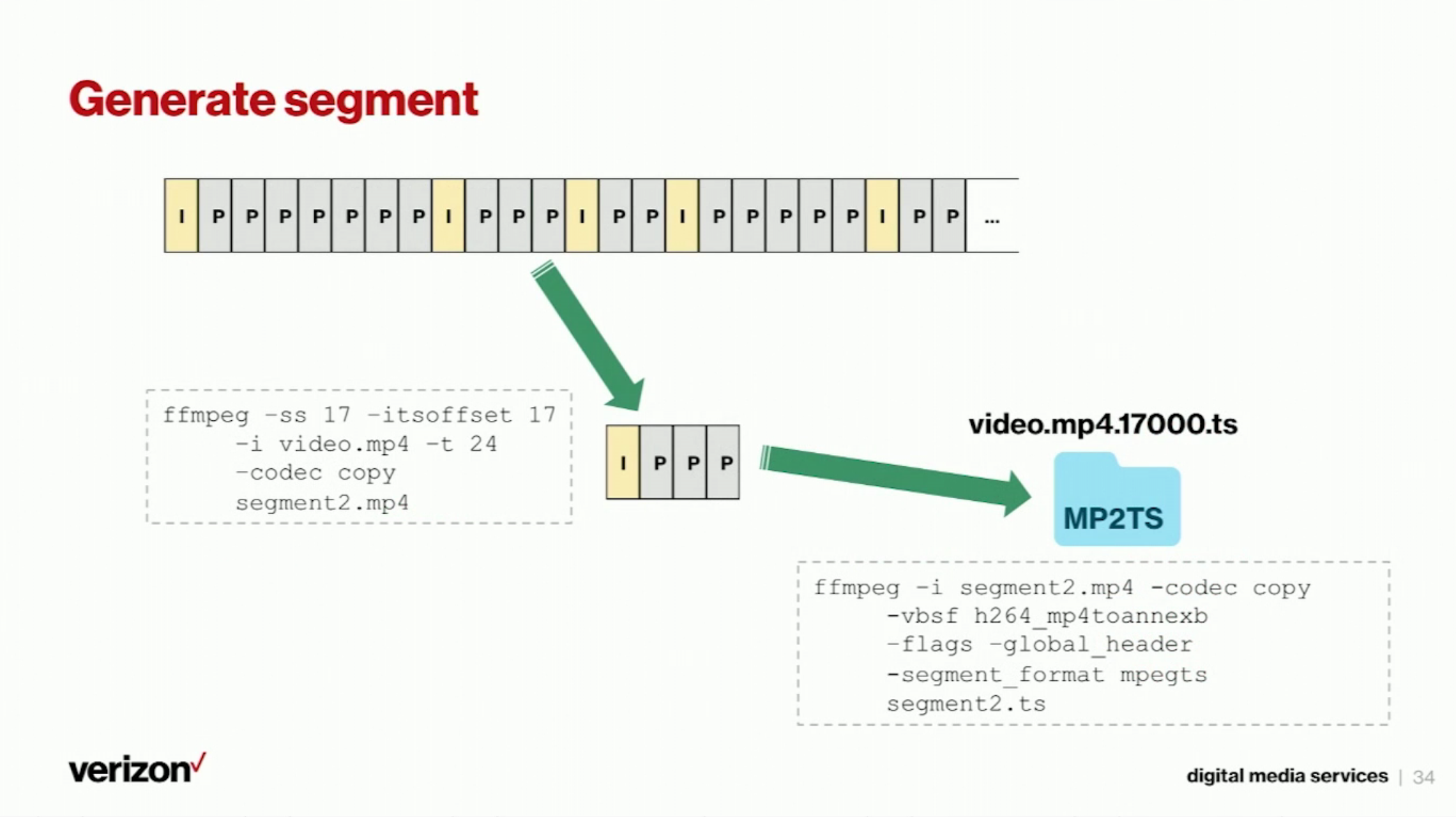

23:40 Generate Segment

When the player requests a segment file, we know its start time from the suffix, so we can run ffmpeg with those parameters to cut the segment and repackage it. Then we return the generated segment to the client.
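The segment step can be sketched in Python like this: parse the start time out of the requested name, look up the matching boundary, and build the ffmpeg command that writes the segment to standard output. The `<name>-<start in milliseconds>.ts` naming, the media root path, and the function name are assumptions, and timestamp continuity between segments is not addressed here.

```python
import re

# Hypothetical naming rule: <name>-<start time in milliseconds>.ts
SEGMENT_RE = re.compile(r"^(?P<name>.+)-(?P<start_ms>\d+)\.ts$")


def segment_command(uri, segments, media_root="/var/media"):
    """Map a segment request to an ffmpeg command.

    `segments` is the list of (start_seconds, duration_seconds) that was
    used to build the manifest for this file.
    """
    match = SEGMENT_RE.match(uri.rsplit("/", 1)[-1])
    if match is None:
        raise ValueError(f"not a segment request: {uri}")
    start = int(match["start_ms"]) / 1000.0

    for seg_start, duration in segments:
        if abs(seg_start - start) < 0.0005:
            break
    else:
        raise LookupError(f"no segment starts at {start} seconds")

    source = f"{media_root}/{match['name']}.mp4"
    return [
        "ffmpeg",
        "-ss", f"{seg_start:.3f}",
        "-t", f"{duration:.3f}",
        "-i", source,
        "-c", "copy",        # repackage without re-encoding
        "-f", "mpegts",
        "pipe:1",            # write the segment to stdout for the response
    ]
```

A request for `/hls/sample-11000.ts` would then be served by cutting `sample.mp4` at 11 seconds for the duration recorded in the boundary list.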

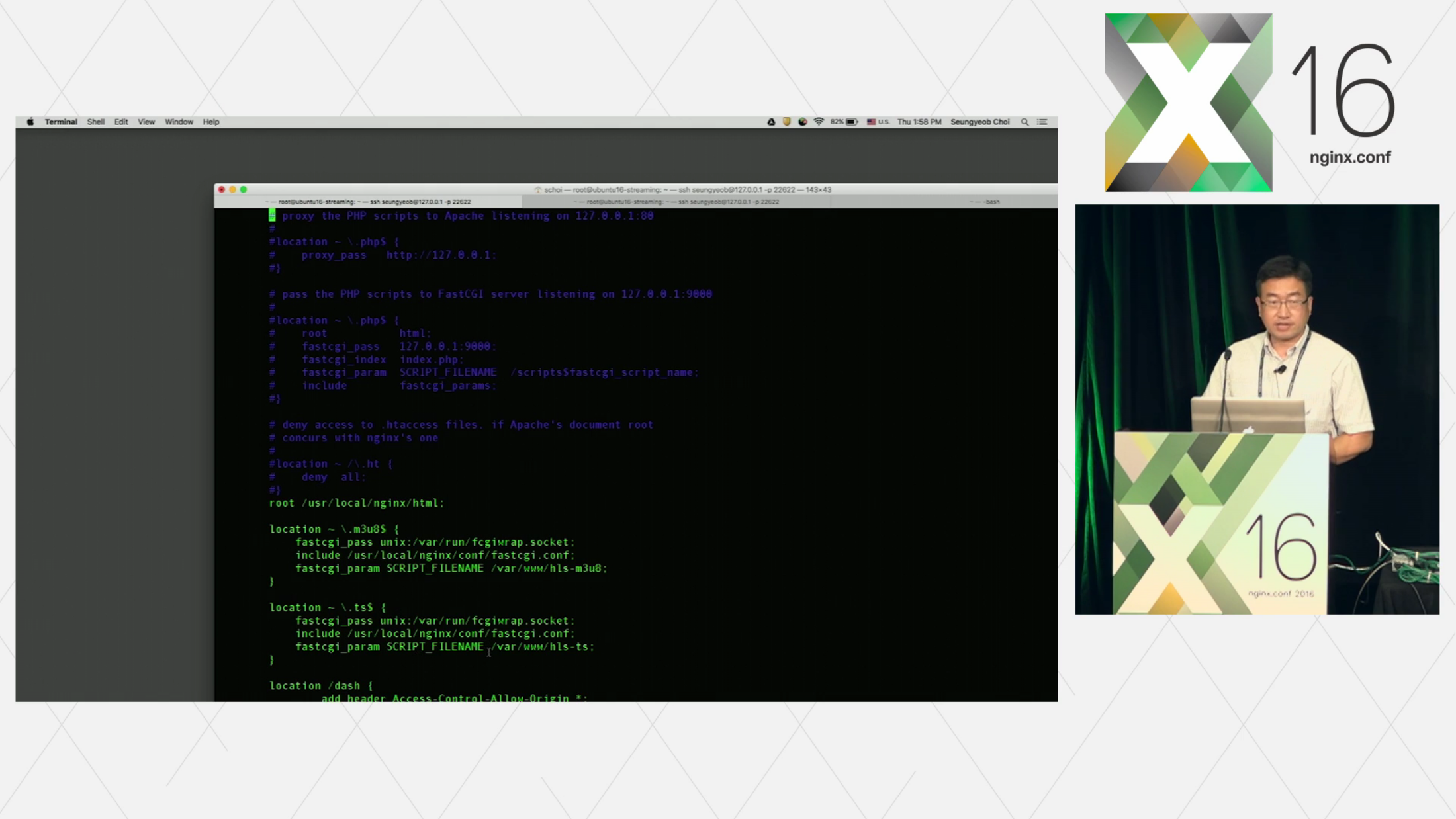

24:08 Streaming Using fastCGI Scripts

Let me show you how it works. So I added these rules so that if we receive requests for m3u8, the server runs this fastCGI script to generate the manifest. If you get a request for ts, we run this fastCGI script to generate the ts file. I implemented that as a fastCGI script just for quick prototyping, but there is probably a better way to do that.

Let’s take a look at the fastCGI scripts for generating manifests. We get the request, then we run ffprobe to generate the list of I-frame locations, and we use that information to construct the manifest file. When we get a request for a segment file, we take the suffix to identify the start time of that segment, run ffmpeg to cut that segment, and repackage it as an MPEG-2 TS file. Then we return the generated segment.

So when we make the request, the server runs that fastCGI script and generates the manifest for that MP4 file. If we actually play that HLS stream using an HLS player, we can see that we receive the request for the m3u8 file, receive the first ts file, the second ts file, and third ts file. They were all generated by the fastCGI script and ffmpeg, then they were returned.

28:56 DASH VOD By NGINX and gpac

MPEG-DASH can be generated using a similar method.

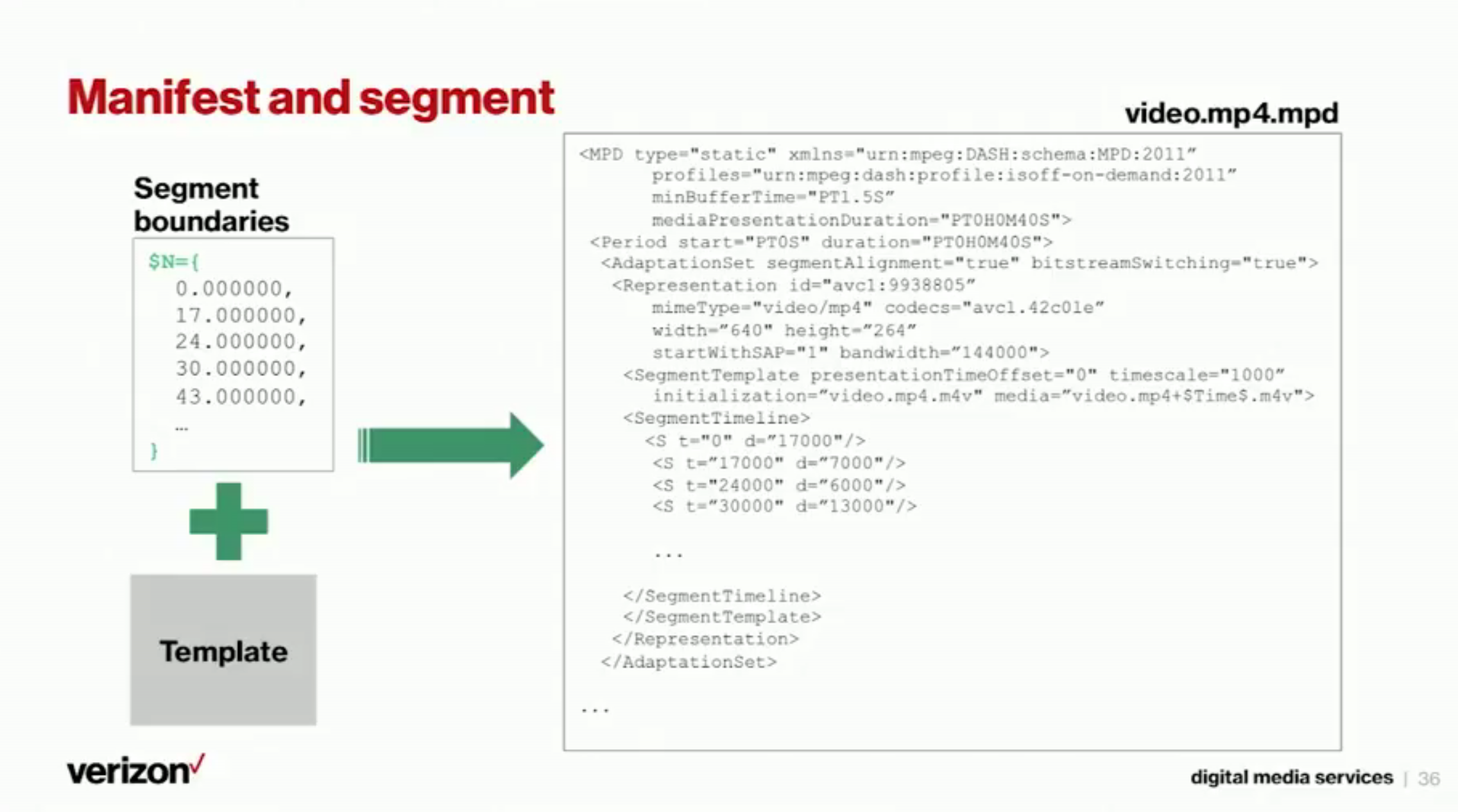

29:02 Manifest and Segment

You get the locations of I-frames, then you have a DASH manifest template and you can combine those pieces of information together to construct a DASH manifest file.
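The DASH manifest template itself is not shown in this transcript. As a partial sketch, the Python code below builds only the `SegmentTimeline` element that such a template could contain, with one `S` entry per segment. The 1000-ticks-per-second timescale and the function name are assumptions.

```python
import xml.etree.ElementTree as ET


def segment_timeline(segments, timescale=1000):
    """Return a SegmentTimeline element as XML text.

    `segments` is a list of (start_seconds, duration_seconds); the `t` and
    `d` attributes are expressed in timescale units.
    """
    timeline = ET.Element("SegmentTimeline")
    for start, duration in segments:
        ET.SubElement(
            timeline,
            "S",
            {
                "t": str(round(start * timescale)),
                "d": str(round(duration * timescale)),
            },
        )
    return ET.tostring(timeline, encoding="unicode")
```

The variable segment durations that come from the I-frame locations are exactly what the per-segment `d` values express.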

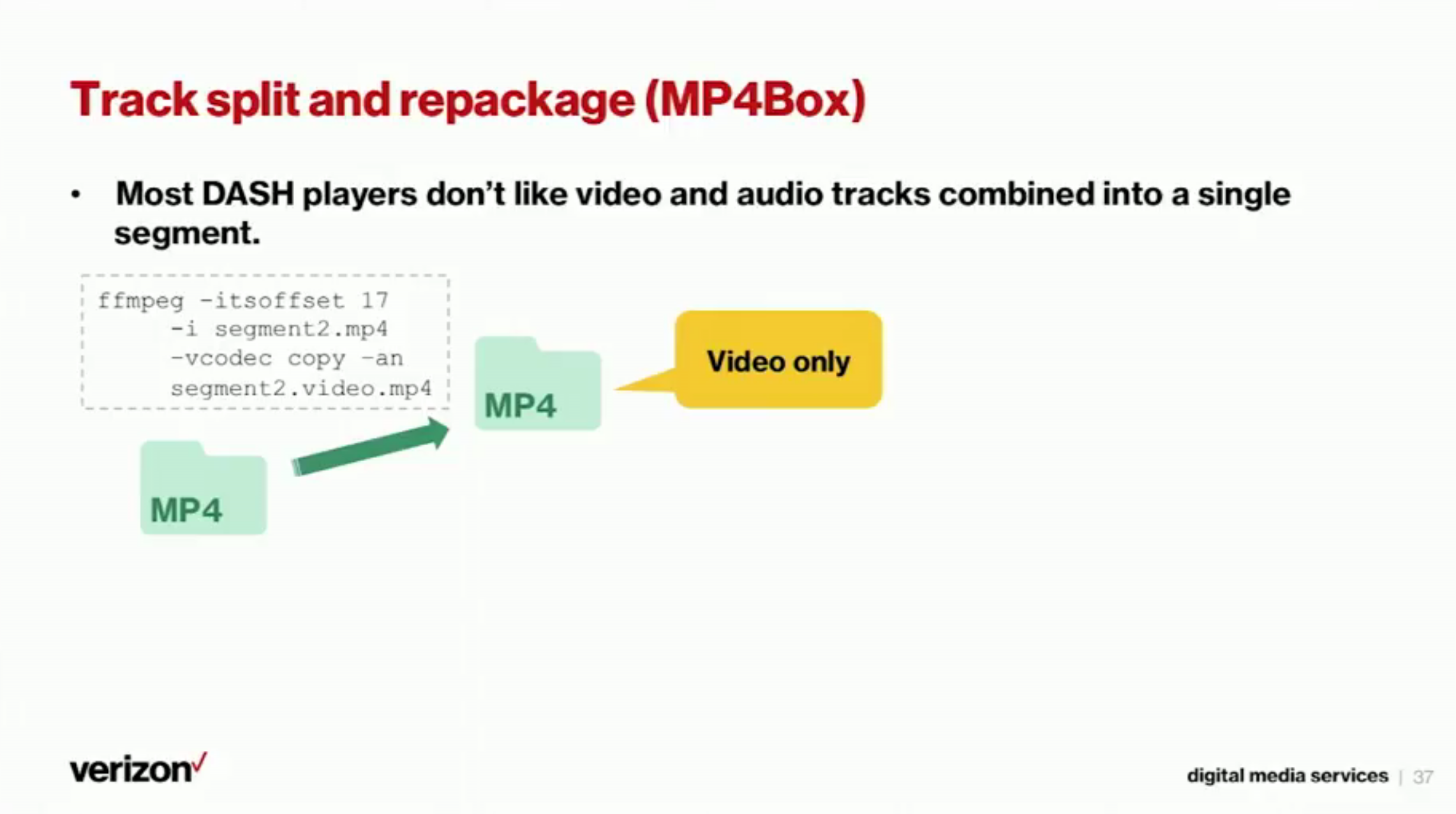

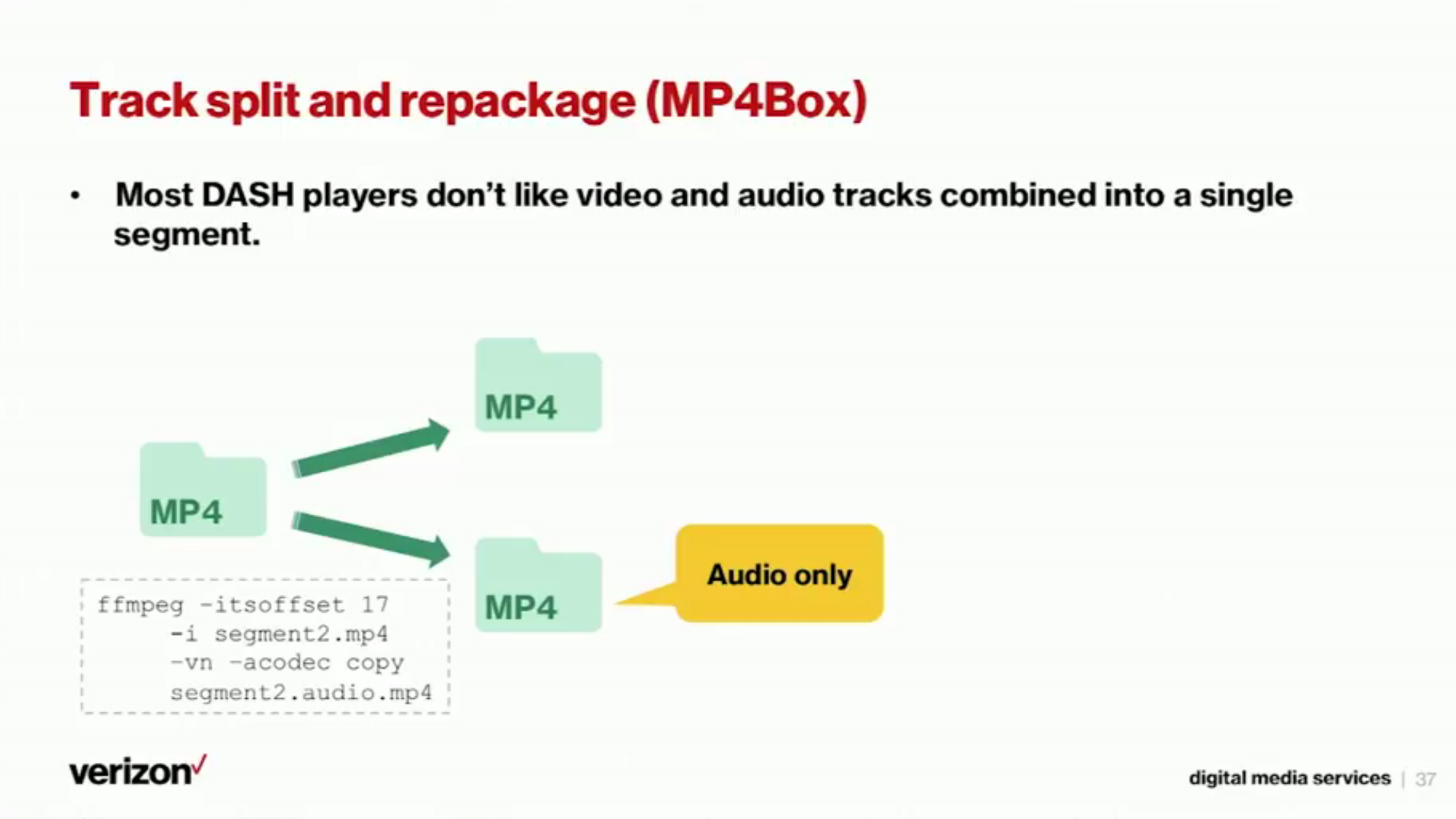

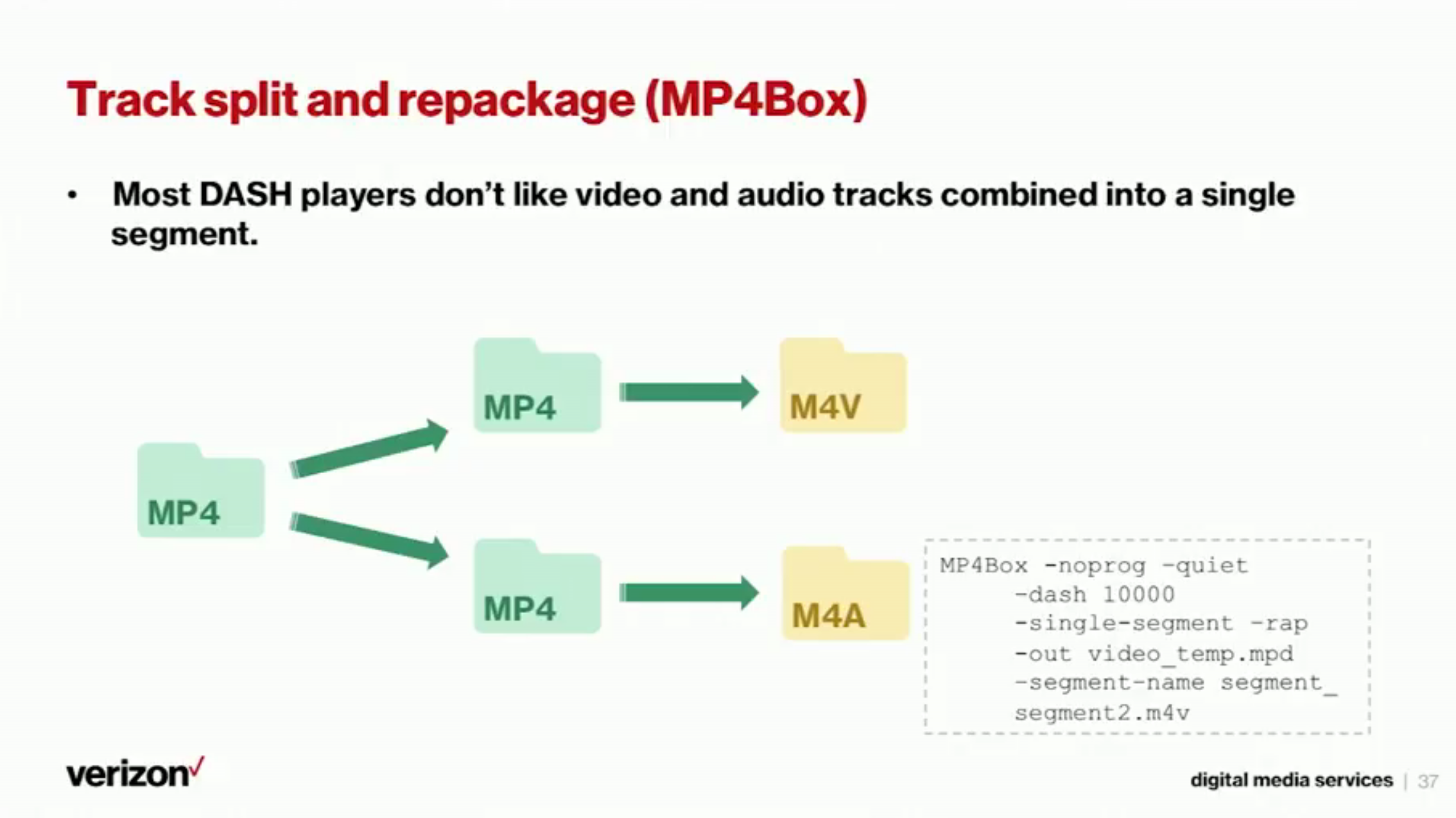

29:16 Track Split and Repackage (MP4Box)

You can also generate DASH segment files. One difference is that, in the case of DASH, players normally don’t like segment files that have video and audio tracks in the same segment.

29:37 Track Split and Repackage (MP4Box) (cont.)

So to make those players happy, we generate segments twice. The first time we get the video only segment by using “-an”, which means that there is no audio.

29:48 Track Split and Repackage (MP4Box)

Then we get the audio only segment by putting “-vn”, which means that there is no video.

29:54 Track Split and Repackage (MP4Box)

Then we repackage those two segments using MP4Box, and we generate video and audio segments for MPEG-DASH and we return those segments to the player.
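The three steps can be summarised as a list of commands. This Python sketch only builds them; the working directory, the intermediate file names, and the function name are assumptions, and how the resulting segment files are picked up and returned is left out.

```python
def dash_segment_commands(source, start, duration, workdir="/tmp"):
    """Build the commands for one DASH segment: video cut, audio cut, repackage."""
    window = [
        "-ss", f"{start:.3f}",
        "-t", f"{duration:.3f}",
        "-i", source,
        "-c", "copy",
    ]
    video = f"{workdir}/video.mp4"
    audio = f"{workdir}/audio.mp4"
    segment_ms = int(round(duration * 1000))
    return [
        ["ffmpeg", "-y", *window, "-an", video],   # -an: drop audio, keep video
        ["ffmpeg", "-y", *window, "-vn", audio],   # -vn: drop video, keep audio
        ["MP4Box", "-dash", str(segment_ms), "-rap",
         "-out", f"{workdir}/segment.mpd", video, audio],
    ]
```

Each inner list could be passed to `subprocess.run` in order, with the second and third steps depending on the files written before them.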

30:13 Multi-Screen: HTTP Progressive Download and HTML5

Now we know how to do RTMP Live and VOD and HTTP Live and VOD. We can have a streaming server using NGINX combined with ffmpeg, MP4Box, the RTMP module, and some fastCGI scripts to link incoming requests with ffmpeg and MP4Box. Then we are running a multipurpose streaming server using NGINX.

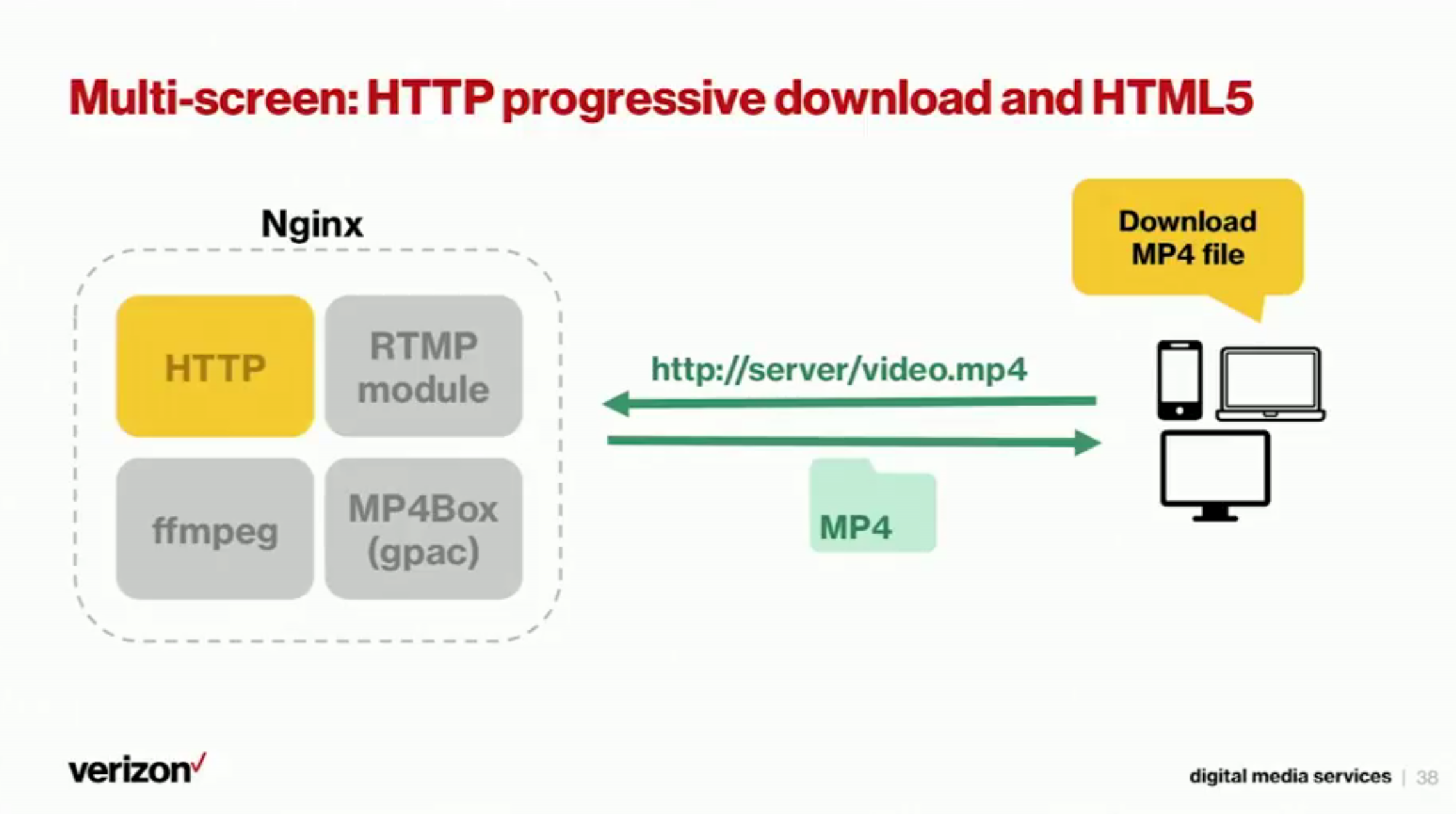

30:46 Multi-Screen: HTTP Progressive Download and HTML5 (cont.)

When we get a request for an MP4 file over HTTP, we can just serve the file over HTTP. That is the case when the client is capable of HTML5 or is trying to play the video using progressive download.

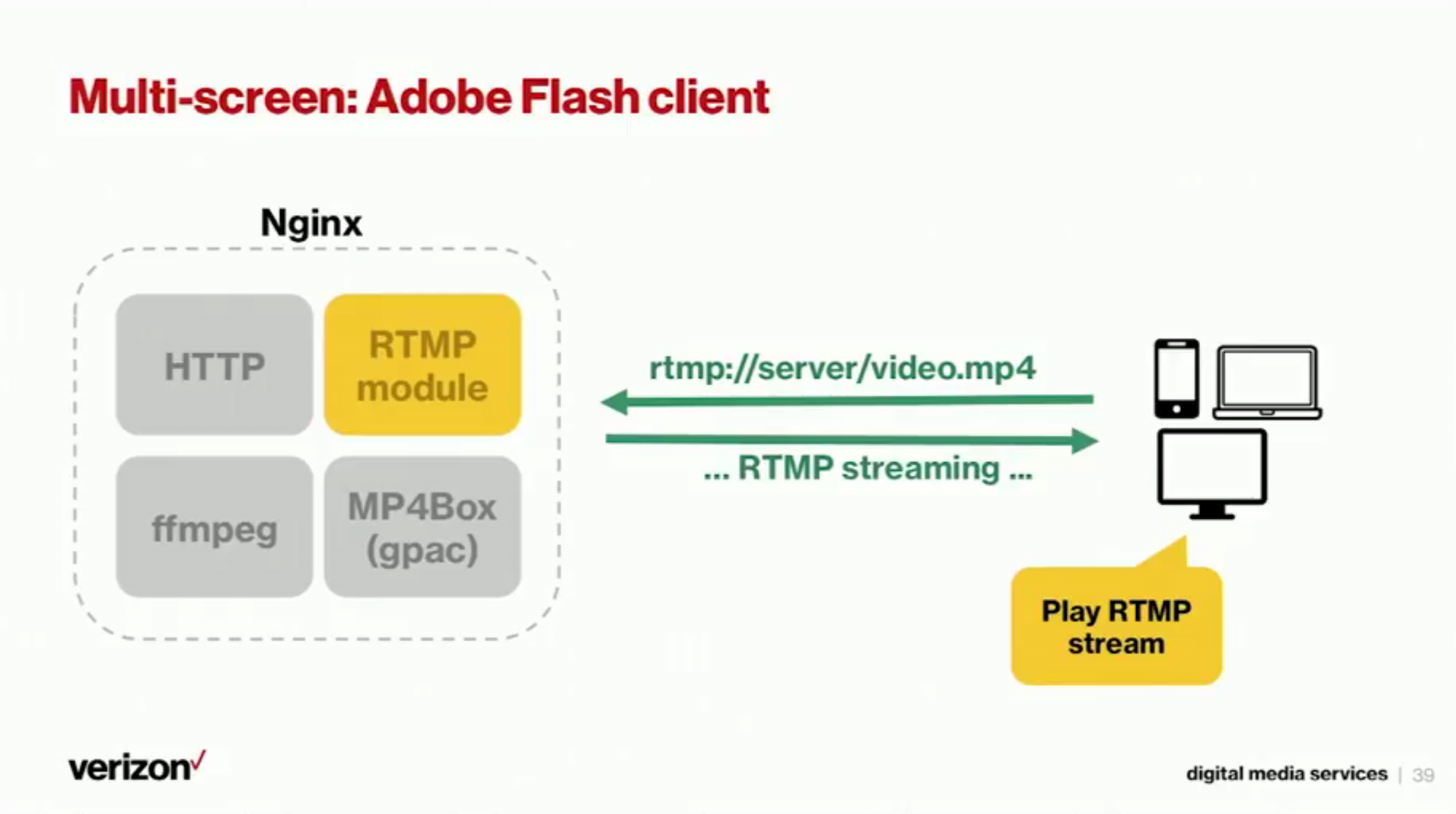

31:05 Multi-Screen: Adobe Flash Client

When another client that has Adobe Flash Player makes a request over RTMP, the NGINX RTMP module can return the content over the RTMP protocol.

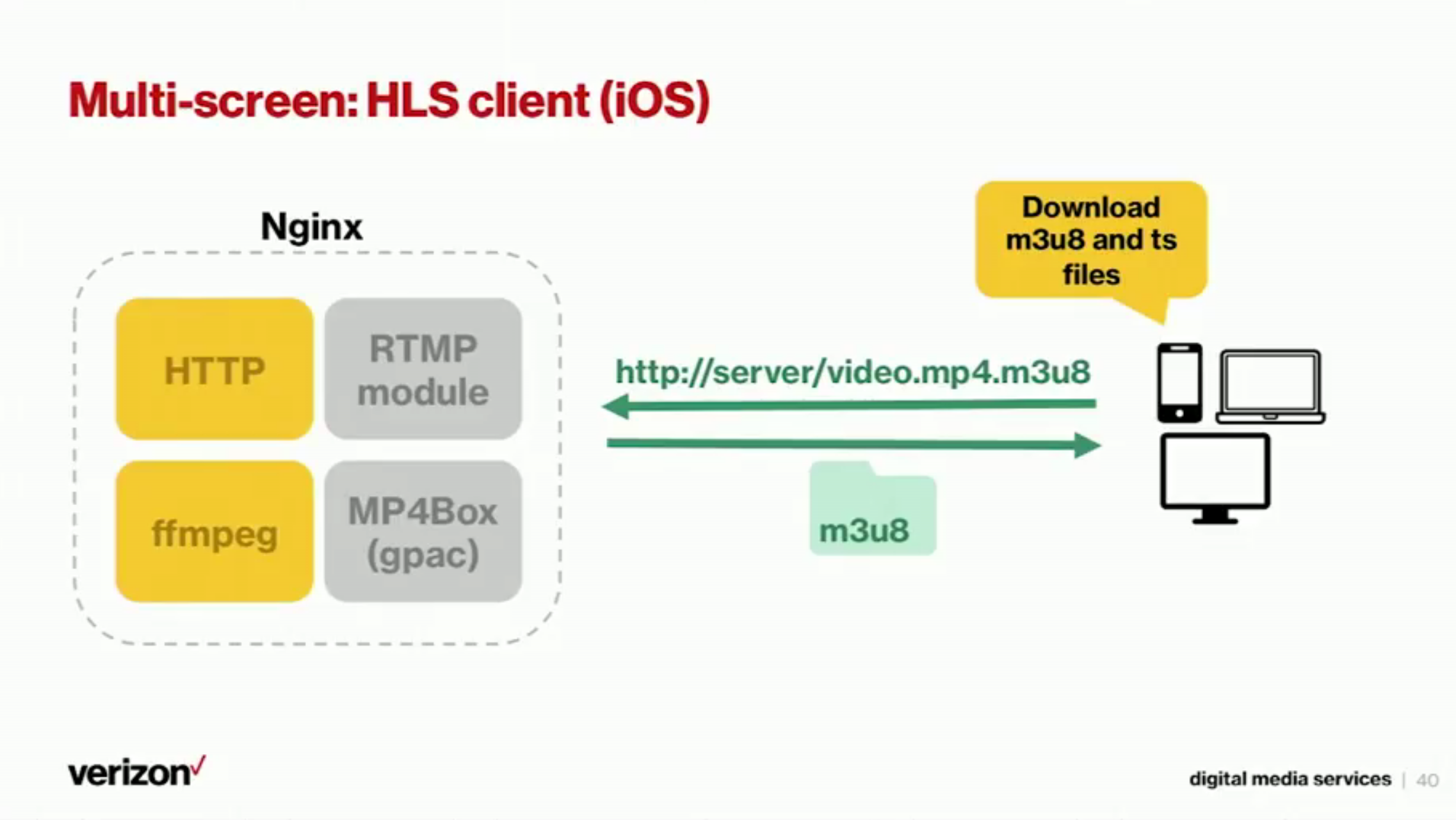

31:22 Multi-Screen: HLS Client (iOS)

If an Apple device makes a request for the content over HLS, then we can run ffmpeg to generate the manifest and segments and return the m3u8 manifest and ts segments.

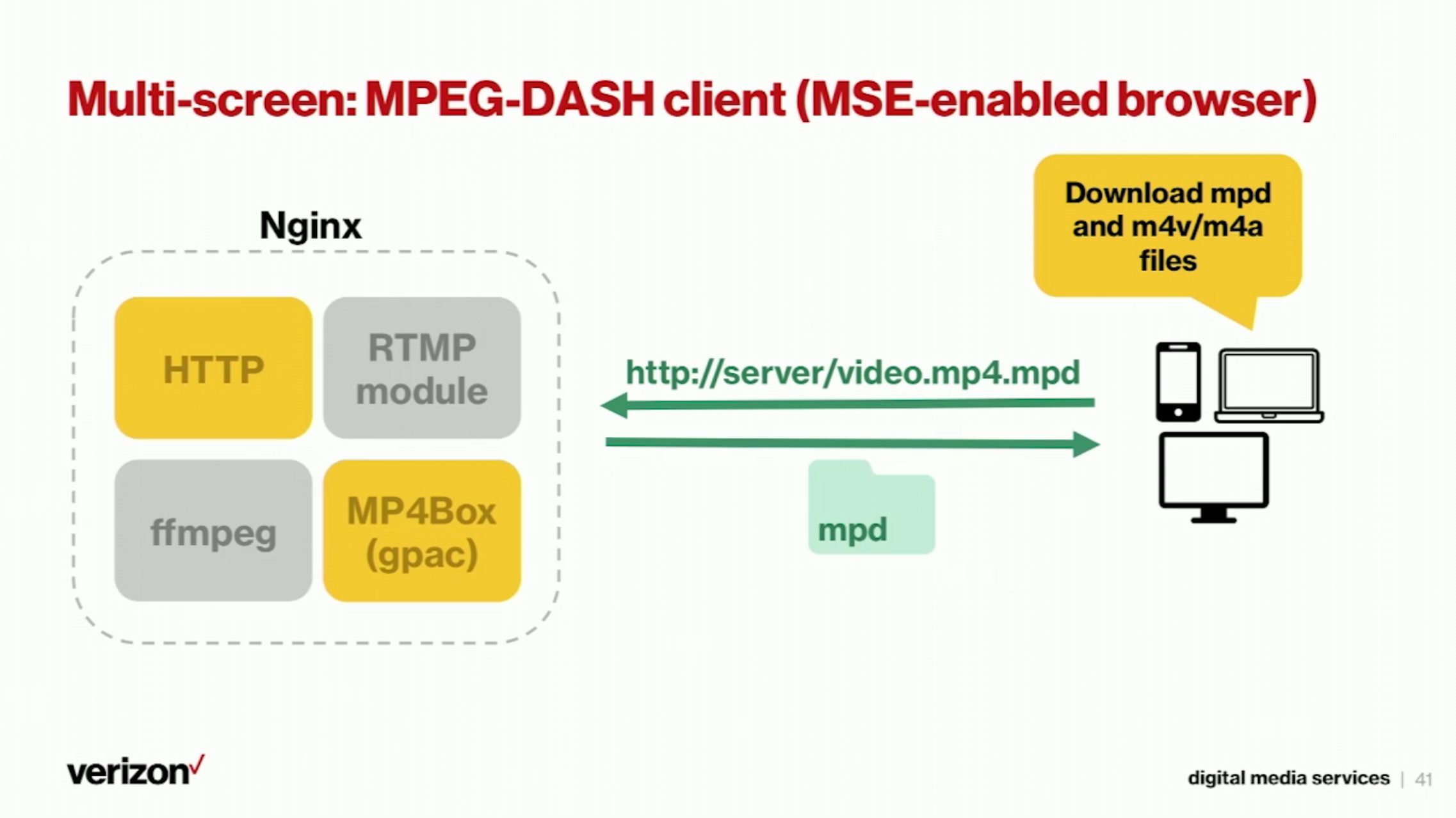

31:46 Multi-Screen: MPEG-DASH Client (MSE-Enabled Browser)

When the client makes a request for DASH content, we can run MP4Box to generate the DASH files and return the manifest and the m4a/m4v segment files to the client. In this way, we can offer different streaming protocols that will probably cover most clients.

32:22 Thank You

Any questions?

Q:

It can be any HTTP server, but NGINX has a very good third party module called NGINX RTMP module that already does RTMP Live and VOD and HTTP Live very well. So the only thing that is missing is HTTP VOD. So using this method, HTTP VOD can be implemented on any HTTP server, not only NGINX. But the reason NGINX is great for streaming is it already has the open source module called NGINX RTMP module that does its job very nicely.

Q: Which protocol do you think will be a replacement for RTMP?

Many people believe that RTMP is dying, and we have seen streaming traffic moving away from RTMP. The destination is HTTP-based protocols like HLS and DASH. It used to be HDS as well, but HDS is losing its foothold now. So we expect most streaming traffic to move towards HLS and MPEG-DASH, but some people still want RTMP. The biggest reason is that there is no other protocol that allows such a short end-to-end latency. If you stream over HLS or MPEG-DASH, it is really hard to get the end-to-end latency below 10 seconds, but with RTMP streaming it is not that difficult to have 2 to 3 seconds of delay. Some people really need very low latency. For example, imagine that your business streams gambling content and you allow end users to compete based on what they are watching. If there is a one-minute delay from the real event, you would be in trouble. Those kinds of applications really need RTMP, and there is no other streaming protocol that allows such low latency. So we believe there will always be a certain niche that still needs RTMP.

Q:

Yes, we expect that everything should move to MPEG-DASH or MPEG-DASH plus HLS. People really don’t like RTMP, but certain kinds of applications still need RTMP. There is no good alternative for situations that require low latency.

Q: Can you talk a little about adaptive bitrate?

I didn’t include adaptive bitrate here. Once you can offer a single bitrate, adaptive bitrate is not difficult. It just requires multiple files, each one representing a different bitrate. You generate the stream-level manifest for each one, and you generate segments from each file. The one thing that is then missing is the set-level manifest, which lists the stream-level manifests for the different bitrates. You can automate that using the existing MP4 files, and it is not difficult to implement at all. So the most important part is the generation of the stream-level manifests and segments rather than the set-level manifest.
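For HLS, the set-level manifest corresponds to a master playlist. A minimal Python sketch that builds one from a list of renditions might look like this; the bitrates, resolutions, and file names in the usage note are invented for the example, and `build_master_playlist` is a hypothetical helper.

```python
def build_master_playlist(variants):
    """Build an HLS master playlist.

    `variants` is a list of (bandwidth_bps, (width, height), uri), where
    each uri points to the stream-level manifest of one bitrate.
    """
    lines = ["#EXTM3U"]
    # List the renditions from the lowest bitrate to the highest.
    for bandwidth, (width, height), uri in sorted(variants):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},RESOLUTION={width}x{height}"
        )
        lines.append(uri)
    return "\n".join(lines) + "\n"
```

For example, passing a 2,000,000 bps 1280x720 rendition and an 800,000 bps 640x360 rendition produces a playlist with two `#EXT-X-STREAM-INF` entries, each followed by the URI of its stream-level manifest.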

Once it is done, bitrate switching is actually taken care of by the player and not the server. What the server has to do is make sure that the set level manifest is generated correctly. Thank you.