Generative AI’s Impact on Application Performance

Studies abound on the impatience of consumers. They’ll dump an app, delete an app, and complain loudly on social media if an app performs poorly. And to many, “poorly” means “responds in more than a couple of seconds.”

Enter generative AI, which, based on experience and benchmarks, generally takes far longer than a couple of seconds to respond. But, as in our text conversations with friends and family, while a chatbot is “thinking,” we are thoughtfully presented with an animated “…” to indicate that a response is forthcoming.

For some reason, the animation triggers an almost Pavlovian reaction that makes us willing to wait. Perhaps the reason lies in the psychology of anthropomorphism, which tends to make us look more kindly upon non-humans imbued with a human-like personality. Because we perceive the AI as at least human-like, we grant it the same grace we would grant, well, a human being.

Whatever the reason behind our willingness to wait for today’s AI user experience, it raises the question of how far that grace will extend, and for how long. As more applications are integrated with, augmented by, and imbued with AI capabilities, answering questions about acceptable performance becomes increasingly important.

Just how much latency is acceptable for an AI user experience? Does it matter where that latency is introduced, or is it only acceptable when we know there’s generative AI involved?

This is an important area to examine because one of the taboos of application security is introducing latency into the process. Even though inspecting and evaluating content against known threats (SQLi, malicious code, prompt injection) inevitably adds latency, users of application security services are quick to shut down any solution that degrades performance.

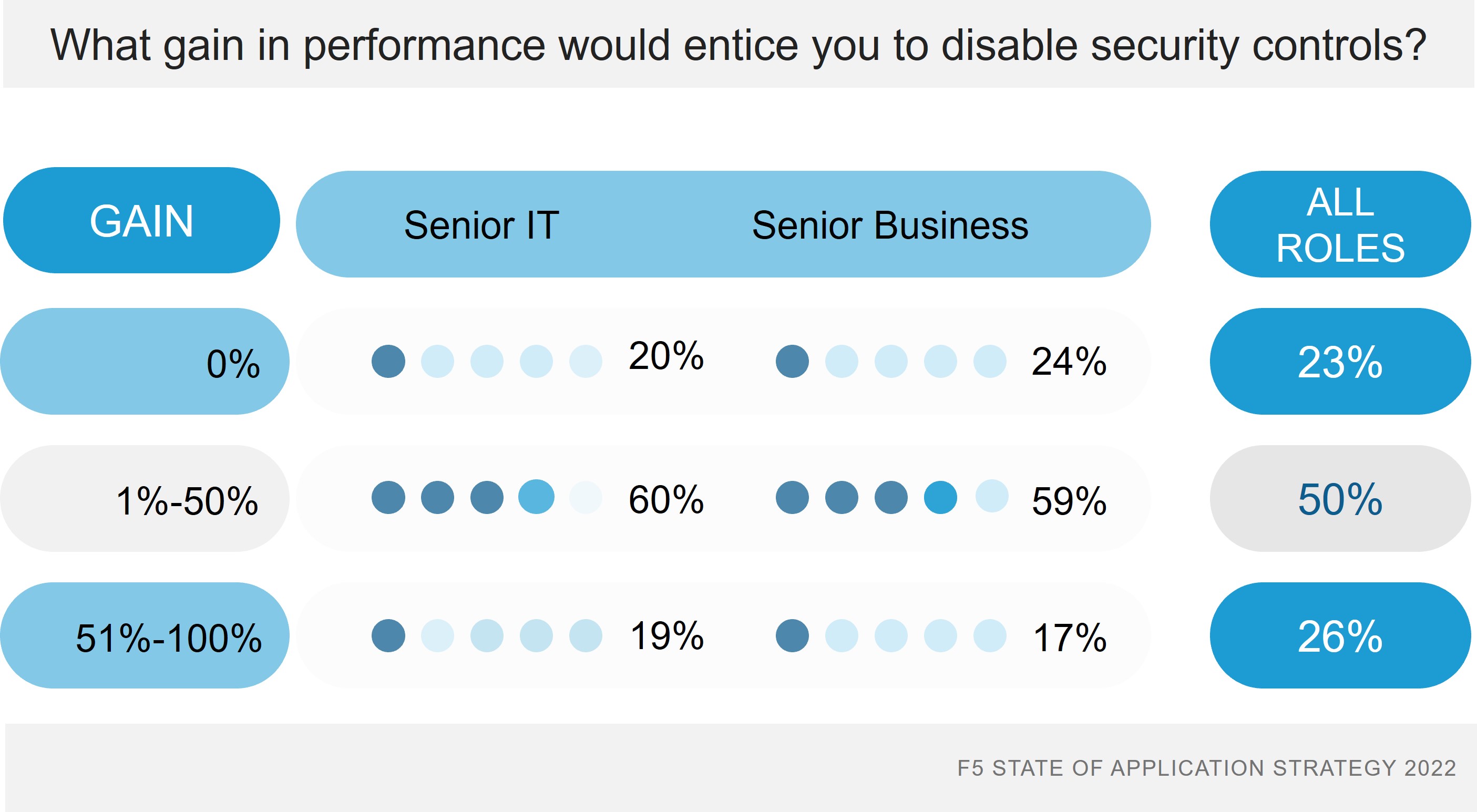

I give you Exhibit A: responses to a question on this topic from our 2022 State of Application Strategy survey, in which roughly 60% of both IT and business leaders said they would turn off security controls in exchange for a performance gain of between 1% and 50%.

Clearly, performance matters, and latency is viewed as a Very Bad Thing™. So the question becomes: just how much latency is acceptable for the AI user experience? Do the old rules of “response must take less than X seconds” still apply? Or is AI pushing that limit further out for all apps, or only for those that are obviously AI-powered?

And if our patience is only an initial reaction, partially due to the novelty of generative AI, what do we do when the novelty wears off?

If, as is currently the trend, inferencing becomes faster, perhaps the question will be moot. But if it does not, will the components and services that deliver, secure, and support AI need to be even faster to compensate for slow inferencing?

This is how fast the industry is moving. We have questions that generate more questions, and before we have answers, new questions arise. The backlog of unanswered questions looks like the ticket queue at an enterprise where someone unplugged a core switch and then all of IT left for the day.

We know that app delivery and security are going to change because of AI, both in the needs of those who want to use AI to augment customer and corporate operations and in the work of those who build solutions for them. The obvious solutions, such as AI gateways, data security, and defenses against traditional attacks like DDoS, are easy to identify, and we are already working on them. But understanding the long-term impact is a much more difficult task, especially when it comes to performance.

The other reality is that hardware will only get us so far before we run into physical constraints. After that, it will be up to the rest of the industry to figure out how to improve the performance of what will certainly become a critical component of every business.