7 Tips for Improving HTTP/2 Performance

For more about HTTP/2, view the recording of our popular webinar, What’s New in HTTP/2?

The venerable HyperText Transfer Protocol, or HTTP, standard has now been updated. The HTTP/2 standard was approved in May 2015 and is now being implemented in browsers and web servers (including NGINX Plus and NGINX Open Source). HTTP/2 is currently supported by nearly two‑thirds of all web browsers in use, and the proportion grows by several percentage points per month.

HTTP/2 is built on Google’s SPDY protocol, and SPDY is still an option until support for it in Google’s Chrome browser ends in early 2016. NGINX was a pioneering supporter of SPDY and is now playing the same role with HTTP/2. Our comprehensive white paper, HTTP/2 for Web Application Developers (PDF), describes HTTP/2, shows how it builds on SPDY, and explains how to implement it.

The key features of HTTP/2 are largely the same as those of SPDY:

- HTTP/2 is a binary, rather than text, protocol, making it more compact and efficient

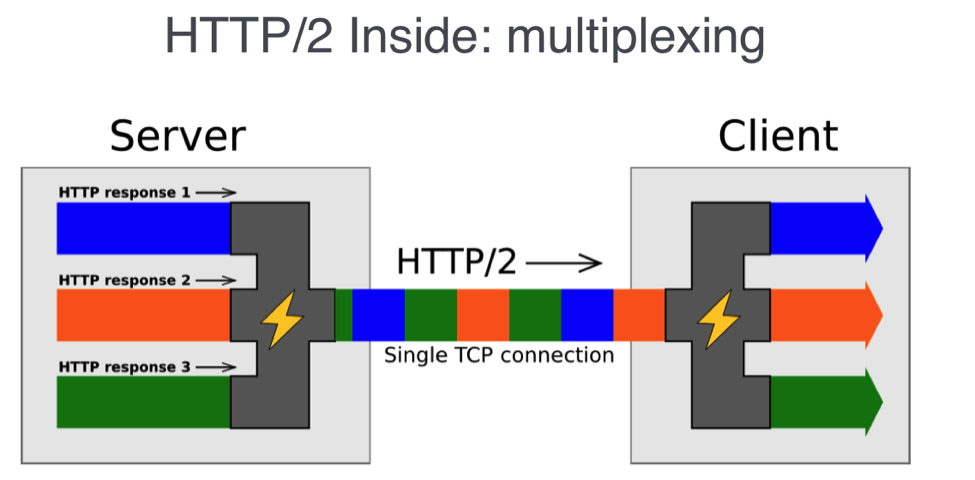

- It uses a single, multiplexed connection per domain, rather than multiple connections carrying one file each

- Headers are compressed with the purpose‑built HPACK protocol (rather than gzip, as in SPDY)

- HTTP/2 has a complex prioritization scheme to help browsers request the most‑needed files first, which is fully supported in NGINX (SPDY had a simpler scheme)

Now you need to decide whether to move forward with HTTP/2 – and part of that is knowing how to get the most out of it. This blog post will guide you through the performance‑related aspects of the decision‑making and implementation process. Check out these 7 tips for HTTP/2 performance:

- Decide if You Need HTTP/2 Today

- Terminate HTTP/2 and TLS

- Consider Starting with SPDY

- Identify Your Use of HTTP/1.x Optimizations

- Deploy HTTP/2 or SPDY

- Revise HTTP/1.x Optimizations

- Implement Smart Sharding

Note: Neither SPDY nor HTTP/2 requires TLS, strictly speaking, but both are mainly beneficial when used with SSL/TLS, and browsers only support SPDY or HTTP/2 when SSL/TLS is in use.

Tip 1 – Decide if You Need HTTP/2 Today

Implementing HTTP/2 is easy, as we discuss in our white paper, HTTP/2 for Web Application Developers (PDF). However, HTTP/2 is not a magic bullet; it’s useful for some web applications, less so for others.

Using HTTP/2 is likely to improve website performance if you’re already using SSL/TLS (referred to as TLS from here on). But if you’re not, you’ll need to add TLS support before you can use HTTP/2. In that case, expect the performance penalty from using TLS to be roughly offset by the performance benefit of using HTTP/2, but test whether this is really true for your site before making an implementation decision.

Here are five key potential benefits of HTTP/2:

- Uses a single connection per server – HTTP/2 uses one connection per server, instead of one connection per file request. This means much less need for time‑consuming connection setup, which is especially beneficial with TLS, because TLS connections are particularly time‑consuming to create.

- Delivers faster TLS performance – HTTP/2 only needs one expensive TLS handshake, and multiplexing gets the most out of the single connection. HTTP/2 also compresses header data, and avoiding HTTP/1.x performance optimizations such as file concatenation lets caching work more efficiently.

- Simplifies your web applications – With HTTP/2 you can move away from HTTP/1.x “optimizations” that make both you, as the app developer, and the client device work harder.

- Great for mixed Web pages – HTTP/2 shines with traditional Web pages that mix HTML, CSS, JavaScript code, images, and limited multimedia. Browsers can prioritize file requests to make key parts of the page appear first and fast.

- Supports greater security – By reducing the performance hit from TLS, HTTP/2 allows more web applications to use it, providing greater security for users.

And here are five corresponding downsides you’ll encounter:

- Greater overhead for the single connection – The HPACK header‑compression algorithm updates a look‑up table at both ends. This makes the connection stateful, and the single connection requires extra memory.

- You might not need encryption – If your data doesn’t need protection, or it’s already protected by DRM or other encoding, TLS security probably doesn’t help you much.

- HTTP/1.x optimizations hurt – HTTP/1.x optimizations actually hurt performance in browsers that support HTTP/2, and unwinding them is extra work for you.

- Big downloads don’t benefit – If your web application mainly delivers large, downloadable files or media streams, you probably don’t want TLS, and multiplexing doesn’t provide any benefit when only one stream is in use.

- Your customers may not care – Perhaps you believe your customers don’t care whether the cat videos they share on your site are protected with TLS and HTTP/2 – and you might be right.

It all comes down to performance – and on that front, we have good news and bad news.

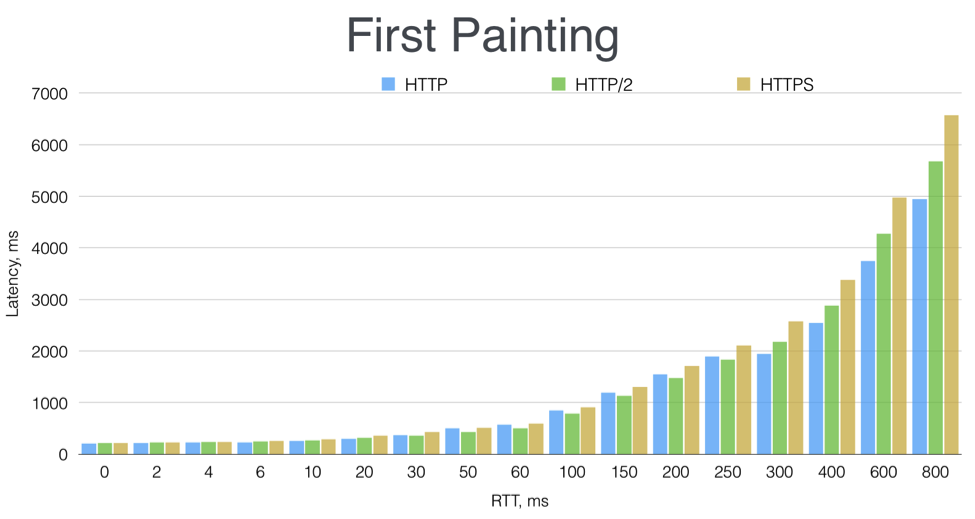

The good news is that an initial, internal test we ran here at NGINX shows the result that theory would predict: for mixed‑content web pages requested over connections with typical Internet latencies, HTTP/2 performs better than HTTP/1.x and HTTPS. The results fall into three groups depending on the connection’s typical round‑trip time (RTT):

- Very low RTTs (0–20 ms) – There is virtually no difference between HTTP/1.x, HTTP/2, and HTTPS.

- Typical Internet RTTs (30–250 ms) – HTTP/2 is faster than HTTP/1.x, and both are faster than HTTPS. For US cities near one another, RTT is about 30 ms, and it’s about 70 ms from coast to coast (about 3000 miles). On the shortest route between Tokyo and London, RTT is about 240 ms.

- High RTTs (300 ms and up) – HTTP/1.x is faster than HTTP/2, which is faster than HTTPS.
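To apply these results to your own users, you can measure a typical RTT and look up which group it falls into. The sketch below simply encodes the three groups as a lookup; the names `RTT_BANDS` and `rtt_band` are made up for illustration, and RTTs that fall between the reported groups (20–30 ms and 250–300 ms) return `None` because the test results above do not cover them.

```python
from typing import Optional

# Bands copied from the test results listed above. The ranges between them
# (20-30 ms and 250-300 ms) were not reported, so they map to None.
RTT_BANDS = [
    (0, 20, "very low: virtually no difference between HTTP/1.x, HTTP/2, and HTTPS"),
    (30, 250, "typical: HTTP/2 fastest, then HTTP/1.x, then HTTPS"),
    (300, float("inf"), "high: HTTP/1.x fastest, then HTTP/2, then HTTPS"),
]


def rtt_band(rtt_ms: float) -> Optional[str]:
    """Return the summary for the band containing rtt_ms, or None if unreported."""
    for low, high, summary in RTT_BANDS:
        if low <= rtt_ms <= high:
            return summary
    return None
```

For example, `rtt_band(70)` (roughly a US coast‑to‑coast RTT) falls into the "typical" group, while `rtt_band(25)` returns `None`.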

The figure shows the time to first paint – that is, the time until the user first sees Web page content appear on the screen. This measurement is often considered critical to users’ perception of a web site’s responsiveness.

For details of our testing, watch my presentation from nginx.conf 2015, The HTTP/2 Module in NGINX.

However, every web page is different, and indeed every user session is different. If you have streaming media or large downloadable files, or if you’ve scienced the, err, sugar out of your HTTP/1.x optimizations (with apologies to The Martian), your results may be different, or even opposite.

The bottom line is: you might find the cost‑benefit trade‑off to be unclear. If so, learn as much as you can, do performance testing on your own content, and then make a call. (For guidance, watch our webinar, What’s New in HTTP/2?.)

Tip 2 – Terminate HTTP/2 and TLS

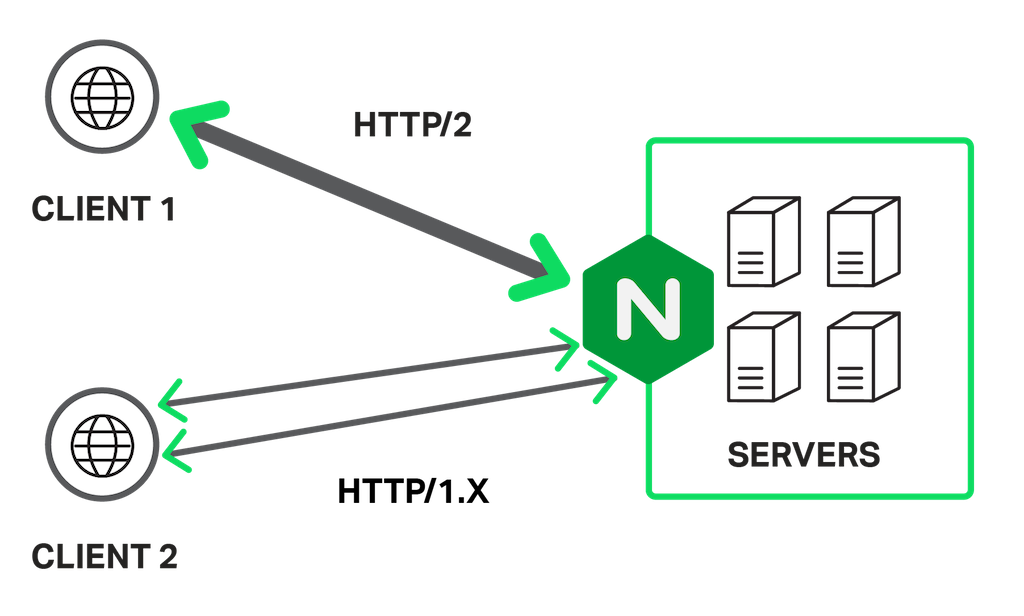

Terminating a protocol means that clients connect to a proxy server using a desired protocol, such as TLS or HTTP/2. The proxy server then connects to application servers, database servers, and so on without necessarily using the same protocol, as shown in the figure.

Using a separate server for termination means moving to a multiserver architecture. The servers may be separate physical servers, virtual servers, or virtual server instances in a cloud environment such as AWS. This is a step up in complexity compared to a single server, or even an application server/database server combination. But it affords many benefits and is virtually – no pun intended – a necessity for busy sites.

Once a server or virtual server is placed in front of an existing setup, many things are possible. The new server offloads the job of client communications from the other servers, and it can be used for load balancing, static file caching, and other purposes. It becomes easy to add and replace application servers and other servers as needed.

NGINX and NGINX Plus are often used for all of these purposes – terminating TLS and HTTP/2, load balancing, and more. The existing environment doesn’t need to be changed at all, except for “frontending” it with the NGINX server.

Tip 3 – Consider Starting with SPDY

Editor – Since the publication of this blog post, SPDY has reached the end of support from major browsers. Starting with SPDY is no longer an option for widespread deployment.

SPDY is the predecessor protocol to HTTP/2, and its overall performance is about the same. Because it’s been around for several years, more web browsers support SPDY than support HTTP/2. However, at this writing, the gap is closing; about two‑thirds of web browsers support HTTP/2, while about four out of five support SPDY.

If you’re in a hurry to implement the new style of Web transport protocol, and you want to support the largest possible number of users today, you can start out by offering SPDY. Then, in early 2016, when SPDY support begins to be removed, you can switch over to HTTP/2 – a quick change, at least with NGINX. By that point more users will be on browsers that support HTTP/2, so you will have done the best thing for the largest number of your users during this transition.

Tip 4 – Identify Your Use of HTTP/1.x Optimizations

Before you decide to implement HTTP/2, you need to identify how much of your code base is optimized for HTTP/1.x. There are four types of optimizations to look out for:

- Domain sharding – You may have already put files in different domains to increase parallelism in file transfer to the web browser; content delivery networks (CDNs) do this automatically. But it doesn’t help – and can hurt – performance under HTTP/2. You can use HTTP/2‑savvy domain sharding (see Tip 7) to shard only for HTTP/1.x users.

- Image sprites – Image sprites are agglomerations of images that download in a single file; separate code then retrieves images as they’re needed. The advantages of image sprites are smaller under HTTP/2, though you may still find them useful.

- Concatenating code files – Similar to image sprites, code chunks that would normally be maintained and transferred as separate files are combined into one. The browser then finds and runs the needed code within the concatenated file.

- Inlining files – CSS code, JavaScript code, and even images are inserted directly into the HTML file. This means fewer file transfers, at the expense of a bloated HTML file for initial downloading.

The last three types of optimizations all combine small files into larger ones to reduce new connections and initialization handshaking, which is especially expensive for TLS.

The first optimization, domain sharding, does the opposite – forces more connections to be opened by involving additional domains in the picture. Together, these seemingly contradictory techniques can be fairly effective in increasing the performance of HTTP/1.x sites. However, they all take time, effort, and resources to design, implement, manage, and run.

Before implementing HTTP/2, identify where these optimizations exist and how they are currently affecting application design and workflow in your organization, so you can then modify or undo them after the move to HTTP/2.
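As a starting point for this inventory, a small script can flag some of these patterns in rendered HTML. The sketch below is only a rough aid: it counts distinct asset hosts (a hint of domain sharding), `data:` URIs, and inline `<script>`/`<style>` blocks (hints of inlining). It cannot detect image sprites or concatenated files, which need a look at your build process. The class and function names, and the `example.com` hosts, are placeholders.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class OptimizationScanner(HTMLParser):
    """Counts markup patterns that often indicate HTTP/1.x optimizations."""

    def __init__(self):
        super().__init__()
        self.asset_hosts = set()  # distinct hosts serving assets (sharding hint)
        self.data_uris = 0        # assets inlined as data: URIs
        self.inline_blocks = 0    # <style> tags and <script> tags without src

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # Assets are referenced by src, or by href on <link> (not on <a>).
        url = attrs.get("src") or (attrs.get("href") if tag == "link" else None)
        if url:
            if url.startswith("data:"):
                self.data_uris += 1
            else:
                host = urlparse(url).netloc
                if host:  # relative URLs have no host and are skipped
                    self.asset_hosts.add(host)
        elif tag in ("script", "style"):
            self.inline_blocks += 1


def scan(html):
    """Return a small report of HTTP/1.x optimization hints found in html."""
    scanner = OptimizationScanner()
    scanner.feed(html)
    scanner.close()
    return {
        "asset_hosts": sorted(scanner.asset_hosts),
        "data_uris": scanner.data_uris,
        "inline_blocks": scanner.inline_blocks,
    }


SAMPLE = """
<html><head>
<link rel="stylesheet" href="https://static1.example.com/site.css">
<style>body { margin: 0 }</style>
<script src="https://static2.example.com/app.js"></script>
<script>console.log("inline")</script>
</head><body>
<img src="data:image/png;base64,iVBORw0KGgo=">
<img src="/logo.png">
</body></html>
"""

report = scan(SAMPLE)
print(report)
```

Running it over a handful of representative pages gives a first impression of how much sharding and inlining there is to revisit; treat the counts as pointers for manual review rather than a complete audit.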

Tip 5 – Deploy HTTP/2 or SPDY

Actually deploying HTTP/2 or SPDY is not that hard. If you’re an NGINX user, you simply “turn on” the protocol in your NGINX configuration, as we detail for HTTP/2 in our white paper, HTTP/2 for Web Application Developers (PDF). The browser and the server will then negotiate to choose a protocol, settling on HTTP/2 if the browser also supports it (and if TLS is in use).

Once you implement HTTP/2 at the server level, users on browser versions that support HTTP/2 will have their sessions with your web app run on HTTP/2. Users on older browser versions will have their sessions run on HTTP/1.x, as shown in the figure. If and when you implement HTTP/2 or SPDY for a busy site, you’ll want to have processes in place to measure performance before and after, and reverse the change if it has significant negative impacts.
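One simple check after deployment is to confirm which protocol a TLS client actually negotiates with your server. The sketch below uses Python’s standard `ssl` module to offer HTTP/2 (`h2`) and HTTP/1.1 through ALPN and report the server’s choice. It assumes the server negotiates through ALPN and presents a certificate the client trusts; the function names are made up for illustration.

```python
import socket
import ssl


def alpn_context():
    """Client TLS context that offers HTTP/2 first, then HTTP/1.1, via ALPN."""
    context = ssl.create_default_context()
    context.set_alpn_protocols(["h2", "http/1.1"])
    return context


def negotiated_protocol(host, port=443, timeout=5.0):
    """Return the ALPN protocol the server selected, or None if it chose none."""
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with alpn_context().wrap_socket(raw, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

Calling `negotiated_protocol("www.example.com")` (a placeholder hostname) returns `"h2"` when the server agrees to HTTP/2 and `"http/1.1"` or `None` otherwise. Running it before and after the configuration change is a quick sanity check, not a substitute for performance measurement.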

Note: With HTTP/2 and its use of a single connection, some details of your NGINX configuration become more important. Review your NGINX configuration, with special attention to tuning and testing the settings of directives such as output_buffers, proxy_buffers, and ssl_buffer_size. For general configuration instructions, see NGINX SSL/TLS Termination in the NGINX Plus Admin Guide. You can get more specific tips in our blogs SSL Offloading, Encryption, and Certificates with NGINX and How to Improve SEO with HTTPS and NGINX. Our white paper, NGINX SSL Performance, explores how the choice of server key size, server agreement, and bulk cipher affects performance.

Note: The ciphers you use with HTTP/2 may require extra attention. The RFC for HTTP/2 has a long list of cipher suites to avoid, and you may prefer to configure the cipher list yourself. In NGINX and NGINX Plus, consider tuning the ssl_ciphers directive and enabling ssl_prefer_server_ciphers, and testing the configuration with popular browser versions. The Qualys SSL Server Test analyzes the configuration of SSL web servers, but (as of November 2015) it is not reliable for HTTP/2 handshakes.
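When reviewing a cipher list by hand, a rough name‑based filter can help spot suites that are likely on the RFC’s list. RFC 7540 describes that list (Appendix A) as covering suites without ephemeral key exchange and suites based on null, stream, or block ciphers. The sketch below applies that description as a heuristic to OpenSSL‑style TLS 1.2 suite names. It is an approximation, not a copy of the RFC’s list, and it does not understand keywords such as `HIGH` or `!aNULL`; the function names are hypothetical.

```python
# Heuristic markers in OpenSSL-style TLS 1.2 suite names (an approximation
# of the criteria RFC 7540 gives for its list, not the list itself).
EPHEMERAL_PREFIXES = ("ECDHE-", "DHE-")
AEAD_MARKERS = ("GCM", "CHACHA20", "CCM")


def likely_blacklisted(cipher_name):
    """True if the suite name lacks ephemeral key exchange or an AEAD cipher."""
    name = cipher_name.upper()
    ephemeral = name.startswith(EPHEMERAL_PREFIXES)
    aead = any(marker in name for marker in AEAD_MARKERS)
    return not (ephemeral and aead)


def review_cipher_list(cipher_list):
    """Split a colon-separated list of explicit suite names into (ok, suspect)."""
    names = [n for n in cipher_list.split(":") if n]
    ok = [n for n in names if not likely_blacklisted(n)]
    suspect = [n for n in names if likely_blacklisted(n)]
    return ok, suspect


ok, suspect = review_cipher_list(
    "ECDHE-RSA-AES128-GCM-SHA256:AES128-SHA:"
    "ECDHE-RSA-AES128-SHA:DHE-RSA-AES256-GCM-SHA384"
)
print("ok:", ok)
print("suspect:", suspect)
```

Anything reported as suspect deserves a look against the RFC itself before you decide whether to keep it for older HTTP/1.x clients.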

Tip 6 – Revise HTTP/1.x Optimizations

Surprisingly, undoing or revising your HTTP/1.x optimizations is actually the most creative part of an HTTP/2 implementation. There are several issues to consider and many degrees of freedom as to what to do.

Before you start making changes, consider whether users of older browsers will suffer from them. With that in mind, there are three broad strategies for undoing or revising HTTP/1.x optimizations:

- Nothing to see here, folks – If you haven’t optimized your application(s) for HTTP/1.x, or you’ve made moderate changes that you’re comfortable keeping, then you have no work to do to support HTTP/2 in your app.

- Mixed approach – You may decide to reduce file and data concatenation, but not eliminate them entirely. For instance, some image spriting for cohesive groups of small images might stay, whereas bulking up the initial HTML file with inlined data might go.

- Complete reversal of HTTP/1.x optimizations (but see the domain sharding note in Tip 7) – You may want to get rid of these optimizations altogether.

Caching is a bit of a wildcard. Theoretically, caching operates optimally where there is a plethora of small files. However, that’s a lot of file I/O. Some concatenation of closely related files may make sense, both for workflow and application performance. Carefully consider your own experience and what other developers share as they implement HTTP/2.

Tip 7 – Implement Smart Sharding

Domain sharding is perhaps the most extreme, and also possibly the most successful, HTTP/1.x optimization strategy. You can use a version of domain sharding that still improves performance for HTTP/1.x but is basically ignored, and beneficially so, for HTTP/2, which generally doesn’t benefit from domain sharding because it uses a single connection.

For HTTP/2‑friendly sharding, make these two things happen:

- Make the domain names for sharded resources resolve to the same IP address.

- Make sure your certificate has a wildcard that makes it valid for all of the domain names used for sharding, or that you have an appropriate multidomain certificate.

For details, see RFC 7540, Hypertext Transfer Protocol Version 2 (HTTP/2).

If these conditions are in place, sharding will happen for HTTP/1.x – the domains are different enough to trigger browsers to create additional sets of connections – and not for HTTP/2; the separate domains are treated as one domain, and a single connection can access all of them.
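The two conditions can be checked mechanically before you rely on them. The sketch below takes the DNS answers and certificate names as plain inputs (so it makes no network calls) and reports whether a set of sharded hostnames meets both conditions. The function names, hostnames, and IP addresses are illustrative placeholders, and the wildcard rule assumed here is the common one where `*` stands for exactly one leftmost label.

```python
def cert_covers(host, cert_names):
    """True if host matches an exact name or a single-label wildcard in cert_names."""
    host = host.lower()
    for name in cert_names:
        name = name.lower()
        if name == host:
            return True
        if name.startswith("*."):
            # "*.example.com" covers "a.example.com" but not "example.com"
            # or "a.b.example.com": the wildcard stands for exactly one label.
            first_label, _, rest = host.partition(".")
            if first_label and rest == name[2:]:
                return True
    return False


def can_share_connection(hosts, dns, cert_names):
    """True if all hosts share at least one IP address and the certificate covers each."""
    common_ips = set.intersection(*(set(dns[h]) for h in hosts))
    return bool(common_ips) and all(cert_covers(h, cert_names) for h in hosts)


# Placeholder DNS answers and certificate names for illustration only.
dns = {
    "static1.example.com": ["203.0.113.10"],
    "static2.example.com": ["203.0.113.10"],
    "cdn.example.net": ["198.51.100.7"],
}
shards = ["static1.example.com", "static2.example.com"]
print(can_share_connection(shards, dns, ["*.example.com"]))
```

With these inputs the two `example.com` shards satisfy both conditions, whereas adding `cdn.example.net` (different IP address, not covered by the wildcard) would not.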

Conclusion

HTTP/2 and TLS are likely to improve your site performance and let users know that their interaction with your site is secure. Whether you’re first on your block to implement HTTP/2, or catching up to competitors, you can add HTTP/2 support quickly and well.

Use these tips to help you achieve the highest HTTP/2 performance with the least ongoing effort, so you can put your focus on creating fast, effective, secure applications that are easy to maintain and run.

Resources

- For the lowdown on HTTP/2, see the white paper from NGINX (PDF).

- The Can I use website shows browser support for a wide range of front-end web technologies, including SPDY and HTTP/2.

- As of January 2016, more than 6% of websites used HTTP/2.

- For details of our testing, see the HTTP/2 presentation from nginx.conf 2015.

- NGINX has a webinar, What’s New in HTTP/2?, which describes key features and gives implementation advice.

- For an overview of NGINX performance tips, see our blog post, 10 Tips for 10x Application Performance.