Maximizing Python Performance with NGINX, Part 1: Web Serving and Caching

Introduction – How NGINX is Used with Python

Python is famous for being easy and fun to use, for making software development easier, and for runtime performance that is said to exceed that of other scripting languages. (Though the latest version of PHP, PHP 7, may give Python a run for its money.)

Everyone wants their website and application to run faster. And nearly every website, whether its traffic grows steadily or spikes sharply, is vulnerable to performance problems and downtime, which tend to strike at the worst possible moment: the busiest times.

That’s where NGINX and NGINX Plus come in. They improve website performance in three different ways:

- As a web server – NGINX was originally developed to solve the C10K problem – that is, to easily support 10,000 or more simultaneous connections. Using NGINX as the web server for your Python app makes your website faster, even at low levels of traffic. When you have many thousands of users, it’s virtually certain to deliver much higher performance, fewer crashes, and less downtime. You can also do static file caching or microcaching on your NGINX web server, though both work better when run on a separate NGINX reverse proxy server (see the next paragraph).

- As a reverse proxy server – You can “drop NGINX in” as a reverse proxy server in front of your current application server setup. NGINX faces the Web and passes requests to your application server. This “one weird trick” makes your website run faster, reduces downtime, consumes fewer server resources, and improves security. You can also cache static files on the reverse proxy server (very efficient), add microcaching of dynamic content to reduce load on the application itself, and more.

- As a load balancer for multiple application servers – Start by deploying a reverse proxy server. Then scale out by running multiple application servers in parallel and using NGINX or NGINX Plus to load balance traffic across them. With this kind of deployment, you can easily scale your website’s performance in line with traffic requirements, increasing reliability and uptime. If you need a given user session to stay on the same server, configure the load balancer to support session persistence.

NGINX and NGINX Plus bring advantages whether you use them as a web server for your Python app, as a reverse proxy server, as a load balancer, or for all three purposes.

In this first article of a two‑part series, we describe five tips for improving the performance of your Python apps, including the use of NGINX and NGINX Plus as a web server, how to implement caching of static files, and microcaching of application‑generated files. In Part 2, we’ll describe how to use NGINX and NGINX Plus as a reverse proxy server and as a load balancer for multiple application servers.

Tip 1 – Find Python Performance Bottlenecks

There are two very different conditions in which the performance for your Python application matters – first, with everyday, “reasonable” numbers of users; and second, under heavy loads. Many site owners worry too little about performance under light loads, when – in our humble opinion – they should be sweating every tenth of a second in response time. Shaving milliseconds off of response times is difficult and thankless work, but it makes users happier and business results better.

However, this blog post, and the accompanying Part 2, are focused instead on the scenario that everyone does worry about: performance problems that occur when a site gets busy, such as big performance slowdowns and crashes. Also, many hacker attacks mimic the effects of sudden surges in user numbers, and improving site performance is often an important step in addressing attacks as well.

With a system that allocates a certain amount of memory per user, like Apache HTTP Server, each additional user consumes more physical memory until there is none left. The server then starts swapping to disk, performance plummets, and crashes follow. Moving to NGINX, as described in this blog post, helps solve this problem.
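The arithmetic behind that failure mode is simple. The back‑of‑the‑envelope sketch below assumes, hypothetically, that every connection or worker pins a fixed amount of memory; the function name and the figures are illustrative, not measurements of any real server.

```python
# Hypothetical figures for illustration only; measure your own server.
def max_concurrent_clients(ram_mb: int, reserved_mb: int, per_client_mb: int) -> int:
    """How many clients fit in RAM if each one pins its own chunk of memory.

    Beyond this number, a server that dedicates memory to every connection
    starts swapping to disk.
    """
    if per_client_mb <= 0:
        raise ValueError("per_client_mb must be positive")
    usable_mb = max(ram_mb - reserved_mb, 0)  # RAM left after the OS, database, etc.
    return usable_mb // per_client_mb


if __name__ == "__main__":
    # Assumed: an 8 GB machine, 1 GB reserved, about 20 MB per client.
    print(max_concurrent_clients(8192, 1024, 20))  # prints 358
```

Under those assumed numbers, client number 359 pushes the machine into swap. A server that does not tie a fixed chunk of memory to each connection sees its memory use grow far more slowly as users pile in.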

Python is particularly prone to memory‑related performance problems, because it generally uses more memory to accomplish its tasks than other scripting languages (and executes them faster as a result). So, all other things being equal, your Python‑based app may “fall over” under a smaller user load than an app written in another language.
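Before changing infrastructure, it helps to know how much memory a single request actually costs your app. A minimal sketch using the standard library's `tracemalloc` module (Python 3.9 or later, for `reset_peak`); `render_page` is a hypothetical stand‑in for one of your own request handlers.

```python
import tracemalloc


def peak_allocation_bytes(handler, *args, **kwargs):
    """Run handler once; return (result, peak bytes allocated while it ran).

    tracemalloc only sees memory allocated through Python's own allocators,
    so treat the figure as a lower bound on what the request really costs.
    """
    tracemalloc.start()
    try:
        tracemalloc.reset_peak()  # ignore anything allocated before this point
        result = handler(*args, **kwargs)
        _current, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def render_page(n_rows):
    """Hypothetical request handler that builds a large page in memory."""
    rows = [f"<tr><td>{i}</td></tr>" for i in range(n_rows)]
    return "".join(rows)


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        _, peak = peak_allocation_bytes(render_page, n)
        print(f"{n:>7} rows: peak {peak / 1024:.0f} KiB")
```

Multiplying the per‑request peak by the number of requests you expect to be in flight at once gives a rough idea of where memory, rather than CPU, becomes the bottleneck.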

Optimizing your app can help somewhat, but that’s usually not the best or fastest way to solve traffic‑related site performance problems. The steps in this blog post, and the accompanying Part 2, are the best and fastest ways to address traffic‑related performance problems. Then, after you address the steps given here, by all means go back and improve your app, or rewrite it to use a microservices architecture.

Tip 2 – Choose Single‑Server or Multiserver Deployment

Small websites work fine when deployed on a single server. Large websites require multiple servers. But if you’re in the gray area in‑between – or your site is growing from a small site to a large one – you have some interesting choices to make.

If you have a single‑server deployment, you’re at significant risk if you experience spikes in traffic or rapid overall traffic growth. Your scalability is limited, with possible fixes that include improving your app, switching your web server to NGINX, getting a bigger and faster server, or offloading storage to a content delivery network (CDN). Each of these options takes time to implement, has a cost to it, and risks introducing bugs or problems in implementation.

Also, with single‑server deployment, your site by definition has a single point of failure – and many of the problems that can take your site offline don’t have quick or simple solutions.

If you switch your server to NGINX in a single‑server deployment, you can freely choose between NGINX Open Source and NGINX Plus. NGINX Plus includes enterprise‑grade support and additional features. Some of the additional features, such as live activity monitoring, are relevant for a single‑server deployment, and others, such as load balancing and session persistence, come into play if you use NGINX Plus as a reverse proxy server in a multiserver deployment.

All things considered, unless you’re sure that your site is going to stay small for a long time to come, and downtime is not a major worry, single‑server deployment has some risk to it. Multiserver deployment is almost arbitrarily scalable – single points of failure can be engineered out and performance can be whatever you choose it to be, with the ability to add capacity quickly.
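The single‑point‑of‑failure argument can be put in numbers. This is a minimal sketch resting on two simplifying assumptions: server failures are independent, and any one surviving server can carry the whole load. Real deployments rarely meet both, and the component that spreads traffic across the servers must not itself become a new single point of failure.

```python
def availability(per_server: float, servers: int) -> float:
    """Probability that at least one of `servers` identical servers is up.

    Assumes independent failures and that one healthy server can handle
    all of the traffic on its own.
    """
    if not 0.0 <= per_server <= 1.0:
        raise ValueError("per_server must be between 0 and 1")
    if servers < 1:
        raise ValueError("need at least one server")
    # The site is down only if every server is down at the same time.
    return 1.0 - (1.0 - per_server) ** servers


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(n, f"{availability(0.99, n):.6f}")
```

A single server that is up 99% of the time is down about 3.65 days a year; under the assumptions above, a second server lifts the combined figure to 99.99%.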

Tip 3 – Change Your Web Server to NGINX

In the early days of the Web, the name “Apache” was synonymous with “web server”. But NGINX was developed in the early 2000s and is steadily gaining in popularity; it’s already the #1 web server at the 1,000, 10,000, 100,000, and 1 million busiest websites in the world.

NGINX was developed to solve the C10K problem, that is, to handle more than 10,000 simultaneous connections within a given memory budget. Web servers that dedicate a process or thread to each connection need a chunk of memory for every one of them, so they run out of physical memory and slow down or crash when thousands of users want to access a site at the same time. NGINX instead uses an event‑driven architecture in which a small number of worker processes each handle many connections, so it scales gracefully to many more users. (It’s also excellent for additional purposes, as we’ll describe below.)
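NGINX itself is written in C and relies on operating‑system event notification (such as epoll on Linux), but the underlying idea can be illustrated with Python’s `asyncio`: one event loop in one thread multiplexes many connections that spend most of their time waiting, rather than dedicating a thread or process, and its memory, to each. This is an analogy under simplifying assumptions, not how NGINX is implemented; the simulated connections below just sleep.

```python
import asyncio
import time


async def handle_connection(conn_id: int, io_wait: float) -> int:
    """Simulated client connection that mostly waits on the network."""
    await asyncio.sleep(io_wait)  # stands in for a slow client or upstream
    return conn_id


async def serve(n_connections: int, io_wait: float = 0.05) -> tuple[int, float]:
    """Serve n simulated connections concurrently in a single thread."""
    start = time.perf_counter()
    results = await asyncio.gather(
        *(handle_connection(i, io_wait) for i in range(n_connections))
    )
    return len(results), time.perf_counter() - start


if __name__ == "__main__":
    served, elapsed = asyncio.run(serve(10_000))
    # The waits overlap, so this takes a fraction of a second instead of
    # 10,000 x 0.05 s = 500 s if the connections were handled one at a time.
    print(f"served {served} connections in {elapsed:.2f} s")
```

Each waiting connection costs only a small coroutine object rather than a whole thread or process, which is why this style of server degrades gracefully as connection counts climb.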

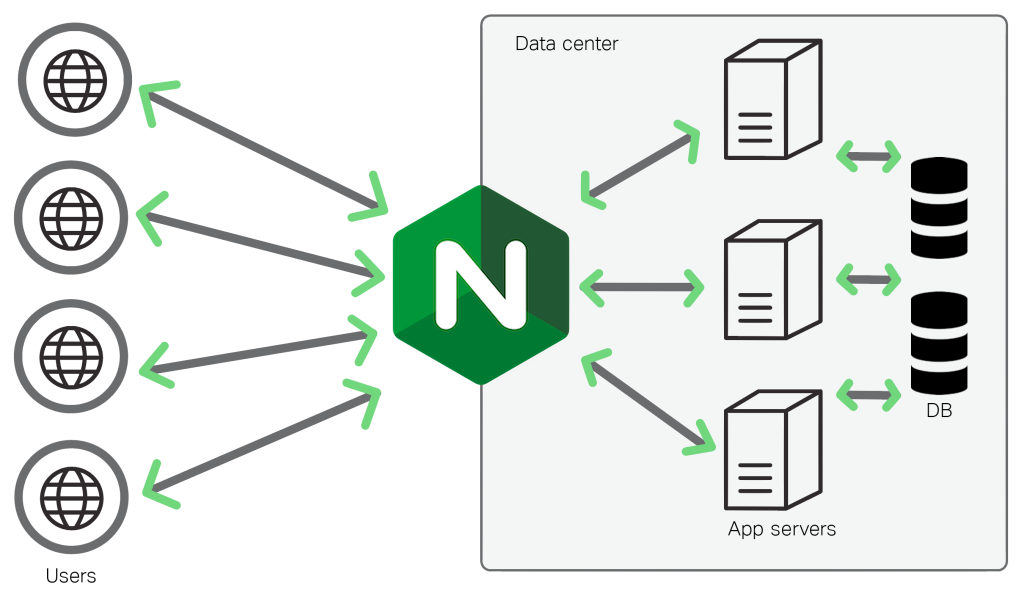

A high‑level overview of NGINX’s architecture is shown below.

In the diagram, a Python application server fits into the Application server block in the backend, and is shown being accessed by FastCGI. NGINX doesn’t “know” how to run Python, so it needs a gateway to an environment that does. FastCGI is a widely used interface for PHP, Python, and other languages.

However, a more popular choice for communication between Python and NGINX is the Web Server Gateway Interface (WSGI). WSGI works in multithreaded and multiprocess environments, so it scales well across all the deployment options mentioned in this blog post.
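To show what WSGI looks like from the Python side, here is a minimal WSGI application of the kind specified in PEP 3333. It is a sketch: the response text is made up, and a real project would normally let a framework such as Django provide this callable. A WSGI server such as uWSGI or Gunicorn calls it once per request, passing the request variables in `environ`.

```python
def application(environ, start_response):
    """A minimal WSGI application (PEP 3333)."""
    path = environ.get("PATH_INFO", "/")
    body = f"Hello from Python, you asked for {path}\n".encode("utf-8")
    start_response(
        "200 OK",
        [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(body))),
        ],
    )
    return [body]  # an iterable of bytes


if __name__ == "__main__":
    # Call the app directly, the way a WSGI server would (no network needed).
    from wsgiref.util import setup_testing_defaults

    environ = {"PATH_INFO": "/main"}
    setup_testing_defaults(environ)  # fills in the other required keys

    def start_response(status, headers):
        print(status, headers)

    print(b"".join(application(environ, start_response)))
```

For a quick local server, the standard library’s `wsgiref.simple_server.make_server` can serve the same callable, but it is intended for development; in production the callable runs under a WSGI server behind NGINX.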

If you move to NGINX as your web server, there’s lots of support out there for the steps involved:

- Configuring Gunicorn – “Green Unicorn”, a popular WSGI server, for use with NGINX.

- Configuring uWSGI – Another popular WSGI server, for use with NGINX. NGINX supports uWSGI’s native uwsgi protocol directly, through the uwsgi_pass directive.

- Using uWSGI, NGINX, and Django – Django is a popular Python web framework.
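Gunicorn reads its configuration file as ordinary Python, so the Gunicorn side of such a setup can be sketched as a `gunicorn.conf.py`. The values below are assumptions to adapt, not recommendations for your workload. Because Gunicorn speaks HTTP rather than the uwsgi protocol, NGINX would forward to it with `proxy_pass http://127.0.0.1:8000;` rather than `uwsgi_pass`.

```python
# gunicorn.conf.py: a sketch of a Gunicorn configuration for running
# behind NGINX. Start it with, for example:
#   gunicorn -c gunicorn.conf.py myproject.wsgi   (module name is hypothetical)
import multiprocessing

# Listen only on loopback; NGINX is the process that faces the Internet.
bind = "127.0.0.1:8000"

# Gunicorn's documentation suggests (2 x CPU cores) + 1 workers as a
# starting point, to be tuned under real load.
workers = multiprocessing.cpu_count() * 2 + 1

# Synchronous workers (the default) assume a buffering proxy such as NGINX
# in front of them to absorb slow clients.
worker_class = "sync"

# Restart a worker that has been silent for this many seconds.
timeout = 30
```

Keeping Gunicorn on the loopback interface means every request passes through NGINX first, which is where static files and cached pages can be answered without touching Python at all.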

This snippet shows how you might configure NGINX for use with uWSGI – in this case, a project using the Python framework Django:

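```nginx
http {
    # ...

    # The uWSGI server running the Django project
    upstream django {
        server 127.0.0.1:29000;
    }

    server {
        listen 80;
        server_name myapp.example.com;
        root /var/www/myapp/html;

        location / {
            index index.html;
        }

        # Serve Django's static files directly from disk
        location /static/ {
            alias /var/django/projects/myapp/static/;
        }

        # Pass requests under /main to Django over the uwsgi protocol
        location /main {
            include /etc/nginx/uwsgi_params;
            uwsgi_pass django;
            # uwsgi_param names reach the app as WSGI environ keys exactly as
            # written, so use CGI-style HTTP_* names for header values
            uwsgi_param HTTP_HOST $host;
            uwsgi_param HTTP_X_REAL_IP $remote_addr;
            uwsgi_param HTTP_X_FORWARDED_FOR $proxy_add_x_forwarded_for;
            # NGINX faces the client here, so report its own scheme
            uwsgi_param HTTP_X_FORWARDED_PROTO $scheme;
        }
    }
}
```

Tip 4 – Implement Static File Caching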

Caching of static content involves keeping a copy of files that don’t change all that often – which might mean every few hours or never – at a location other than the application server. A typical example of static content is a JPEG image that’s displayed as part of a web page.

Static file caching is a common way to enhance application performance, and it actually happens at several levels:

- In the user’s browser

- In intermediate caches at several levels – from a company’s internal network to an Internet Service Provider (ISP)

- On a web server, as we’ll describe here

Implementing static file caching on the web server has two benefits:

- Faster delivery to the user – NGINX is optimized for serving static files and delivers them much faster than an application server can.

- Reduced load on the application server – The application server doesn’t even see requests for cached static files, because the web server satisfies them.

Static file caching works fine with a single‑server implementation, but the underlying hardware is still shared by the web server and the application server. While the web server is using that hardware to retrieve a cached file, however efficiently, those resources aren’t available to the application, which may slow it down somewhat.

To support browser caching, set HTTP headers correctly for static files. Consider the HTTP Cache-Control header (and its max-age setting in particular), the Expires header, and entity tags (ETags). For a good introduction to the topic, see Using NGINX and NGINX Plus as an Application Gateway with uWSGI and Django in the NGINX Plus Admin Guide.
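What those headers carry can be sketched in a few lines of Python. This illustrates the headers themselves, not how NGINX computes them: NGINX’s `expires` directive sets Cache-Control and Expires for you, and its ETags are derived from a file’s modification time and size rather than a content hash. The function names and the truncated SHA‑256 ETag below are hypothetical choices.

```python
import hashlib
from email.utils import formatdate


def static_cache_headers(body: bytes, max_age: int, now: float) -> dict[str, str]:
    """Browser-caching headers for one static file (a sketch).

    - Cache-Control max-age: seconds the cached copy stays fresh.
    - Expires: the same deadline as an absolute date, for older HTTP/1.0 caches.
    - ETag: a validator so a browser can cheaply recheck a stale copy.
    """
    etag = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    return {
        "Cache-Control": f"public, max-age={max_age}",
        "Expires": formatdate(now + max_age, usegmt=True),
        "ETag": etag,
    }


def is_not_modified(request_headers: dict[str, str], etag: str) -> bool:
    """True if the browser's copy is current, so a 304 can be sent.

    Simplified: real If-None-Match values may list several or weak ETags.
    """
    return request_headers.get("If-None-Match") == etag


if __name__ == "__main__":
    headers = static_cache_headers(b"...image bytes...", max_age=86400, now=0)
    print(headers["Expires"])  # Fri, 02 Jan 1970 00:00:00 GMT
```

While max-age has not elapsed, the browser does not contact the server at all; after it elapses, the ETag lets the server answer with a short 304 instead of resending the file.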

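The following code configures NGINX to cache static files, including JPEG files, GIFs, PNG files, MP4 video files, PowerPoint files, and many others. Replace www.example.com with the domain name of your web server. The example was written for a FastCGI backend (here PHP‑FPM); the static‑file location block at the end is the part that matters, and it works the same way in front of a Python application server.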

```nginx
server {
    # substitute your web server's domain name for "www.example.com"
    server_name www.example.com;
    root /var/www/example.com/htdocs;
    index index.php;

    access_log /var/log/nginx/example.com.access.log;
    error_log  /var/log/nginx/example.com.error.log;

    location / {
        try_files $uri $uri/ /index.php?$args;
    }

    location ~ \.php$ {
        try_files $uri =404;
        include fastcgi_params;
        # substitute the socket, or address and port, of your Python server
        fastcgi_pass unix:/var/run/php5-fpm.sock;
        #fastcgi_pass 127.0.0.1:9000;
    }

    # Static files: let browsers cache them as long as possible and skip logging.
    # The dot is escaped so only real file extensions match, and the regular
    # expression must stay on a single line.
    location ~* \.(ogg|ogv|svg|svgz|eot|otf|woff|mp4|ttf|css|rss|atom|js|jpg|jpeg|gif|png|ico|zip|tgz|gz|rar|bz2|doc|xls|exe|ppt|tar|mid|midi|wav|bmp|rtf)$ {
        expires max;
        log_not_found off;
        access_log off;
    }
}
```

Tip 5 – Implement Microcaching

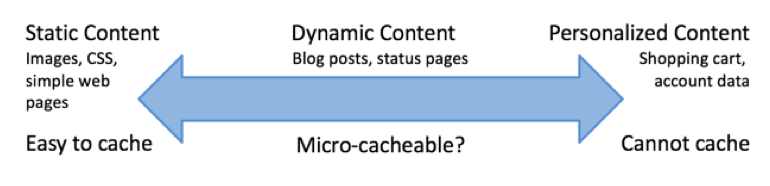

Microcaching exploits a huge opportunity for improving performance on application servers running Python, PHP, and other languages. For caching purposes, there are three types of web pages:

- Static files – These can be cached, as described in Tip 4.

- Application‑generated, non‑personalized pages – It doesn’t usually make sense to cache these, as they need to be fresh. An example is a page delivered to an e‑commerce user who’s not logged in (see the next point) – the available products, recommended similar products, and so on may be changing constantly, so it’s important to provide a fresh page. However, if another user comes along, say a tenth of a second later, it might be just fine to show them the same page as the previous user.

- Application‑generated, personalized pages – These can’t usefully be cached, as they’re user‑specific, and the same user is unlikely to see the same personalized page twice. An example is an e‑commerce page for a user who’s logged in; the same page can’t be shown to other users.
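The three categories translate into a simple per‑request decision. A minimal sketch, assuming that a session cookie is what marks a request as personalized; the extension list and cookie name are hypothetical placeholders (Django’s default session cookie happens to be called `sessionid`). In NGINX itself this decision is usually expressed with directives such as `proxy_cache_bypass` and `proxy_no_cache` keyed on the cookie, not in Python.

```python
from enum import Enum


class CachePolicy(Enum):
    STATIC = "cache the file for a long time"
    MICROCACHE = "cache the page for about one second"
    NO_CACHE = "always pass to the application"


# Hypothetical values: adjust to your site's static file types and the
# name of your session cookie.
STATIC_EXTENSIONS = (".css", ".js", ".jpg", ".jpeg", ".png", ".gif", ".mp4")
SESSION_COOKIE = "sessionid"


def cache_policy(path: str, cookies: dict[str, str]) -> CachePolicy:
    """Classify a request as static, non-personalized, or personalized."""
    if path.lower().endswith(STATIC_EXTENSIONS):
        return CachePolicy.STATIC  # same file for everyone
    if SESSION_COOKIE in cookies:
        return CachePolicy.NO_CACHE  # logged-in user: personalized page
    return CachePolicy.MICROCACHE  # generated, but identical for all visitors
```

The static check comes first on purpose: a logged‑in user still receives the same image or stylesheet as everyone else.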

Microcaching is useful for the second page type described above – application‑generated, non‑personalized pages. “Micro” refers to a brief timeframe. When your site generates the same page several times a second, it might not hurt the freshness of the page very much if you cache it for one second. Yet this brief caching period can hugely offload the application server, especially during traffic spikes. Instead of generating 10, or 20, or 100 pages (with the same content) during the cache‑timeout period, it generates a given page just once, then the page is cached and served to many users from the cache.
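The mechanism can be sketched as a tiny time‑based cache in Python. This shows the idea only, under simplifying assumptions: it is single‑threaded and does nothing about several requests missing the cache at the same instant, which NGINX handles with directives such as `proxy_cache_lock`. The class and function names are hypothetical.

```python
import time
from typing import Callable


class MicroCache:
    """Cache each page for `ttl` seconds (a sketch of microcaching).

    Within one TTL window, the expensive render function runs once per key;
    every other request in that window is answered from the cache.
    """

    def __init__(self, ttl: float = 1.0, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    def get(self, key: str, render: Callable[[], str]) -> str:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # still fresh: the application is not called
        page = render()  # miss or expired: generate the page once
        self._store[key] = (now, page)
        return page


if __name__ == "__main__":
    renders = 0

    def render_home() -> str:
        global renders
        renders += 1
        return "<h1>Products</h1>"

    cache = MicroCache(ttl=1.0)
    for _ in range(100):  # 100 requests arriving within the same second
        cache.get("/", render_home)
    print("pages generated:", renders)
```

Run as a script, the loop should report a single generated page for all 100 requests, which is the offloading effect described above.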

The effect is kind of miraculous. A server that’s slow when handling scores of requests per second for the same page becomes quite speedy when it handles exactly one request per page per second. (Plus, of course, any personalized pages.) Our own Owen Garrett has a blog post which elaborates on the benefits of microcaching, with configuration code. The core change, setting up a proxy cache with a timeout of one second, requires just a few lines of configuration code.

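```nginx
# proxy_cache_path goes in the http context, alongside the server block
proxy_cache_path /tmp/cache keys_zone=cache:10m levels=1:2 inactive=600s max_size=100m;

server {
    proxy_cache cache;
    # cache successful (200) responses for one second
    proxy_cache_valid 200 1s;
    # ...
}
```

For more sample configuration, see Tyler Hicks‑Wright’s blog about Python and uWSGI with NGINX.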

Conclusion

In Part 1 we’ve gone over solutions for increasing the performance of a single‑server Python implementation, as well as caching, which can be deployed on a single‑server implementation or can be run on a reverse proxy server or a separate caching server. (Caching works better on a separate server.) The next part, Part 2, describes performance solutions that require two or more servers.

If you’d like to explore the advanced features of NGINX Plus for your application, such as support, live activity monitoring, and on‑the‑fly reconfiguration, start your free 30-day trial today or contact us to discuss your use cases.

"This blog post may reference products that are no longer available and/or no longer supported. For the most current information about available F5 NGINX products and solutions, explore our NGINX product family. NGINX is now part of F5. All previous NGINX.com links will redirect to similar NGINX content on F5.com."