In the world of container orchestration there are two names that we run into all the time: Red Hat OpenShift Container Platform (OCP) and Kubernetes. OpenShift, as you probably know, uses Kubernetes underneath, as do many of the other container orchestration platforms. Routing external traffic into a Kubernetes or OpenShift environment has always been a little challenging, in two ways:

- Exposing services deployed inside Kubernetes to the outside world. The solution is an Ingress controller like NGINX Plus Ingress Controller. You can read more about it in our blog Getting Started with NGINX Ingress Operator on Red Hat OpenShift.

- Load balancing traffic across your Kubernetes nodes. To solve this problem, organizations usually choose an external hardware or virtual load balancer or a cloud‑native solution. However, NGINX Plus can also be used as the external load balancer, improving performance and simplifying your technology investment.

In this blog, I focus on how to solve the second problem using NGINX Plus in a way that is simple, efficient, and enables your App Dev teams to manage both the Ingress configuration inside Kubernetes and the external load balancer configuration outside. As a reference architecture to help you get started, I’ve created the nginx-lb-operator project on GitHub. The NGINX Load Balancer Operator (NGINX-LB-Operator) is an Ansible‑based Operator for NGINX Controller created using the Red Hat Operator Framework and SDK. NGINX-LB-Operator drives the declarative API of NGINX Controller to update the configuration of the external NGINX Plus load balancer when new services are added, Pods change, or deployments scale within the Kubernetes cluster.

Please note that NGINX-LB-Operator is not covered by your NGINX Plus or NGINX Controller support agreement. You can report bugs or request troubleshooting assistance on GitHub.

Kubernetes and NGINX Technologies – A Review

NGINX-LB-Operator relies on a number of Kubernetes and NGINX technologies, so I’m providing a quick review to get us all on the same page. If you’re already familiar with them, feel free to skip to The NGINX Load Balancer Operator.

Kubernetes Controllers and Operators

Kubernetes is an orchestration platform built around a loosely coupled central API. The API provides a collection of resource definitions, along with Controllers (which typically run as Pods inside the platform) to monitor and manage those resources. The Kubernetes API is extensible, and Operators (a type of Controller) can be used to extend the functionality of Kubernetes.

- Controllers – A core part of the Kubernetes system. They create “watches” for specific Kubernetes resources and perform the necessary steps to reach the desired state of each resource as it changes. In customer conversations, the most common Kubernetes Controller discussed is the “Ingress Controller.”

- Operators – Custom controllers which define and make use of custom resource definitions (CRDs) to manage applications and their components.
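To make the control‑loop idea concrete, here is a minimal sketch of one reconciliation pass in plain Python. It does not use the Kubernetes client library; the `Action` type, the `reconcile` function, and the dictionary shapes are all hypothetical names chosen for illustration. A real Controller would receive the desired and observed state from watches on the Kubernetes API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """One step needed to move observed state toward desired state."""
    verb: str  # "create", "update", or "delete"
    name: str


def reconcile(desired: dict[str, dict], observed: dict[str, dict]) -> list[Action]:
    """Compare desired and observed resources and list the required actions.

    Both arguments map a resource name to its spec (hypothetical shape).
    """
    actions: list[Action] = []
    for name, spec in desired.items():
        if name not in observed:
            actions.append(Action("create", name))
        elif observed[name] != spec:
            actions.append(Action("update", name))
    # Anything that exists but is no longer desired gets removed.
    for name in observed:
        if name not in desired:
            actions.append(Action("delete", name))
    return actions


if __name__ == "__main__":
    desired = {"web": {"replicas": 3}, "api": {"replicas": 2}}
    observed = {"web": {"replicas": 2}, "old": {"replicas": 1}}
    for action in reconcile(desired, observed):
        print(action.verb, action.name)
```

A Controller repeats a pass like this every time a watched resource changes, so the observed state keeps converging on whatever is currently declared.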

NGINX Ingress Controllers for Kubernetes

There are two main Ingress controller options for NGINX, and it can be a little confusing to tell them apart because the names in GitHub are so similar. We discussed this topic in detail in a previous blog, but here’s a quick review:

- kubernetes/ingress-nginx – The Ingress controller supported and maintained by the Kubernetes open source community. For ease of use, we call this the “community’s Ingress controller”. Unsurprisingly, it’s based on NGINX Open Source. It provides additional features that are enabled by third‑party Lua modules, which unfortunately tend to hurt performance.

- nginxinc/kubernetes-ingress – The Ingress controller maintained by the NGINX team at F5. There are two versions: one for NGINX Open Source (built for speed) and another for NGINX Plus (also built for speed, but commercially supported and with additional enterprise‑grade features). We call these “NGINX (or our) Ingress controllers”.

You can manage both of our Ingress controllers using standard Kubernetes Ingress resources. We also support Annotations and ConfigMaps to extend the limited functionality provided by the Ingress specification, but extending resources in this way is not ideal.

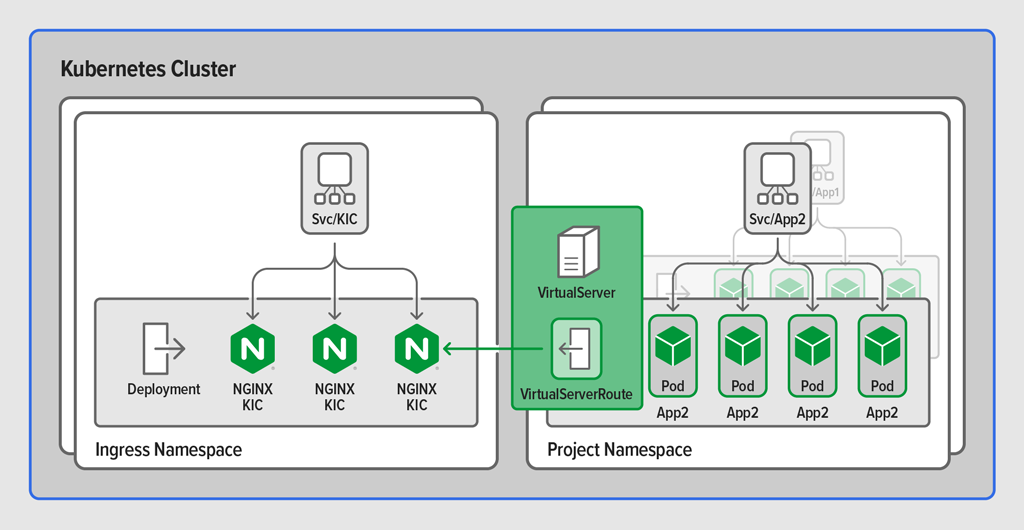

Release 1.6.0 and later of our Ingress controllers include a better solution: custom NGINX Ingress resources called VirtualServer and VirtualServerRoute that extend the Kubernetes API and provide additional features in a Kubernetes‑native way. NGINX Ingress resources expose more NGINX functionality and enable you to use advanced load balancing features with Ingress, implement blue‑green and canary releases and circuit breaker patterns, and more.
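As a rough illustration of a canary release, the sketch below builds a VirtualServer manifest as a Python dictionary and prints it as JSON (which `kubectl` also accepts). The field names follow my understanding of the `k8s.nginx.org/v1` schema and should be checked against the documentation for the release you run. The helper name `virtual_server`, the host, and the service names are made up for this example.

```python
from __future__ import annotations

import json


def virtual_server(name: str, host: str, stable_svc: str, canary_svc: str,
                   canary_weight: int, port: int = 80) -> dict:
    """Build a VirtualServer manifest that splits traffic between two Services.

    Assumption: the field layout mirrors the k8s.nginx.org/v1 VirtualServer
    resource; verify it against the docs for your Ingress controller release.
    """
    if not 0 < canary_weight < 100:
        raise ValueError("canary_weight must be between 1 and 99")
    return {
        "apiVersion": "k8s.nginx.org/v1",
        "kind": "VirtualServer",
        "metadata": {"name": name},
        "spec": {
            "host": host,
            "upstreams": [
                {"name": "stable", "service": stable_svc, "port": port},
                {"name": "canary", "service": canary_svc, "port": port},
            ],
            "routes": [{
                "path": "/",
                # The two weights always add up to 100.
                "splits": [
                    {"weight": 100 - canary_weight, "action": {"pass": "stable"}},
                    {"weight": canary_weight, "action": {"pass": "canary"}},
                ],
            }],
        },
    }


if __name__ == "__main__":
    manifest = virtual_server("cafe", "cafe.example.com", "coffee-v1", "coffee-v2", 10)
    print(json.dumps(manifest, indent=2))
```

Expressing the split in the resource itself is what makes this approach Kubernetes‑native: the same intent with a standard Ingress resource would need controller‑specific Annotations.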

For a summary of the key differences between the three Ingress controller options (the community’s and our two versions), see our GitHub repository.

NGINX Controller

NGINX Controller is our cloud‑agnostic control plane for managing your NGINX Plus instances in multiple environments and leveraging critical insights into performance and error states. Its modules provide centralized configuration management for application delivery (load balancing) and API management. NGINX Controller can manage the configuration of NGINX Plus instances across a multitude of environments: physical, virtual, and cloud. It is built around an eventually consistent, declarative API and provides an app‑centric view of your apps and their components. It’s designed to easily interface with your CI/CD pipelines, abstract the infrastructure away from the code, and let developers get on with their jobs.

When it comes to Kubernetes, NGINX Controller can manage NGINX Plus instances deployed out front as a reverse proxy or API gateway. It doesn’t make sense for NGINX Controller to manage the NGINX Plus Ingress Controller itself, however; because the Ingress Controller performs the control‑loop function for a core Kubernetes resource (the Ingress), it needs to be managed using tools from the Kubernetes platform – either standard Ingress resources or NGINX Ingress resources.

External Load Balancers

The NGINX Plus Ingress Controller for Kubernetes is a great way to expose services inside Kubernetes to the outside world, but you often require an external load balancing layer to manage the traffic into Kubernetes nodes or clusters. If you’re running in a public cloud, the external load balancer can be NGINX Plus, F5 BIG-IP LTM Virtual Edition, or a cloud‑native solution. If you’re deploying on premises or in a private cloud, you can use NGINX Plus or a BIG-IP LTM (physical or virtual) appliance. I’m told there are other load balancers available, but I don’t believe it 😉.

When it comes to managing your external load balancers, you can manage external NGINX Plus instances using NGINX Controller directly. Its declarative API is designed to interface with your CI/CD pipeline, and you can deploy each of your application components using it. But what if your Ingress layer is scalable, you use dynamically assigned Kubernetes NodePorts, or your OpenShift Routes might change?

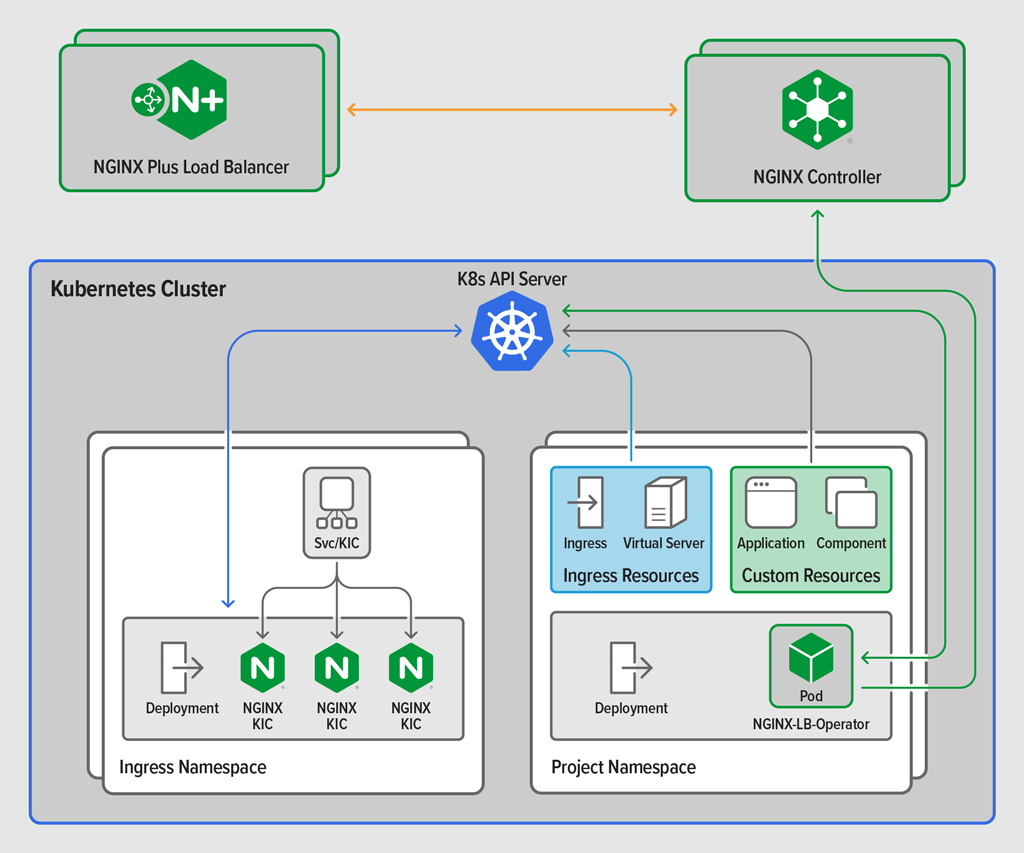

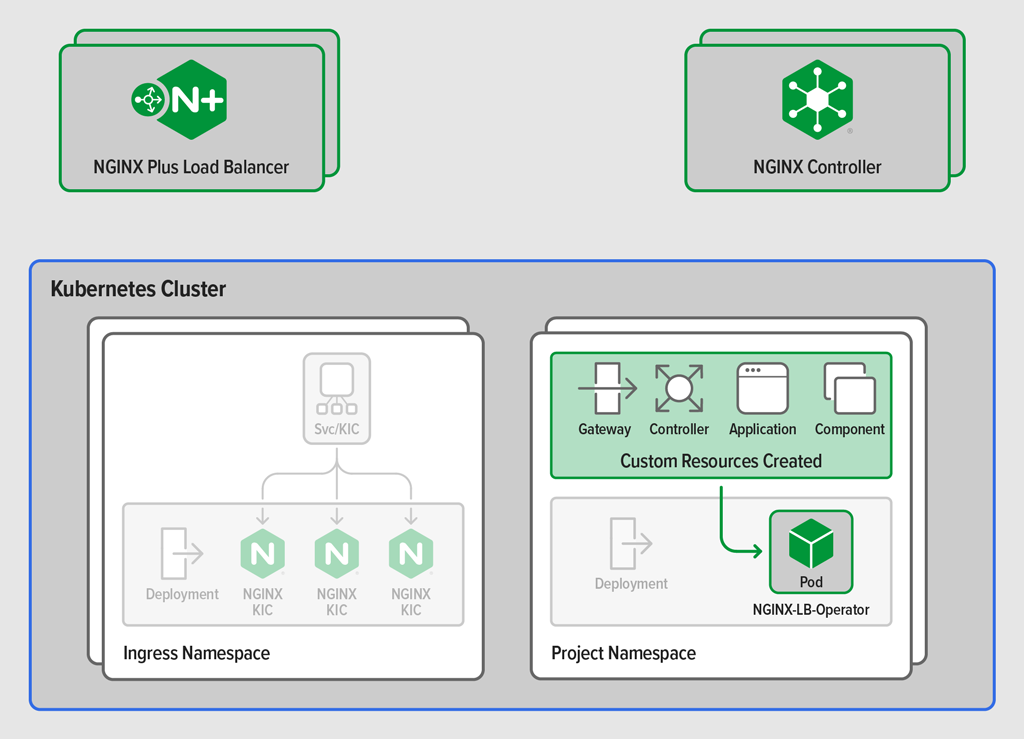

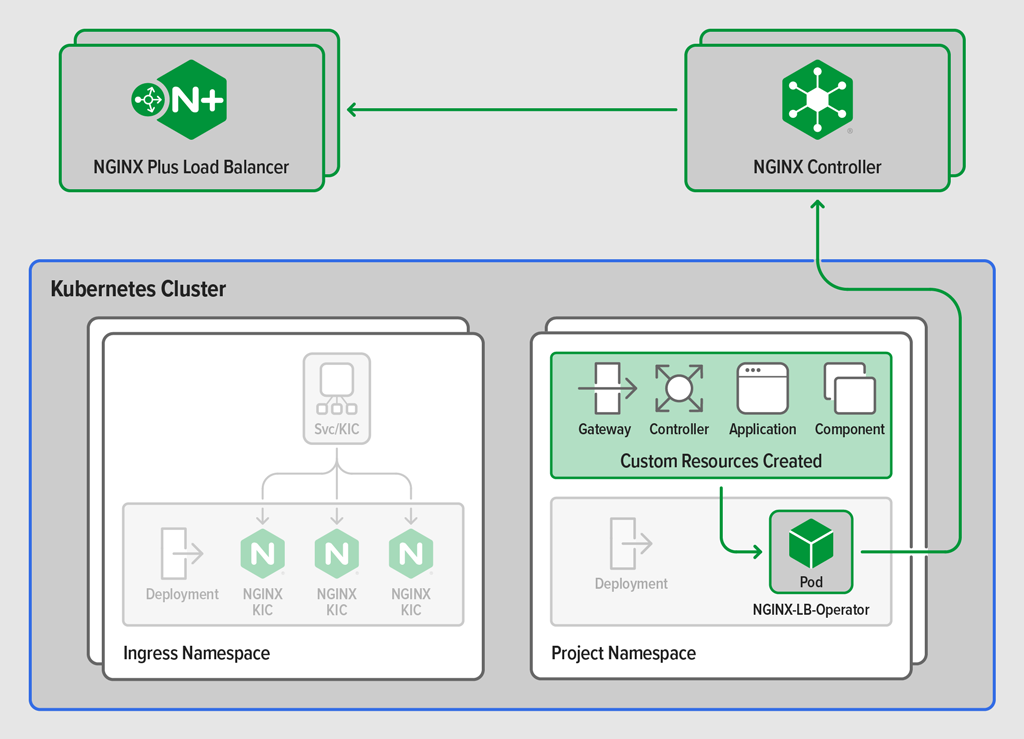

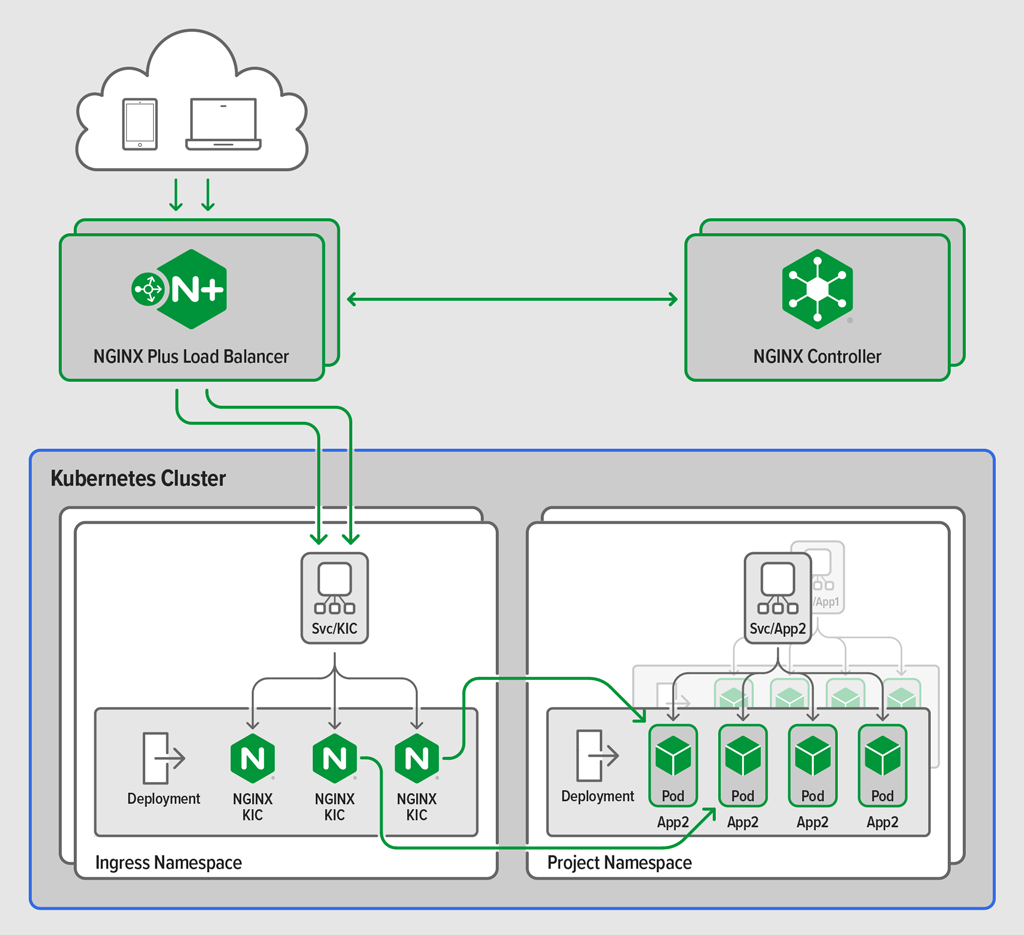

In cases like these, you probably want to merge the external load balancer configuration with Kubernetes state, and drive the NGINX Controller API through a Kubernetes Operator. The diagram shows a sample deployment that includes just such an operator (NGINX-LB-Operator) for managing the external load balancer, and highlights the differences between the NGINX Plus Ingress Controller and NGINX Controller.

In the diagram:

- Ingress resources (blue box) – A standard Ingress resource and an NGINX VirtualServer resource are defined in the project namespace.

- Blue arrows – Ingress resources are created in the Kubernetes API, and picked up by the NGINX Plus Ingress Controller which is running in a different namespace.

- Custom resources (green box) – Custom resources, which are instantiations of CRDs installed with NGINX-LB-Operator, are defined in your project’s namespace and consumed by NGINX-LB-Operator running in the same namespace.

- Green arrows – Resources are created in the API and then picked up by NGINX-LB-Operator. Unlike the Ingress Controller which configures a local NGINX Plus instance running in the same Pod, NGINX-LB-Operator makes an API call to NGINX Controller.

- Orange arrows – NGINX Controller configures the external NGINX Plus instance to load balance onto the NGINX Plus Ingress Controller.

In this topology, the custom resources contain the desired state of the external load balancer and set the upstream (workload group) to be the NGINX Plus Ingress Controller. NGINX-LB-Operator collects information on the Ingress Pods and merges that information with the desired state before sending it on to the NGINX Controller API.
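The merge step can be sketched in a few lines of Python. This is not the Operator’s actual code (which is Ansible‑based) and not the real NGINX Controller payload format. The function `merge_component`, the `ingress_port` key, and the `address` and `ready` Pod fields are all assumptions made for illustration.

```python
from __future__ import annotations


def merge_component(desired: dict, ingress_pods: list[dict]) -> dict:
    """Combine the declared load balancer state with observed Ingress Pods.

    `desired` is the spec taken from a custom resource (hypothetical shape).
    `ingress_pods` is what the Operator observed, one dict per Pod with an
    `address` and a `ready` flag (also hypothetical).
    """
    port = desired["ingress_port"]
    # Use a set so two Pods reachable at the same address yield one server.
    addresses = {pod["address"] for pod in ingress_pods if pod.get("ready")}
    servers = [f"{address}:{port}" for address in sorted(addresses)]

    # Copy everything except the helper key, then attach the upstream servers.
    merged = {key: value for key, value in desired.items() if key != "ingress_port"}
    merged["workload_group"] = {"servers": servers}
    return merged


if __name__ == "__main__":
    desired = {"name": "web", "uri": "/", "ingress_port": 443}
    pods = [
        {"address": "10.0.0.2", "ready": True},
        {"address": "10.0.0.1", "ready": True},
        {"address": "10.0.0.3", "ready": False},  # skipped: not ready
    ]
    print(merge_component(desired, pods))
```

Because the server list is recomputed from the observed Pods on every pass, scaling the Ingress layer changes only the input to this function; the declared state in the custom resource stays the same.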

The NGINX Load Balancer Operator

Writing an Operator for Kubernetes might seem like a daunting task at first, but Red Hat and the Kubernetes open source community maintain the Operator Framework, which makes the task relatively easy. The Operator SDK enables anyone to create a Kubernetes Operator using Go, Ansible, or Helm. At F5, we already publish Ansible collections for many of our products, including the certified collection for NGINX Controller, so building an Operator to manage external NGINX Plus instances and interface with NGINX Controller is quite straightforward.

I used the Operator SDK to create the NGINX Load Balancer Operator, NGINX-LB-Operator, which can be deployed with a Namespace or Cluster Scope and watches for a handful of custom resources. The custom resources map directly onto NGINX Controller objects (Certificate, Gateway, Application, and Component) and so represent NGINX Controller’s application‑centric model directly in Kubernetes. The custom resources configured in Kubernetes are picked up by NGINX-LB-Operator, which then creates equivalent resources in NGINX Controller.

NGINX-LB-Operator enables you to manage configuration of an external NGINX Plus instance using NGINX Controller’s declarative API. Because NGINX Controller is managing the external instance, you get the added benefits of monitoring and alerting, and the deep application insights which NGINX Controller provides.

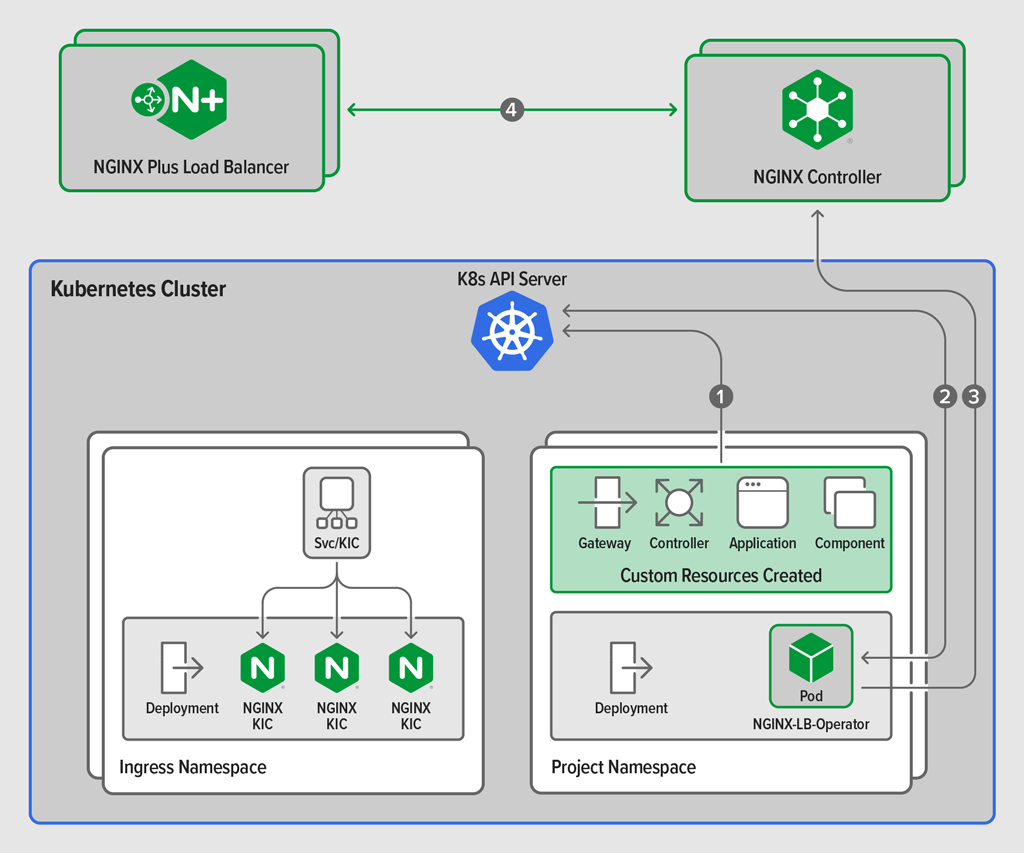

This diagram illustrates how:

- You create custom resources in the project namespace which are sent to the Kubernetes API.

- NGINX-LB-Operator sees the newly configured resources and collects the desired state of the component and the deployment information from the Ingress controller in the Ingress namespace.

- A merged configuration from your definition and current state of the Ingress controller is sent to NGINX Controller.

- The configuration is delivered to the requested NGINX Plus instances and NGINX Controller begins collecting metrics for the new application.

Detailed deployment instructions and a sample application are provided on GitHub. If you don’t like role play or you came here for the TL;DR version, head there now.

A Sample Deployment

So let’s role play. I’ll be Susan and you can be Dave.

As Dave, you run a line of business at your favorite imaginary conglomerate. You’re down with the kids, and have your finger on the pulse, etc., so you deploy all of your applications and microservices on OpenShift and for Ingress you use the NGINX Plus Ingress Controller for Kubernetes. All of your applications are deployed as OpenShift projects (namespaces) and the NGINX Plus Ingress Controller runs in its own Ingress namespace.

You were never happy with the features available in the default Ingress specification and always thought ConfigMaps and Annotations were a bit clunky. This is why you were over the moon when NGINX announced that the NGINX Plus Ingress Controller was going to start supporting its own CRDs. Today your application developers use the VirtualServer and VirtualServerRoute resources to manage deployment of applications to the NGINX Plus Ingress Controller and to configure the internal routing and error handling within OpenShift.

Sometimes you even expose non‑HTTP services, all thanks to the TransportServer custom resources also available with the NGINX Plus Ingress Controller. Developers can define the custom resources in their own project namespaces which are then picked up by NGINX Plus Ingress Controller and immediately applied. It’s awesome, but you wish it were possible to manage the external network load balancer at the edge of your OpenShift cluster just as easily. The times when you need to scale the Ingress layer always cause your lumbago to play up.

It’s Saturday night and you should be at the disco, but yesterday you had to scale the Ingress layer again and now you have a pain in your lower back. Ping! In a cloud of smoke your fairy godmother Susan appears.

“Hello, Dave,” she says.

“Who are you? Look what you’ve done to my Persian carpet,” you reply.

Ignoring your attitude, Susan proceeds to tell you about NGINX-LB-Operator, now available on GitHub. She explains that with an NGINX Plus cluster at the edge of OpenShift and NGINX Controller to manage it from an application‑centric perspective, you can create custom resources which define how to configure the NGINX Plus load balancer.

NGINX-LB-Operator watches for these resources and uses them to send the application‑centric configuration to NGINX Controller. In turn, NGINX Controller generates the required NGINX Plus configuration and pushes it out to the external NGINX Plus load balancer.

Your end users get immediate access to your applications, and you get control over changes which require modification to the external NGINX Plus load balancer!

NGINX Controller collects metrics from the external NGINX Plus load balancer and presents them to you from the same application‑centric perspective you already enjoy. And next time you scale the NGINX Plus Ingress layer, NGINX-LB-Operator automatically updates NGINX Controller and the external NGINX Plus load balancer for you. No more back pain!

Conclusion

Kubernetes is a platform built to manage containerized applications. NGINX Controller provides an application‑centric model for thinking about and managing application load balancing. NGINX-LB-Operator combines the two and enables you to manage the full stack end-to-end without needing to worry about any underlying infrastructure. Head on over to GitHub for more technical information about NGINX-LB-Operator and a complete sample walk‑through.

Learn more about our solutions:

- Request a free trial of NGINX Controller

- Contact us to discuss your use cases

- Get the NGINX Ingress Operator for OpenShift

- Register for an instructor‑led course