A typical eretailer gets nearly a quarter of its yearly revenue between Thanksgiving and Christmas. For many online stores, the two busiest days of the year are Black Friday (the day after Thanksgiving) and the following Cyber Monday, which sees an overwhelming number of ecommerce sales. While those days account for a huge spike in sales, they also represent huge spikes in traffic and the associated stress and uncertainty about site performance and uptime.

Shoppers demand high performance and a flawless experience from your site. If your page load time increases by just one second due to an influx of traffic, you can expect to see conversions reduced by 7%. And at peak traffic times, more than 75% of online consumers will leave for a competitor’s site rather than suffer delays.

Google search results are influenced by site performance, so you can create a virtuous circle: faster performance brings you more customers, through improved search results, and you get more conversions, as shoppers have a better experience.

To learn how to improve your website’s performance – not only at peak times, but every day of the year – start with our blog post, How to Manage Sudden Traffic Surges and Server Overload. Then, use the detailed guidance in this post to refine your strategy.

Increase Capacity with an HTTP Gateway

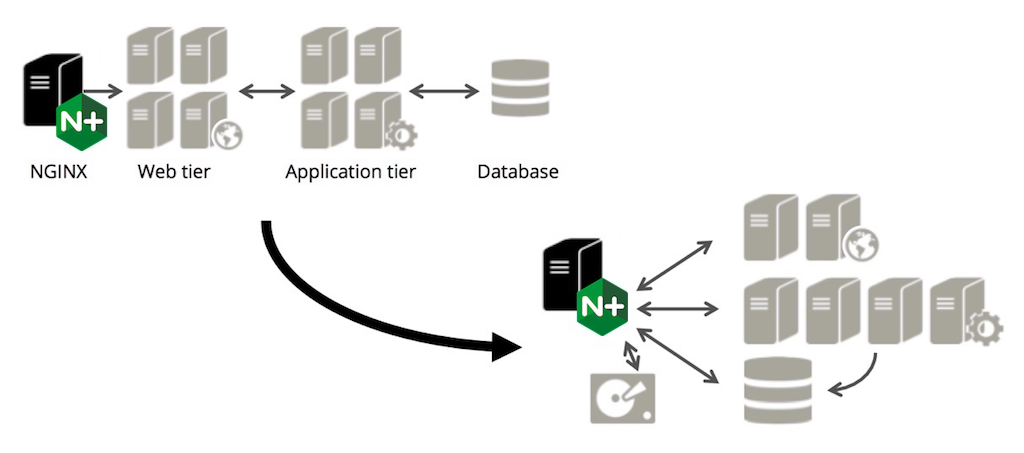

An HTTP gateway is known technically as a reverse proxy server. By either name, an HTTP gateway receives requests from user devices – clients – on the Web, then dispatches the requests to other servers over a local area network. Once a response is generated, the gateway returns it to the client that made the request.

Many sites have a simple architecture: a web server that runs application code to put together web pages, often with a database server supporting the application. More often than not, the application server is an Apache server.

Apache and many other web application platforms are, however, limited in their ability to handle significant amounts of traffic. Every new browser session opens six to eight server connections, and Apache devotes significant memory to each connection. The connections stay open while pages are requested, put together, returned, and acknowledged.

If several thousand users or more are online at once, tens of thousands of connections are needed. But three things can happen:

- The Apache server can run out of memory and start swapping sessions out to disk, greatly slowing performance. If connection requests continue to mount, swapping can increase, affecting more and more of the site’s users.

- The Apache server can run out of connections. To save memory, the server software can limit the number of simultaneous connections, but the effect is similar to running out of memory: the available connections get used up, new connection requests have to wait, and users see slow performance – especially if connection requests back up.

- The application server can run out of CPU capacity due to managing connections and running the application, again making users wait and causing requests to back up.

An HTTP gateway reverse proxy server can prevent these problems. The reverse proxy server receives requests from the web and feeds them over a local area network to the Apache server. The Apache server then runs application code and returns the needed file to the reverse proxy server, which acknowledges receipt quickly. The Apache server can run at close to its rated capacity because it doesn’t have to hold thousands of connections open waiting for acknowledgements from faraway clients.

NGINX Open Source and NGINX Plus are very widely used for this purpose, with other functions added on as needed (see below). NGINX uses an event‑driven architecture that can handle hundreds of thousands of connections concurrently, translating slow Internet‑side connections to high‑speed local transactions.

By using NGINX or NGINX Plus as a reverse proxy:

- An Apache server can be run at full speed, receiving requests and responding over the local area network.

- Static files are easily cached on the NGINX Plus server, so the load on the Apache server and, in turn, on the database server is significantly reduced.

- More Apache servers can be added, with the NGINX Plus server handling load balancing among them (see below).
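As a rough illustration of the arrangement described above, the following minimal sketch places NGINX in front of a single Apache server. The upstream name `apache_backend`, the backend address `127.0.0.1:8080`, the hostname `example.com`, and the keepalive value are assumptions chosen for the example, not settings taken from this article. The blocks belong in the `http` context.

```nginx
# Hypothetical backend: Apache listening on the local network or loopback
upstream apache_backend {
    server 127.0.0.1:8080;
    # Keep a pool of idle connections open to the backend (per worker process)
    keepalive 32;
}

server {
    listen 80;
    server_name example.com;   # placeholder hostname

    location / {
        proxy_pass http://apache_backend;

        # HTTP/1.1 with an empty Connection header is needed for upstream keepalive
        proxy_http_version 1.1;
        proxy_set_header Connection "";

        # Pass the original host and client address on to the application
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

        # Buffer the backend response so Apache is released quickly
        # while NGINX feeds the response to a slow client
        proxy_buffering on;
    }
}
```

Response buffering is enabled by default; it is spelled out here only because it is the mechanism that lets the backend hand off a response and move on while NGINX deals with the slower Internet-side connection.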

Cache and Microcache Your Site Content

Caching objects has a multitude of beneficial effects. The large majority of the data on a typical web page is made up of static objects – image files such as JPEGs and PNG files, code files such as JavaScript and CSS, and so on. (You can see how this would be the case on a typical page from an ecommerce site.) Caching these files can greatly speed performance. You can also briefly cache dynamically generated files, which is called microcaching.

There are three levels of caching for static files:

- Local caching. You can support local caching of static assets through configuration settings and by repeating the same assets on multiple pages. Sample configuration settings for NGINX and NGINX Plus are given below. (You are likely to get some local caching‑type benefits from organizational and service provider caching.)

- Content delivery network (CDN) caching. A CDN puts content closer to the user and onto dedicated machines that answer requests quickly. However, the impact of HTTP/2 on CDNs may reduce their benefits without careful optimization; see our whitepaper, HTTP/2 for Web Application Developers.

- Caching server. You can put a caching server in front of web and application servers. NGINX combines static object caching with web server capabilities, and NGINX Plus adds optimized caching and monitoring features.
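For the local (browser) caching level, one possible approach is to have NGINX attach long-lived caching headers to static assets. The sketch below is an assumption-laden example: the file extensions, the 30-day lifetime, and the `/var/www/static` root are placeholders to adapt to your own site. The `location` block goes inside a `server` block.

```nginx
# Static assets: let browsers and intermediate caches keep them for 30 days
location ~* \.(?:jpg|jpeg|png|gif|ico|css|js)$ {
    root /var/www/static;             # hypothetical directory holding the files

    expires 30d;                      # sets Expires and Cache-Control: max-age
    add_header Cache-Control "public";
}
```

Long lifetimes work best when asset filenames change whenever their content changes (for example, by including a version string), since browsers will not re-request a file they consider fresh.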

Most analysis of caching’s benefits focuses on faster file retrieval. However, all forms of caching have an additional benefit that is harder to measure, but very important for high‑performance websites: offloading traffic from web and application servers, which frees them up to deliver high performance, and prevents problems caused by overloading.

The following code shows how to use the proxy_cache_path and proxy_cache directives in an NGINX or NGINX Plus configuration. For more details, see the NGINX Plus Admin Guide, and for an overview of caching configuration, see our Guide to Caching with NGINX and NGINX Plus.

```nginx
# Define a content cache location on disk
proxy_cache_path /tmp/cache keys_zone=mycache:10m inactive=60m;

server {
    listen 80;
    server_name localhost;

    location / {
        proxy_pass http://localhost:8080;

        # Reference the cache in a location that uses proxy_pass
        proxy_cache mycache;
    }
}
```

The idea of running a PHP (or similar) server is to serve freshly assembled content to every user. This is a simple and appealing approach, but it bogs down when traffic skyrockets and the application server can’t keep up.

Microcaching, as mentioned above, means caching a dynamically generated page for a brief period of time, perhaps as short as one second. This form of caching converts a dynamically generated object into a new, static object for a brief, but useful, period of time. For a web page that gets tens, or up to hundreds, of requests per second, most of the requests now come out of the cache with minimal effect on page freshness.

Like other PHP‑driven frameworks, Magento is a good example of the benefits of microcaching. Magento is a flexible, powerful content management system that’s widely used for ecommerce. Magento is frequently implemented as a web and application server running PHP to assemble pages, calling a database server for content. This assemblage works great up to a point, then can die an ugly death when traffic exceeds the application server’s ability to cope – which can easily happen to an ecommerce site, especially during the holidays.

To prevent overload, put a reverse proxy server, such as an NGINX or NGINX Plus server, in front of a Magento application server, then use the NGINX Plus server for microcaching. Putting the NGINX Plus server in front offloads Internet traffic handling and caching from Magento, while cutting peak processing demands on the application server by one or two orders of magnitude. It also opens the door to expansion to multiple application servers, load balancing among them, and monitoring, all of which allow the site to scale without changing the core application logic at all.
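A microcaching setup along these lines might look like the following sketch. It assumes an application server at `localhost:8080`; the zone name `microcache`, the cache path, the size limits, and the one-second lifetime are illustrative choices rather than recommended values.

```nginx
# Hypothetical cache zone dedicated to short-lived dynamic content
proxy_cache_path /tmp/microcache keys_zone=microcache:10m max_size=500m inactive=10m;

server {
    listen 80;
    server_name localhost;

    location / {
        proxy_pass http://localhost:8080;

        proxy_cache microcache;
        # Treat successful responses as fresh for one second
        proxy_cache_valid 200 1s;

        # When an entry is missing, let one request populate it
        # while other requests for the same item wait
        proxy_cache_lock on;

        # Serve the stale copy while a refresh is in progress
        proxy_cache_use_stale updating;

        # Expose HIT / MISS / EXPIRED and so on for troubleshooting
        add_header X-Cache-Status $upstream_cache_status;
    }
}
```

Two behaviors are worth checking before relying on a configuration like this: by default NGINX does not cache responses that carry a `Set-Cookie` header, and caching headers sent by the application (such as `Cache-Control`) take priority over `proxy_cache_valid`. Pages personalized per user, such as carts and account pages, generally need to be excluded from the cache.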

Add Multiple Application Servers with Load Balancing

If you have a simple web server architecture, adding load balancing is the quickest step you can take to make it more flexible and resilient. (The NGINX Plus Admin Guide has extensive details on load balancing.)

Load balancing assumes a few things that we should spell out, because each item can be either a gateway for better performance, or a performance bottleneck:

- Web and/or application servers. A reverse proxy server can improve performance even if you have just one application server, as it offloads Internet traffic from the application server, can implement static caching, and provides the first building block for a more robust setup. However, the promise of a more robust setup is only fully realized when you use multiple web and/or application servers and load balance between them.

- Smart load‑balancing strategy. You need a load‑balancing strategy that makes sense for your web application. “Next request goes to next server” – also known as round robin – is a good start. But taking steps to send traffic to a less‑used server, to keep specific clients on the same server during a session, and to monitor processing as it occurs are all important elements of an effective load‑balancing strategy. (NGINX Plus includes advanced load‑balancing and monitoring features for these purposes.)

- Resource extensibility and flexibility. A smart load‑balancing strategy allows you to almost arbitrarily route traffic and add resources, whether running on physical servers or in the cloud, to meet spikes and long‑term increases in demand.

- Use of additional features. Using your reverse proxy server to support additional features such as content caching (as mentioned), SSL termination, HTTP/2 termination, and so on is an important step toward maximizing your investment in a more complex site architecture and minimizing the impact of placing an intermediary between your application server and the Internet.
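The points above can be sketched as a small load-balancing configuration. The upstream name `app_servers` and the `10.0.0.x` addresses are hypothetical, and the failure thresholds are example values only.

```nginx
upstream app_servers {
    # Send each request to the server with the fewest active connections.
    # Without this directive, NGINX uses round robin.
    least_conn;

    # Passive health checks: after 3 failed attempts within 30 seconds,
    # stop sending traffic to that server for 30 seconds
    server 10.0.0.11:8080 max_fails=3 fail_timeout=30s;
    server 10.0.0.12:8080 max_fails=3 fail_timeout=30s;

    # Used only when the servers above are unavailable
    server 10.0.0.13:8080 backup;
}

server {
    listen 80;

    location / {
        proxy_pass http://app_servers;
    }
}
```

To keep a given client on the same server for the length of a session, `ip_hash;` can be used in place of `least_conn;` (in that case, drop the `backup` server, which the `ip_hash` method does not support). Adding capacity is then a matter of adding another `server` line to the `upstream` block and reloading the configuration.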

Note: For a deeper description of where a reverse proxy server fits in your application architecture, see our article on Layer 7 load balancing.

Limit Incoming Requests per User

A typical web page may have more than 100 assets, including the core HTML file, images, and code files. With the typical browser opening half a dozen or so connections per user session, a user moving quickly through your site may generate a small blizzard of requests.

However, both legitimate and illegitimate nonhuman users may generate many more requests than an actual visitor. During busy periods, you want to take extra care to preserve site capability for regular users.

Spiders for search engines are one example of a nonhuman, resource‑intensive user; a spider can hammer a site, slowing performance for regular users. Use the HTTP gateway to restrict access, shutting out high‑volume (generally, not human) users. Restrictions include the number of connections per IP address, the request rate, and the download speed allowed for a connection. You can also take additional steps to prevent distributed denial of service (DDoS) attacks.
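The three kinds of restriction mentioned above map onto standard NGINX directives. In the sketch below, the zone names and every numeric limit are assumptions picked for illustration; suitable values depend on how many assets your pages load and how your legitimate users behave.

```nginx
# Shared-memory zones keyed by client IP address (defined in the http context)
limit_conn_zone $binary_remote_addr zone=per_ip_conn:10m;
limit_req_zone  $binary_remote_addr zone=per_ip_req:10m rate=10r/s;

server {
    listen 80;

    location / {
        # At most 10 simultaneous connections per IP address
        limit_conn per_ip_conn 10;

        # Average of 10 requests per second per IP address,
        # allowing short bursts of up to 20 extra requests without delay
        limit_req zone=per_ip_req burst=20 nodelay;

        # Cap the response transfer rate for each connection (bytes per second)
        limit_rate 500k;

        proxy_pass http://localhost:8080;   # hypothetical application server
    }
}
```

Because many users can share one IP address (for example, behind a corporate proxy or mobile carrier gateway), limits that are too tight can block real shoppers, so it is prudent to test them against normal traffic before a peak period.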

Conclusion

Black Friday, Cyber Monday, new product releases, and other real‑world events are an opportunity to examine website architecture for weak points that can lead to slow response times and downtime. But effective performance optimization is a continual process.

Set goals, review your architecture, then search for weak points and vulnerabilities. NGINX and NGINX Plus are widely known remedies for site performance problems, opening the door for many different optimization techniques.

To try NGINX Plus, start your free 30-day trial today or contact us to discuss your use cases.